12 changed files with 68 additions and 9 deletions

README.md (+38, -5)


README_CN.md (+30, -4)


BIN

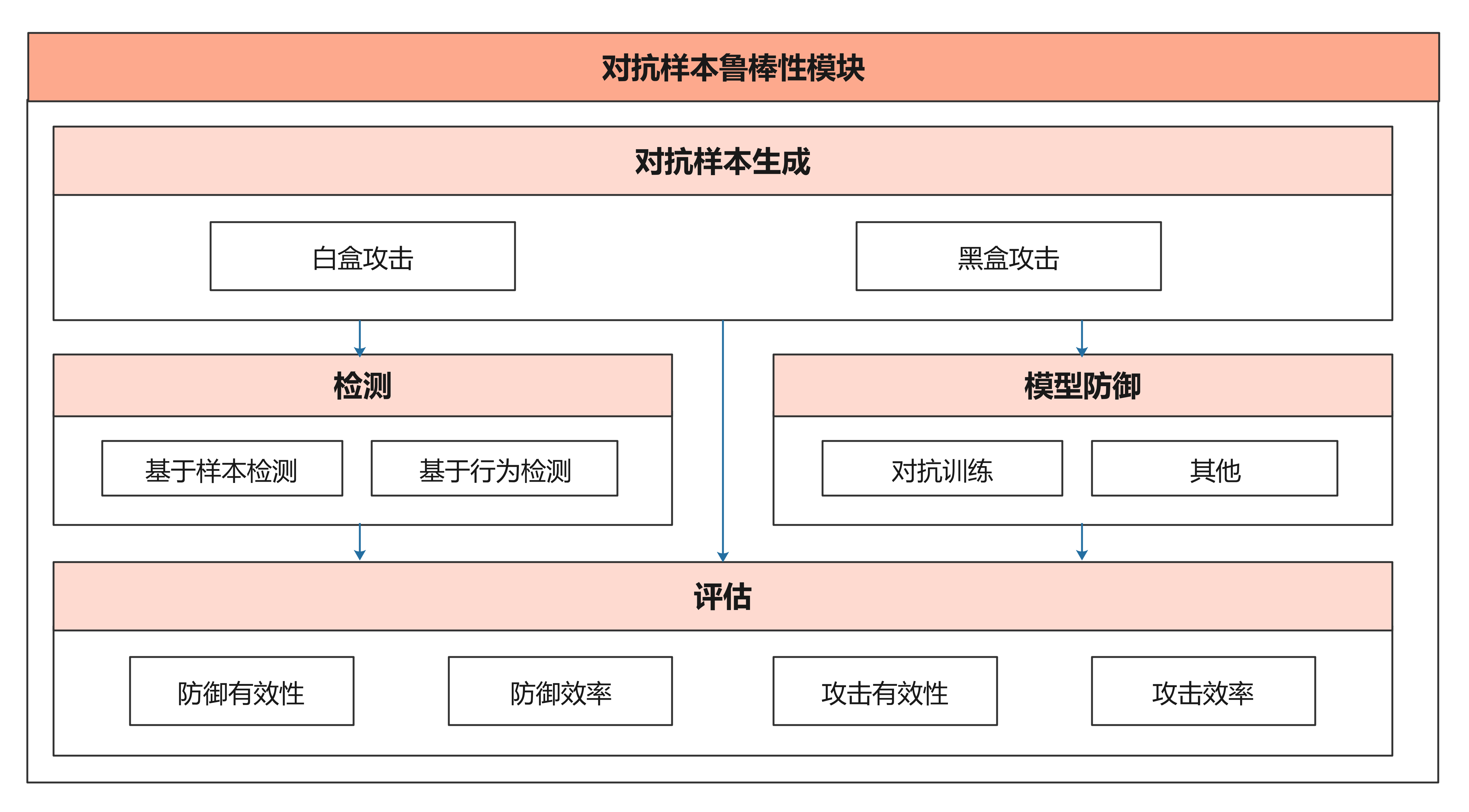

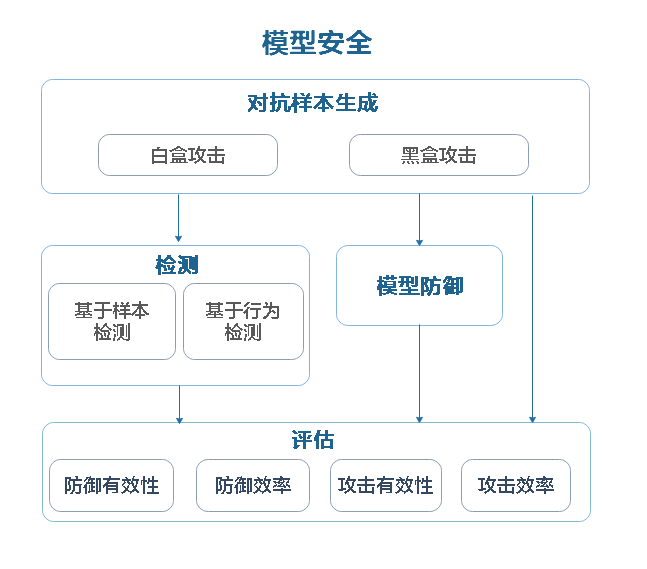

docs/adversarial_robustness_cn.png


BIN

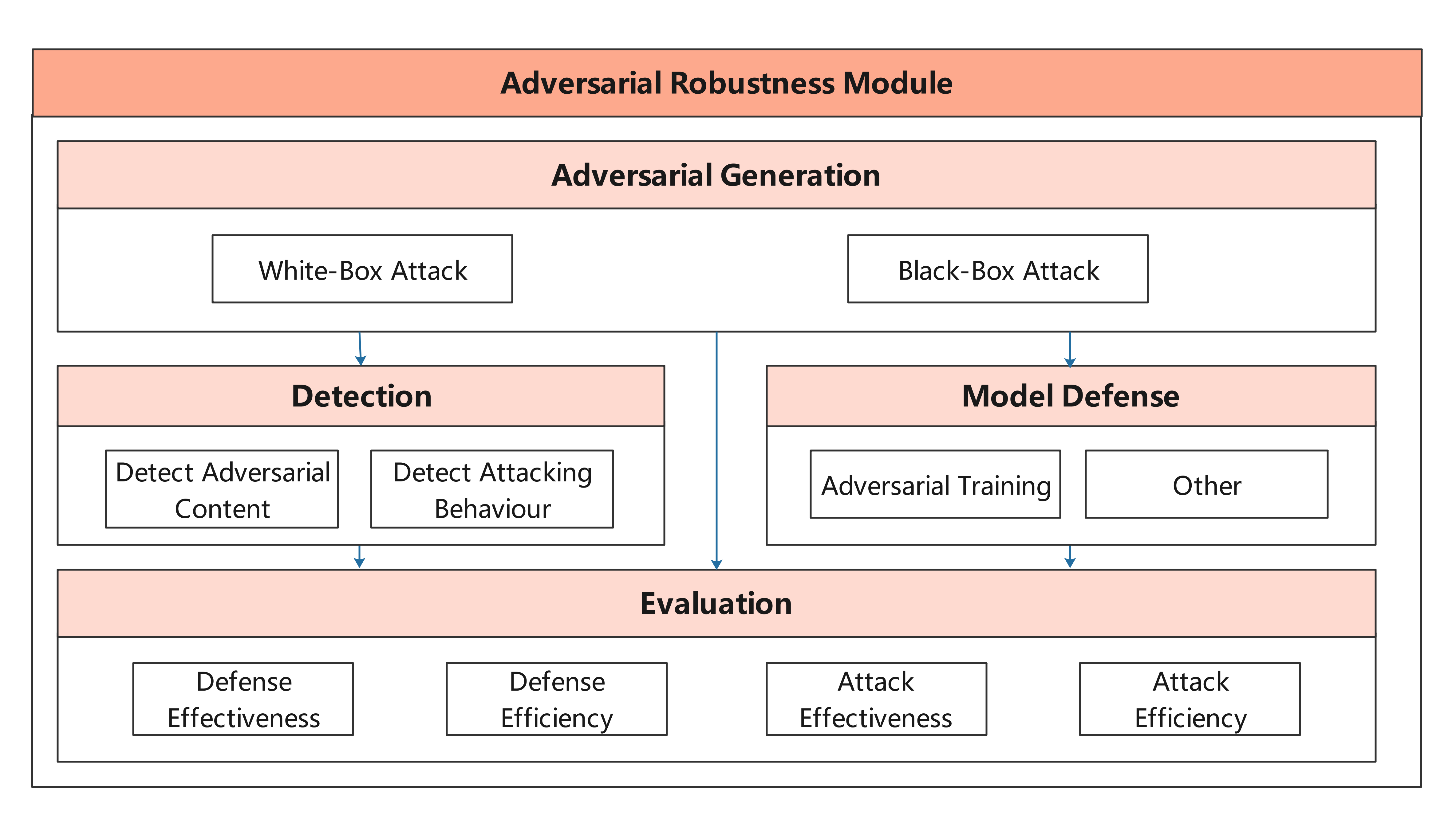

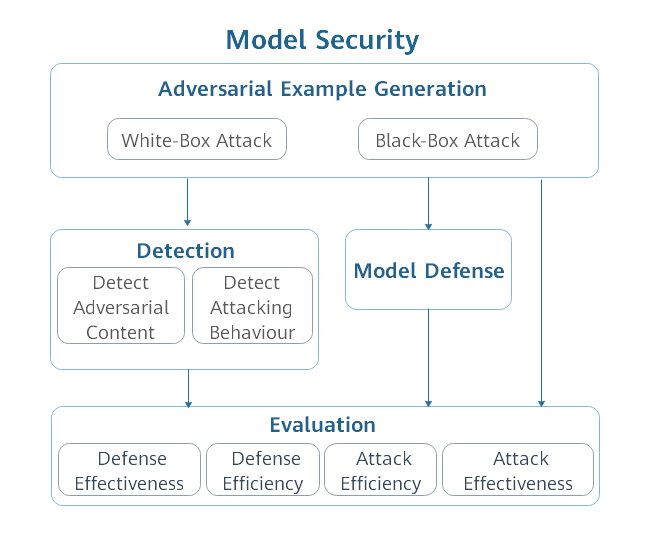

docs/adversarial_robustness_en.png


BIN

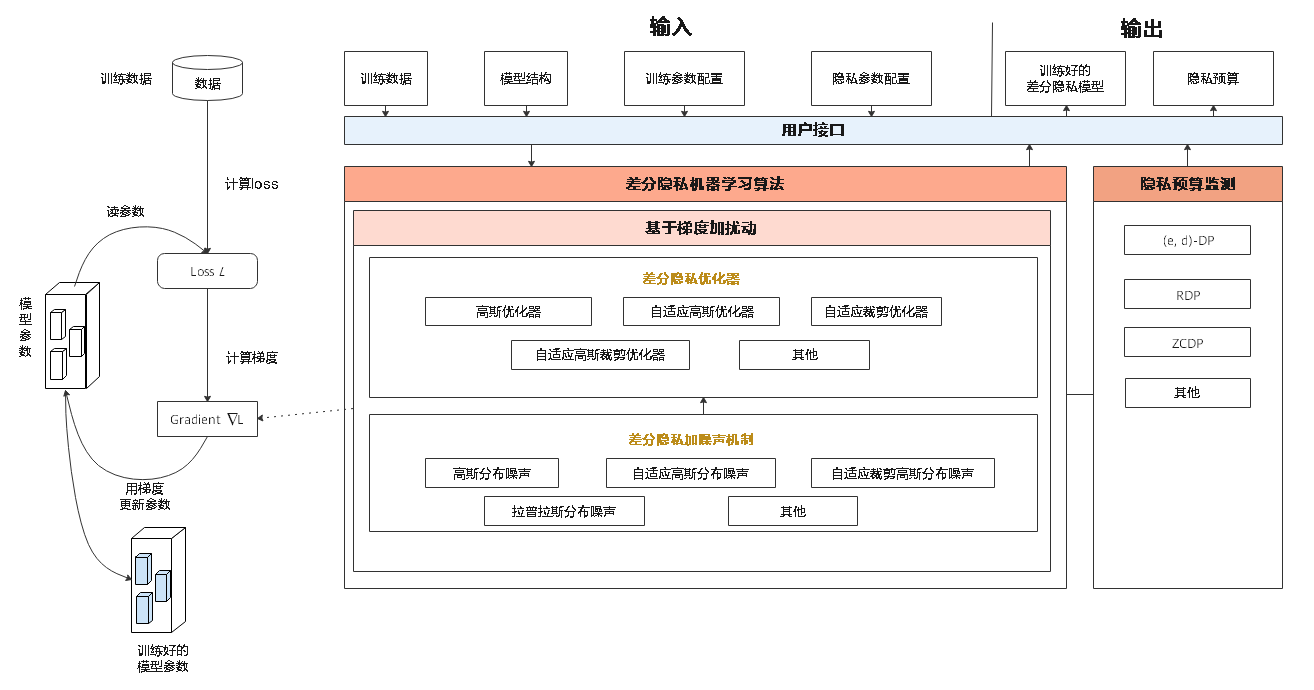

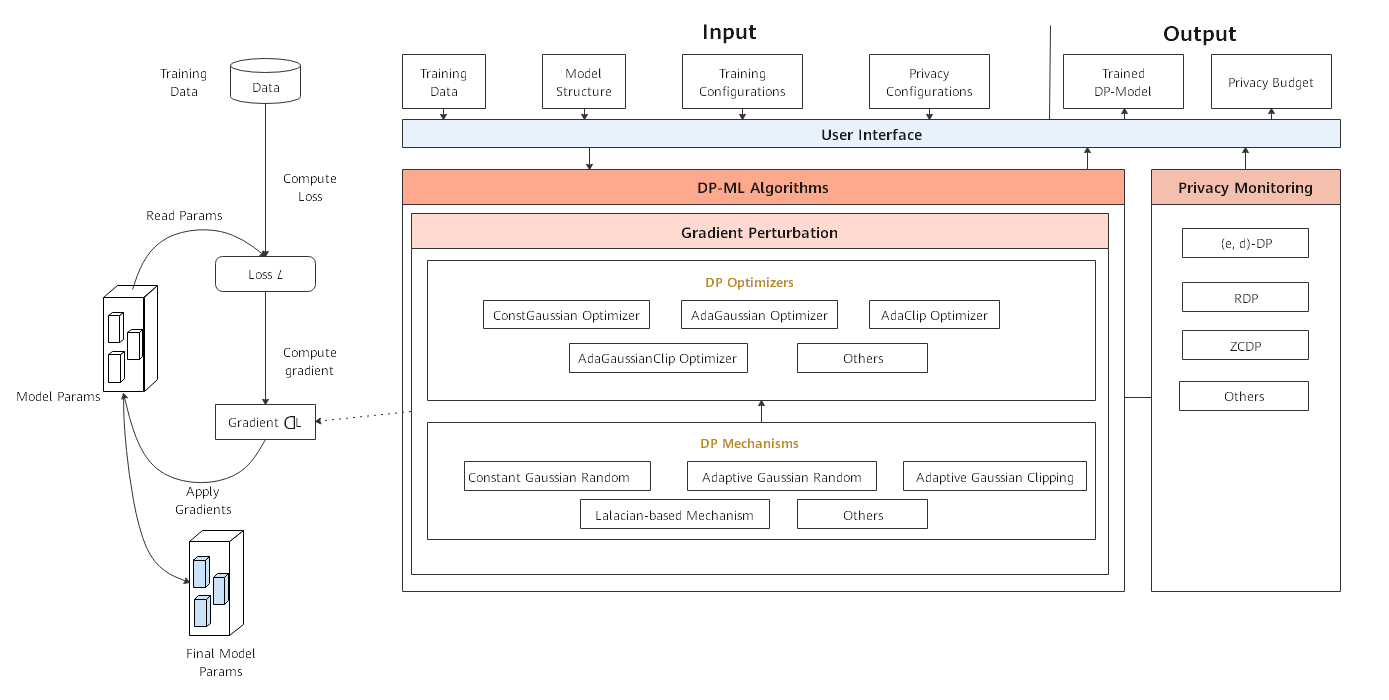

docs/differential_privacy_architecture_cn.png


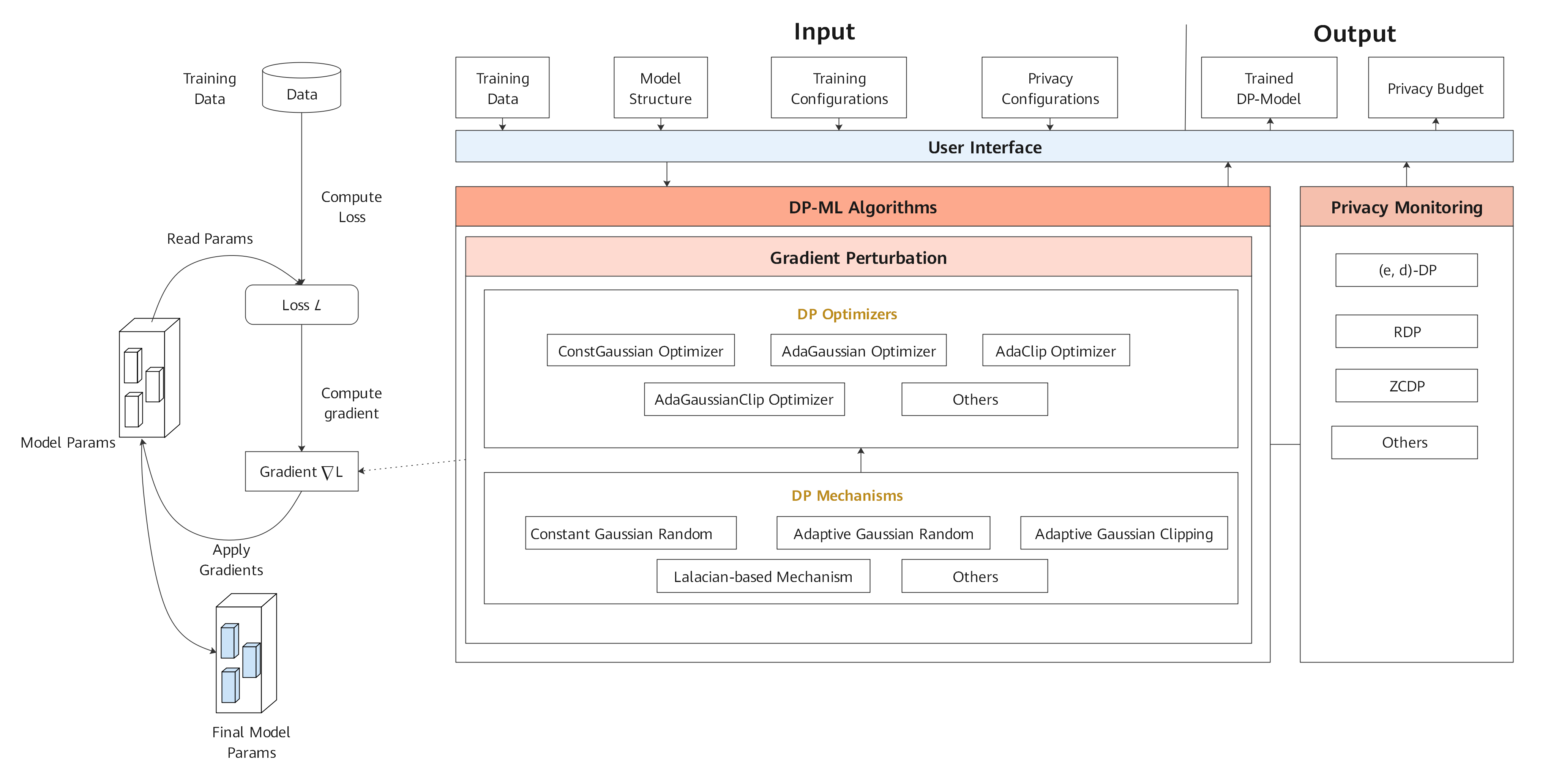

BIN

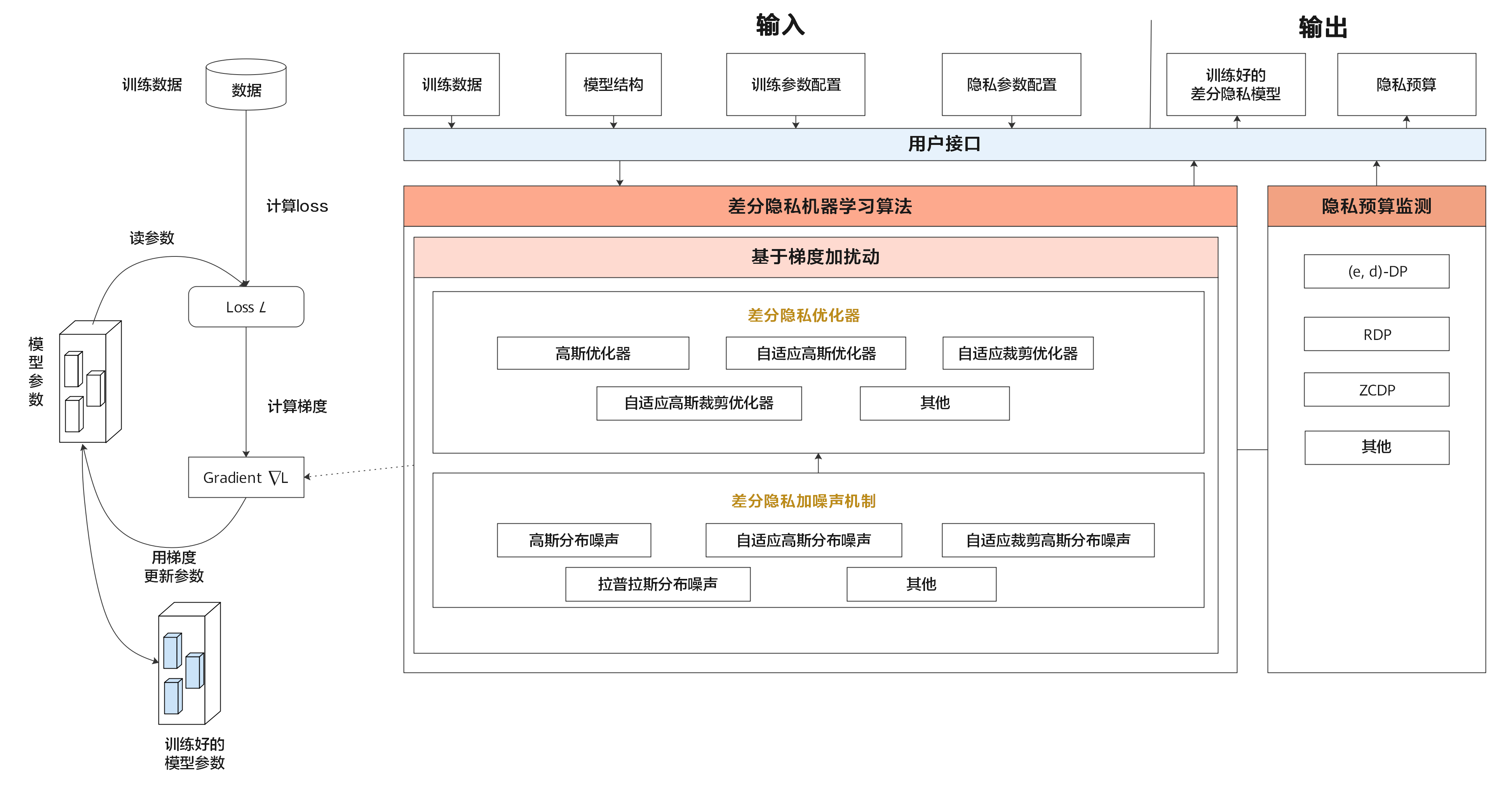

docs/differential_privacy_architecture_en.png


BIN

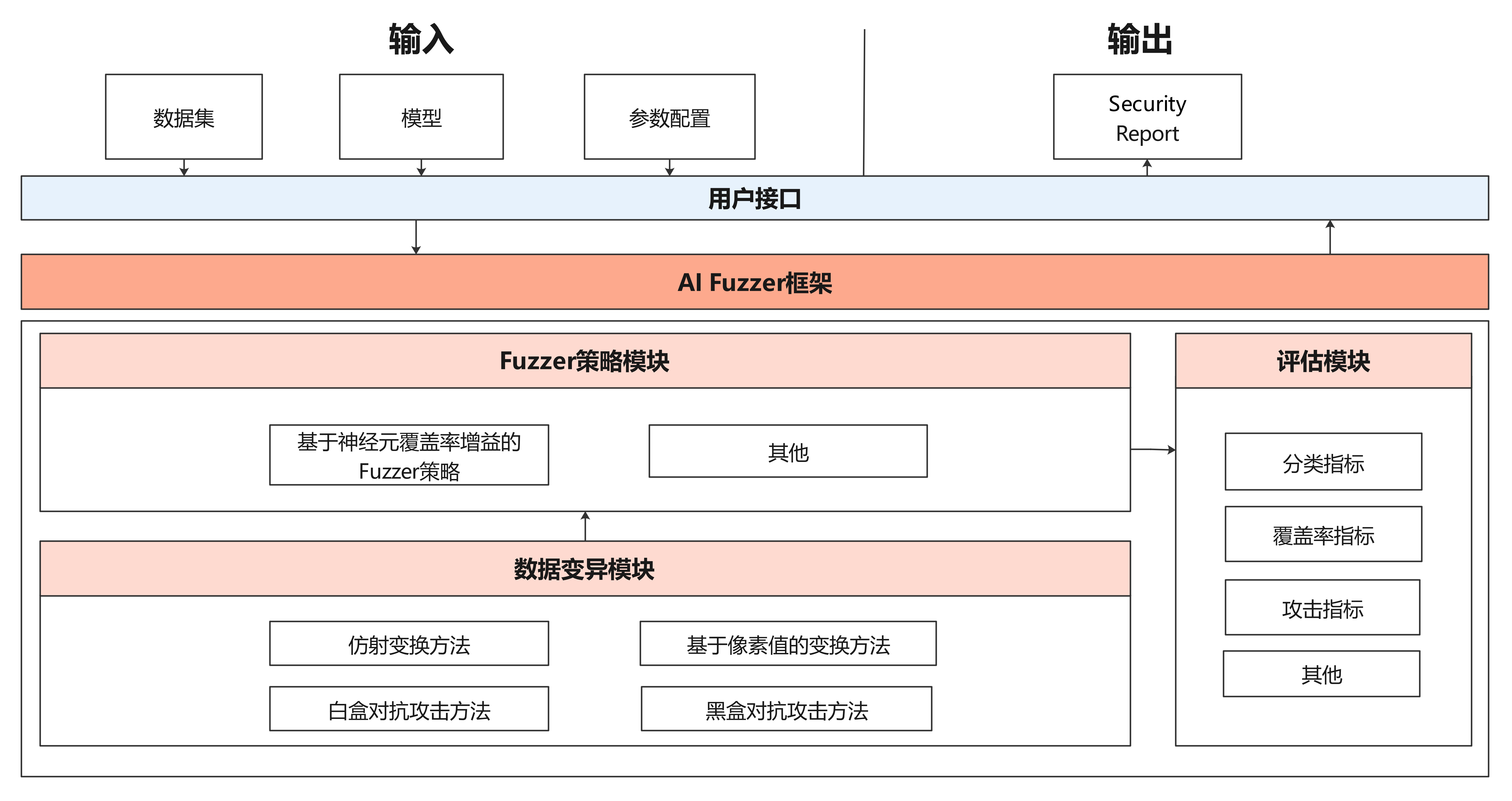

docs/fuzzer_architecture_cn.png


BIN

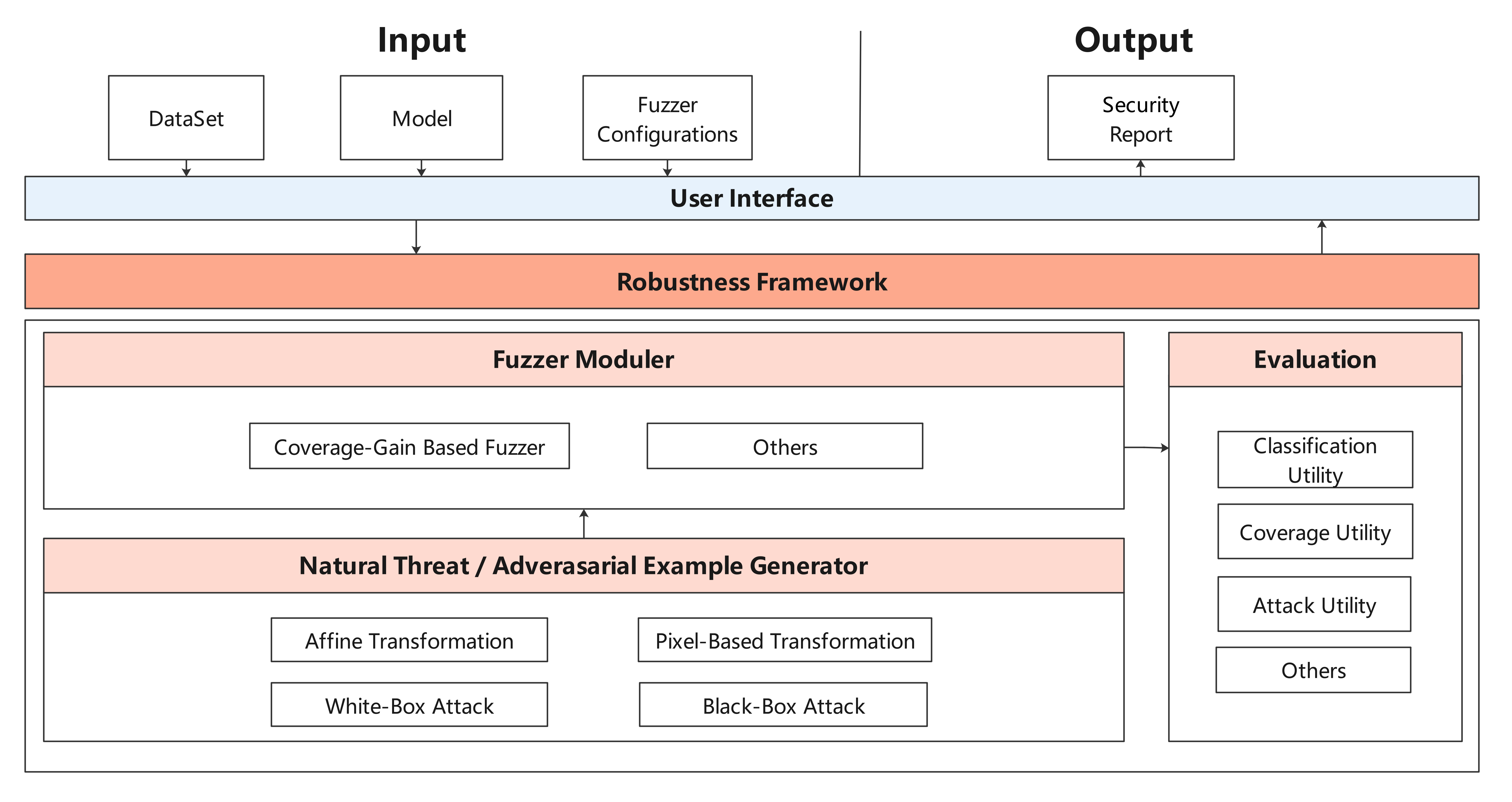

docs/fuzzer_architecture_en.png


docs/mindarmour_architecture.png (binary file)


docs/mindarmour_architecture_cn.png (binary file)


BIN

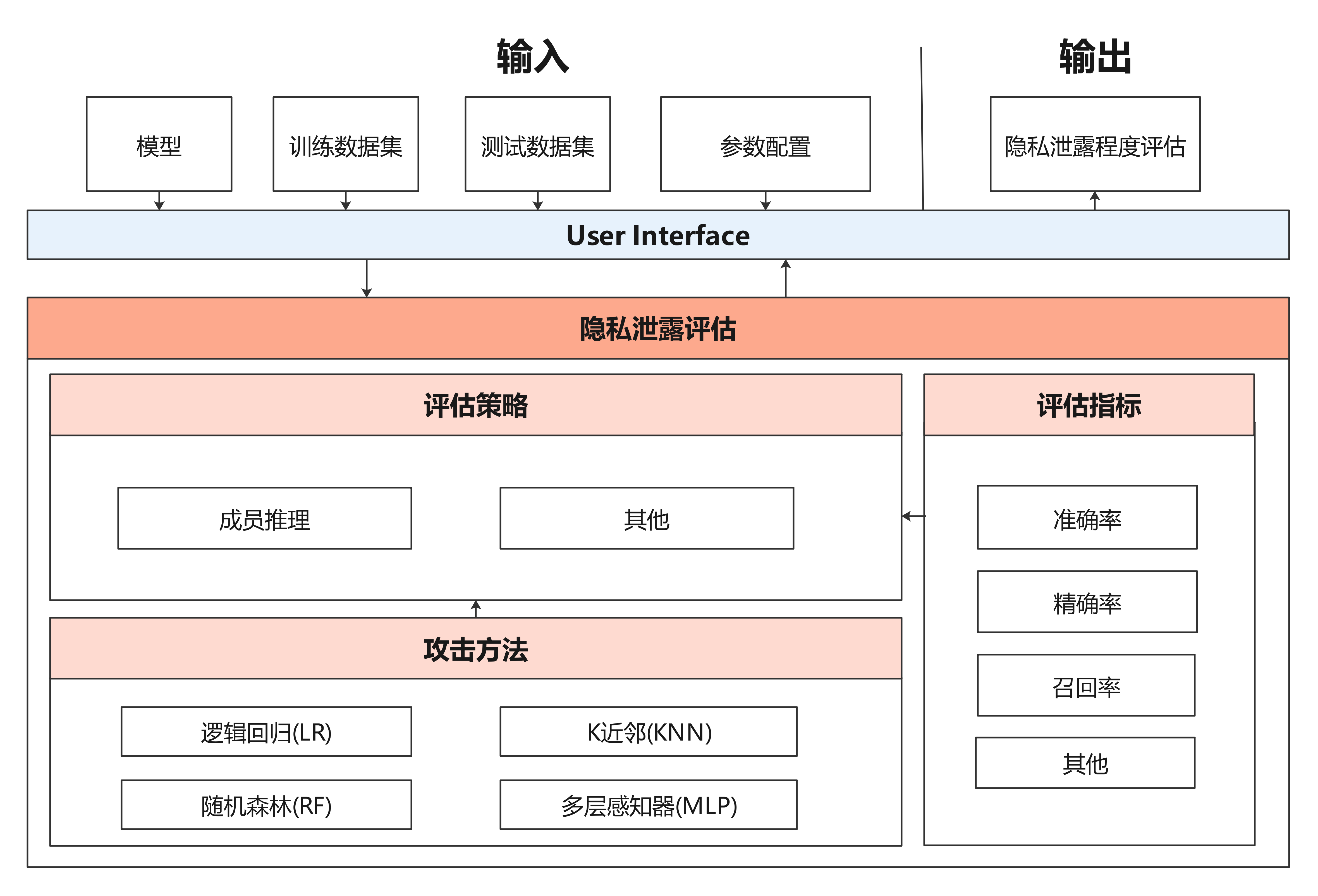

docs/privacy_leakage_cn.png


BIN

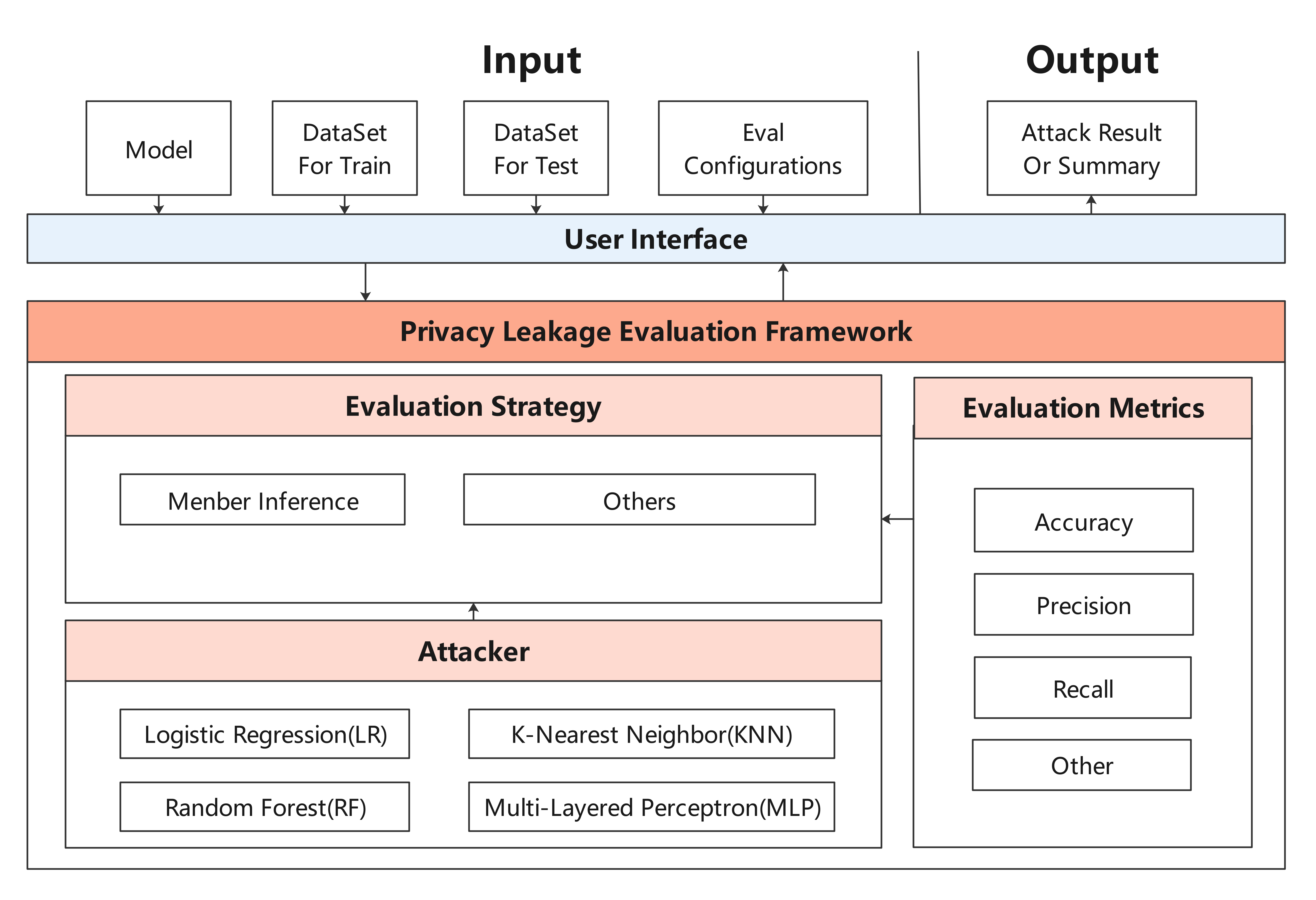

docs/privacy_leakage_en.png

