commit

96302d7f1b

100 changed files with 13385 additions and 0 deletions

+25 -0 .gitee/PULL_REQUEST_TEMPLATE.md
+28 -0 .gitignore
+201 -0 LICENSE
+2 -0 NOTICE
+74 -0 README.md
+11 -0 RELEASE.md
+3 -0 docs/README.md
BIN docs/mindarmour_architecture.png
+62 -0 example/data_processing.py
+46 -0 example/mnist_demo/README.md
+64 -0 example/mnist_demo/lenet5_net.py
+118 -0 example/mnist_demo/mnist_attack_cw.py
+120 -0 example/mnist_demo/mnist_attack_deepfool.py
+119 -0 example/mnist_demo/mnist_attack_fgsm.py
+138 -0 example/mnist_demo/mnist_attack_genetic.py
+150 -0 example/mnist_demo/mnist_attack_hsja.py
+124 -0 example/mnist_demo/mnist_attack_jsma.py
+132 -0 example/mnist_demo/mnist_attack_lbfgs.py
+168 -0 example/mnist_demo/mnist_attack_nes.py
+119 -0 example/mnist_demo/mnist_attack_pgd.py
+138 -0 example/mnist_demo/mnist_attack_pointwise.py
+131 -0 example/mnist_demo/mnist_attack_pso.py
+142 -0 example/mnist_demo/mnist_attack_salt_and_pepper.py
+144 -0 example/mnist_demo/mnist_defense_nad.py
+326 -0 example/mnist_demo/mnist_evaluation.py
+182 -0 example/mnist_demo/mnist_similarity_detector.py
+88 -0 example/mnist_demo/mnist_train.py
+13 -0 mindarmour/__init__.py
+39 -0 mindarmour/attacks/__init__.py
+97 -0 mindarmour/attacks/attack.py
+0 -0 mindarmour/attacks/black/__init__.py
+75 -0 mindarmour/attacks/black/black_model.py
+230 -0 mindarmour/attacks/black/genetic_attack.py
+510 -0 mindarmour/attacks/black/hop_skip_jump_attack.py
+432 -0 mindarmour/attacks/black/natural_evolutionary_strategy.py
+326 -0 mindarmour/attacks/black/pointwise_attack.py
+302 -0 mindarmour/attacks/black/pso_attack.py
+166 -0 mindarmour/attacks/black/salt_and_pepper_attack.py
+419 -0 mindarmour/attacks/carlini_wagner.py
+154 -0 mindarmour/attacks/deep_fool.py
+402 -0 mindarmour/attacks/gradient_method.py
+432 -0 mindarmour/attacks/iterative_gradient_method.py
+196 -0 mindarmour/attacks/jsma.py
+224 -0 mindarmour/attacks/lbfgs.py
+15 -0 mindarmour/defenses/__init__.py
+169 -0 mindarmour/defenses/adversarial_defense.py
+86 -0 mindarmour/defenses/defense.py
+56 -0 mindarmour/defenses/natural_adversarial_defense.py
+69 -0 mindarmour/defenses/projected_adversarial_defense.py
+18 -0 mindarmour/detectors/__init__.py
+0 -0 mindarmour/detectors/black/__init__.py
+284 -0 mindarmour/detectors/black/similarity_detector.py
+101 -0 mindarmour/detectors/detector.py
+126 -0 mindarmour/detectors/ensemble_detector.py
+228 -0 mindarmour/detectors/mag_net.py
+235 -0 mindarmour/detectors/region_based_detector.py
+171 -0 mindarmour/detectors/spatial_smoothing.py
+14 -0 mindarmour/evaluations/__init__.py
+275 -0 mindarmour/evaluations/attack_evaluation.py
+0 -0 mindarmour/evaluations/black/__init__.py
+204 -0 mindarmour/evaluations/black/defense_evaluation.py
+152 -0 mindarmour/evaluations/defense_evaluation.py
+141 -0 mindarmour/evaluations/visual_metrics.py
+7 -0 mindarmour/utils/__init__.py
+269 -0 mindarmour/utils/_check_param.py
+154 -0 mindarmour/utils/logger.py
+147 -0 mindarmour/utils/util.py
+38 -0 package.sh
+7 -0 requirements.txt
+102 -0 setup.py
+311 -0 tests/st/resnet50/resnet_cifar10.py
+76 -0 tests/st/resnet50/test_cifar10_attack_fgsm.py
+144 -0 tests/ut/python/attacks/black/test_genetic_attack.py
+166 -0 tests/ut/python/attacks/black/test_hsja.py
+217 -0 tests/ut/python/attacks/black/test_nes.py
+90 -0 tests/ut/python/attacks/black/test_pointwise_attack.py
+166 -0 tests/ut/python/attacks/black/test_pso_attack.py
+123 -0 tests/ut/python/attacks/black/test_salt_and_pepper_attack.py
+74 -0 tests/ut/python/attacks/test_batch_generate_attack.py
+90 -0 tests/ut/python/attacks/test_cw.py
+119 -0 tests/ut/python/attacks/test_deep_fool.py
+242 -0 tests/ut/python/attacks/test_gradient_method.py
+136 -0 tests/ut/python/attacks/test_iterative_gradient_method.py
+161 -0 tests/ut/python/attacks/test_jsma.py
+72 -0 tests/ut/python/attacks/test_lbfgs.py
+107 -0 tests/ut/python/defenses/mock_net.py
+66 -0 tests/ut/python/defenses/test_ad.py
+70 -0 tests/ut/python/defenses/test_ead.py
+65 -0 tests/ut/python/defenses/test_nad.py
+66 -0 tests/ut/python/defenses/test_pad.py
+101 -0 tests/ut/python/detectors/black/test_similarity_detector.py
+112 -0 tests/ut/python/detectors/test_ensemble_detector.py
+164 -0 tests/ut/python/detectors/test_mag_net.py
+115 -0 tests/ut/python/detectors/test_region_based_detector.py
+116 -0 tests/ut/python/detectors/test_spatial_smoothing.py
+73 -0 tests/ut/python/evaluations/black/test_black_defense_eval.py
+95 -0 tests/ut/python/evaluations/test_attack_eval.py
+51 -0 tests/ut/python/evaluations/test_defense_eval.py
+57 -0 tests/ut/python/evaluations/test_radar_metric.py
BIN tests/ut/python/test_data/test_images.npy

.gitee/PULL_REQUEST_TEMPLATE.md (+25 -0)

| @@ -0,0 +1,25 @@ | |||||

| <!-- Thanks for sending a pull request! Here are some tips for you: | |||||

| If this is your first time, please read our contributor guidelines: https://gitee.com/mindspore/mindspore/blob/master/CONTRIBUTING.md | |||||

| --> | |||||

| **What type of PR is this?** | |||||

| > Uncomment only one ` /kind <>` line, hit enter to put that in a new line, and remove leading whitespaces from that line: | |||||

| > | |||||

| > /kind bug | |||||

| > /kind task | |||||

| > /kind feature | |||||

| **What this PR does / why we need it**: | |||||

| **Which issue(s) this PR fixes**: | |||||

| <!-- | |||||

| *Automatically closes linked issue when PR is merged. | |||||

| Usage: `Fixes #<issue number>`, or `Fixes (paste link of issue)`. | |||||

| --> | |||||

| Fixes # | |||||

| **Special notes for your reviewer**: | |||||

.gitignore (+28 -0)

| @@ -0,0 +1,28 @@ | |||||

| *.dot | |||||

| *.ir | |||||

| *.dat | |||||

| *.pyc | |||||

| *.csv | |||||

| *.gz | |||||

| *.tar | |||||

| *.zip | |||||

| *.rar | |||||

| *.ipynb | |||||

| .idea/ | |||||

| build/ | |||||

| dist/ | |||||

| local_script/ | |||||

| example/dataset/ | |||||

| example/mnist_demo/MNIST_unzip/ | |||||

| example/mnist_demo/trained_ckpt_file/ | |||||

| example/mnist_demo/model/ | |||||

| example/cifar_demo/model/ | |||||

| example/dog_cat_demo/model/ | |||||

| mindarmour.egg-info/ | |||||

| *model/ | |||||

| *MNIST/ | |||||

| *out.data/ | |||||

| *defensed_model/ | |||||

| *pre_trained_model/ | |||||

| *__pycache__/ | |||||

| *kernel_meta | |||||

LICENSE (+201 -0)

| @@ -0,0 +1,201 @@ | |||||

| Apache License | |||||

| Version 2.0, January 2004 | |||||

| http://www.apache.org/licenses/ | |||||

| TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION | |||||

| 1. Definitions. | |||||

| "License" shall mean the terms and conditions for use, reproduction, | |||||

| and distribution as defined by Sections 1 through 9 of this document. | |||||

| "Licensor" shall mean the copyright owner or entity authorized by | |||||

| the copyright owner that is granting the License. | |||||

| "Legal Entity" shall mean the union of the acting entity and all | |||||

| other entities that control, are controlled by, or are under common | |||||

| control with that entity. For the purposes of this definition, | |||||

| "control" means (i) the power, direct or indirect, to cause the | |||||

| direction or management of such entity, whether by contract or | |||||

| otherwise, or (ii) ownership of fifty percent (50%) or more of the | |||||

| outstanding shares, or (iii) beneficial ownership of such entity. | |||||

| "You" (or "Your") shall mean an individual or Legal Entity | |||||

| exercising permissions granted by this License. | |||||

| "Source" form shall mean the preferred form for making modifications, | |||||

| including but not limited to software source code, documentation | |||||

| source, and configuration files. | |||||

| "Object" form shall mean any form resulting from mechanical | |||||

| transformation or translation of a Source form, including but | |||||

| not limited to compiled object code, generated documentation, | |||||

| and conversions to other media types. | |||||

| "Work" shall mean the work of authorship, whether in Source or | |||||

| Object form, made available under the License, as indicated by a | |||||

| copyright notice that is included in or attached to the work | |||||

| (an example is provided in the Appendix below). | |||||

| "Derivative Works" shall mean any work, whether in Source or Object | |||||

| form, that is based on (or derived from) the Work and for which the | |||||

| editorial revisions, annotations, elaborations, or other modifications | |||||

| represent, as a whole, an original work of authorship. For the purposes | |||||

| of this License, Derivative Works shall not include works that remain | |||||

| separable from, or merely link (or bind by name) to the interfaces of, | |||||

| the Work and Derivative Works thereof. | |||||

| "Contribution" shall mean any work of authorship, including | |||||

| the original version of the Work and any modifications or additions | |||||

| to that Work or Derivative Works thereof, that is intentionally | |||||

| submitted to Licensor for inclusion in the Work by the copyright owner | |||||

| or by an individual or Legal Entity authorized to submit on behalf of | |||||

| the copyright owner. For the purposes of this definition, "submitted" | |||||

| means any form of electronic, verbal, or written communication sent | |||||

| to the Licensor or its representatives, including but not limited to | |||||

| communication on electronic mailing lists, source code control systems, | |||||

| and issue tracking systems that are managed by, or on behalf of, the | |||||

| Licensor for the purpose of discussing and improving the Work, but | |||||

| excluding communication that is conspicuously marked or otherwise | |||||

| designated in writing by the copyright owner as "Not a Contribution." | |||||

| "Contributor" shall mean Licensor and any individual or Legal Entity | |||||

| on behalf of whom a Contribution has been received by Licensor and | |||||

| subsequently incorporated within the Work. | |||||

| 2. Grant of Copyright License. Subject to the terms and conditions of | |||||

| this License, each Contributor hereby grants to You a perpetual, | |||||

| worldwide, non-exclusive, no-charge, royalty-free, irrevocable | |||||

| copyright license to reproduce, prepare Derivative Works of, | |||||

| publicly display, publicly perform, sublicense, and distribute the | |||||

| Work and such Derivative Works in Source or Object form. | |||||

| 3. Grant of Patent License. Subject to the terms and conditions of | |||||

| this License, each Contributor hereby grants to You a perpetual, | |||||

| worldwide, non-exclusive, no-charge, royalty-free, irrevocable | |||||

| (except as stated in this section) patent license to make, have made, | |||||

| use, offer to sell, sell, import, and otherwise transfer the Work, | |||||

| where such license applies only to those patent claims licensable | |||||

| by such Contributor that are necessarily infringed by their | |||||

| Contribution(s) alone or by combination of their Contribution(s) | |||||

| with the Work to which such Contribution(s) was submitted. If You | |||||

| institute patent litigation against any entity (including a | |||||

| cross-claim or counterclaim in a lawsuit) alleging that the Work | |||||

| or a Contribution incorporated within the Work constitutes direct | |||||

| or contributory patent infringement, then any patent licenses | |||||

| granted to You under this License for that Work shall terminate | |||||

| as of the date such litigation is filed. | |||||

| 4. Redistribution. You may reproduce and distribute copies of the | |||||

| Work or Derivative Works thereof in any medium, with or without | |||||

| modifications, and in Source or Object form, provided that You | |||||

| meet the following conditions: | |||||

| (a) You must give any other recipients of the Work or | |||||

| Derivative Works a copy of this License; and | |||||

| (b) You must cause any modified files to carry prominent notices | |||||

| stating that You changed the files; and | |||||

| (c) You must retain, in the Source form of any Derivative Works | |||||

| that You distribute, all copyright, patent, trademark, and | |||||

| attribution notices from the Source form of the Work, | |||||

| excluding those notices that do not pertain to any part of | |||||

| the Derivative Works; and | |||||

| (d) If the Work includes a "NOTICE" text file as part of its | |||||

| distribution, then any Derivative Works that You distribute must | |||||

| include a readable copy of the attribution notices contained | |||||

| within such NOTICE file, excluding those notices that do not | |||||

| pertain to any part of the Derivative Works, in at least one | |||||

| of the following places: within a NOTICE text file distributed | |||||

| as part of the Derivative Works; within the Source form or | |||||

| documentation, if provided along with the Derivative Works; or, | |||||

| within a display generated by the Derivative Works, if and | |||||

| wherever such third-party notices normally appear. The contents | |||||

| of the NOTICE file are for informational purposes only and | |||||

| do not modify the License. You may add Your own attribution | |||||

| notices within Derivative Works that You distribute, alongside | |||||

| or as an addendum to the NOTICE text from the Work, provided | |||||

| that such additional attribution notices cannot be construed | |||||

| as modifying the License. | |||||

| You may add Your own copyright statement to Your modifications and | |||||

| may provide additional or different license terms and conditions | |||||

| for use, reproduction, or distribution of Your modifications, or | |||||

| for any such Derivative Works as a whole, provided Your use, | |||||

| reproduction, and distribution of the Work otherwise complies with | |||||

| the conditions stated in this License. | |||||

| 5. Submission of Contributions. Unless You explicitly state otherwise, | |||||

| any Contribution intentionally submitted for inclusion in the Work | |||||

| by You to the Licensor shall be under the terms and conditions of | |||||

| this License, without any additional terms or conditions. | |||||

| Notwithstanding the above, nothing herein shall supersede or modify | |||||

| the terms of any separate license agreement you may have executed | |||||

| with Licensor regarding such Contributions. | |||||

| 6. Trademarks. This License does not grant permission to use the trade | |||||

| names, trademarks, service marks, or product names of the Licensor, | |||||

| except as required for reasonable and customary use in describing the | |||||

| origin of the Work and reproducing the content of the NOTICE file. | |||||

| 7. Disclaimer of Warranty. Unless required by applicable law or | |||||

| agreed to in writing, Licensor provides the Work (and each | |||||

| Contributor provides its Contributions) on an "AS IS" BASIS, | |||||

| WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or | |||||

| implied, including, without limitation, any warranties or conditions | |||||

| of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A | |||||

| PARTICULAR PURPOSE. You are solely responsible for determining the | |||||

| appropriateness of using or redistributing the Work and assume any | |||||

| risks associated with Your exercise of permissions under this License. | |||||

| 8. Limitation of Liability. In no event and under no legal theory, | |||||

| whether in tort (including negligence), contract, or otherwise, | |||||

| unless required by applicable law (such as deliberate and grossly | |||||

| negligent acts) or agreed to in writing, shall any Contributor be | |||||

| liable to You for damages, including any direct, indirect, special, | |||||

| incidental, or consequential damages of any character arising as a | |||||

| result of this License or out of the use or inability to use the | |||||

| Work (including but not limited to damages for loss of goodwill, | |||||

| work stoppage, computer failure or malfunction, or any and all | |||||

| other commercial damages or losses), even if such Contributor | |||||

| has been advised of the possibility of such damages. | |||||

| 9. Accepting Warranty or Additional Liability. While redistributing | |||||

| the Work or Derivative Works thereof, You may choose to offer, | |||||

| and charge a fee for, acceptance of support, warranty, indemnity, | |||||

| or other liability obligations and/or rights consistent with this | |||||

| License. However, in accepting such obligations, You may act only | |||||

| on Your own behalf and on Your sole responsibility, not on behalf | |||||

| of any other Contributor, and only if You agree to indemnify, | |||||

| defend, and hold each Contributor harmless for any liability | |||||

| incurred by, or claims asserted against, such Contributor by reason | |||||

| of your accepting any such warranty or additional liability. | |||||

| END OF TERMS AND CONDITIONS | |||||

| APPENDIX: How to apply the Apache License to your work. | |||||

| To apply the Apache License to your work, attach the following | |||||

| boilerplate notice, with the fields enclosed by brackets "[]" | |||||

| replaced with your own identifying information. (Don't include | |||||

| the brackets!) The text should be enclosed in the appropriate | |||||

| comment syntax for the file format. We also recommend that a | |||||

| file or class name and description of purpose be included on the | |||||

| same "printed page" as the copyright notice for easier | |||||

| identification within third-party archives. | |||||

| Copyright [yyyy] [name of copyright owner] | |||||

| Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| you may not use this file except in compliance with the License. | |||||

| You may obtain a copy of the License at | |||||

| http://www.apache.org/licenses/LICENSE-2.0 | |||||

| Unless required by applicable law or agreed to in writing, software | |||||

| distributed under the License is distributed on an "AS IS" BASIS, | |||||

| WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| See the License for the specific language governing permissions and | |||||

| limitations under the License. | |||||

NOTICE (+2 -0)

| @@ -0,0 +1,2 @@ | |||||

| MindSpore MindArmour | |||||

| Copyright 2019-2020 Huawei Technologies Co., Ltd | |||||

README.md (+74 -0)

| @@ -0,0 +1,74 @@ | |||||

| # MindArmour | |||||

| - [What is MindArmour](#what-is-mindarmour) | |||||

| - [Setting up](#setting-up-mindarmour) | |||||

| - [Docs](#docs) | |||||

| - [Community](#community) | |||||

| - [Contributing](#contributing) | |||||

| - [Release Notes](#release-notes) | |||||

| - [License](#license) | |||||

| ## What is MindArmour | |||||

| A tool box for MindSpore users to enhance model security and trustworthiness. | |||||

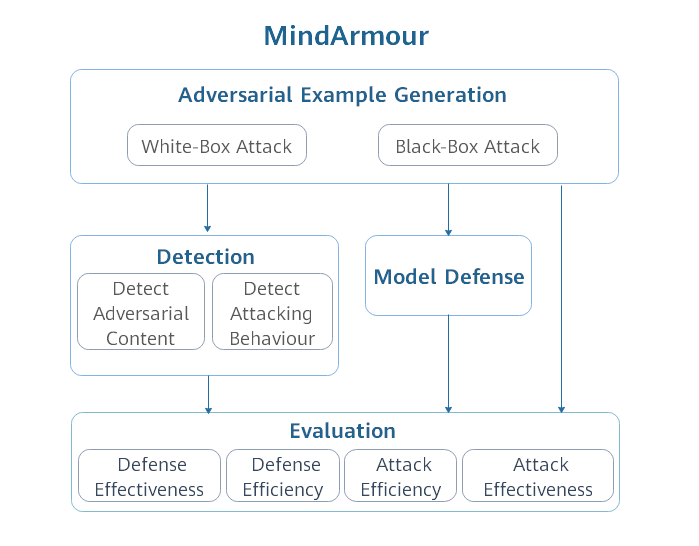

| MindArmour is designed for adversarial examples, including four submodule: adversarial examples generation, adversarial example detection, model defense and evaluation. The architecture is shown as follow: | |||||

|  | |||||

| ## Setting up MindArmour | |||||

| ### Dependencies | |||||

| This library uses MindSpore to accelerate graph computations performed by many machine learning models. Therefore, installing MindSpore is a pre-requisite. All other dependencies are included in `setup.py`. | |||||

| ### Installation | |||||

| #### Installation for development | |||||

| 1. Download source code from Gitee. | |||||

| ```bash | |||||

| git clone https://gitee.com/mindspore/mindarmour.git | |||||

| ``` | |||||

| 2. Compile and install in the MindArmour directory. | |||||

| ```bash | |||||

| $ cd mindarmour | |||||

| $ python setup.py install | |||||

| ``` | |||||

| #### `Pip` installation | |||||

| 1. Download the whl package from the [MindSpore website](https://www.mindspore.cn/versions/en), then run the following command: | |||||

| ``` | |||||

| pip install mindarmour-{version}-cp37-cp37m-linux_{arch}.whl | |||||

| ``` | |||||

| 2. The installation succeeded if running the following command produces no error message such as `No module named 'mindarmour'`: | |||||

| ```bash | |||||

| python -c 'import mindarmour' | |||||

| ``` | |||||

| ## Docs | |||||

| For guidance on installation, tutorials, and the API, see our [User Documentation](https://gitee.com/mindspore/docs). | |||||

| ## Community | |||||

| - [MindSpore Slack](https://join.slack.com/t/mindspore/shared_invite/enQtOTcwMTIxMDI3NjM0LTNkMWM2MzI5NjIyZWU5ZWQ5M2EwMTQ5MWNiYzMxOGM4OWFhZjI4M2E5OGI2YTg3ODU1ODE2Njg1MThiNWI3YmQ) - Ask questions and find answers. | |||||

| ## Contributing | |||||

| Contributions are welcome. See our [Contributor Wiki](https://gitee.com/mindspore/mindspore/blob/master/CONTRIBUTING.md) for more details. | |||||

| ## Release Notes | |||||

| For the release notes, see [RELEASE](RELEASE.md). | |||||

| ## License | |||||

| [Apache License 2.0](LICENSE) | |||||
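The import check from the pip installation step of the README can also be scripted. The following is a minimal sketch using only the Python standard library; the helper name `module_available` is hypothetical and not part of MindArmour. It only locates the package without importing it, so it would not reveal a missing MindSpore dependency the way `python -c 'import mindarmour'` would.

```python
import importlib.util


def module_available(name: str) -> bool:
    """Return True if a top-level module called `name` can be located."""
    # find_spec returns None when a top-level module cannot be found.
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    # "mindarmour" is the package name used in the README's import check.
    print("mindarmour installed:", module_available("mindarmour"))
```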

RELEASE.md (+11 -0)

| @@ -0,0 +1,11 @@ | |||||

| # Release 0.1.0-alpha | |||||

| Initial release of MindArmour. | |||||

| ## Major Features | |||||

| - Support adversarial attack and defense on the platform of MindSpore. | |||||

| - Include 13 white-box and 7 black-box attack methods. | |||||

| - Provide 5 detection algorithms to detect attacks in multiple ways. | |||||

| - Provide adversarial training to enhance model security. | |||||

| - Provide 6 evaluation metrics for attack methods and 9 evaluation metrics for defense methods. | |||||

docs/README.md (+3 -0)

| @@ -0,0 +1,3 @@ | |||||

| # MindArmour Documentation | |||||

| The MindArmour documentation is in the [MindSpore Docs](https://gitee.com/mindspore/docs) repository. | |||||

docs/mindarmour_architecture.png (binary)

example/data_processing.py (+62 -0)

| @@ -0,0 +1,62 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import mindspore.dataset as ds | |||||

| import mindspore.dataset.transforms.vision.c_transforms as CV | |||||

| import mindspore.dataset.transforms.c_transforms as C | |||||

| from mindspore.dataset.transforms.vision import Inter | |||||

| import mindspore.common.dtype as mstype | |||||

| def generate_mnist_dataset(data_path, batch_size=32, repeat_size=1, | |||||

|                            num_parallel_workers=1, sparse=True): | |||||

|     """ | |||||

|     create dataset for training or testing | |||||

|     """ | |||||

|     # define dataset | |||||

|     ds1 = ds.MnistDataset(data_path) | |||||

|     # define operation parameters | |||||

|     resize_height, resize_width = 32, 32 | |||||

|     rescale = 1.0 / 255.0 | |||||

|     shift = 0.0 | |||||

|     # define map operations | |||||

|     resize_op = CV.Resize((resize_height, resize_width), | |||||

|                           interpolation=Inter.LINEAR) | |||||

|     rescale_op = CV.Rescale(rescale, shift) | |||||

|     hwc2chw_op = CV.HWC2CHW() | |||||

|     type_cast_op = C.TypeCast(mstype.int32) | |||||

|     one_hot_enco = C.OneHot(10) | |||||

|     # apply map operations on images | |||||

|     if not sparse: | |||||

|         ds1 = ds1.map(input_columns="label", operations=one_hot_enco, | |||||

|                       num_parallel_workers=num_parallel_workers) | |||||

|         type_cast_op = C.TypeCast(mstype.float32) | |||||

|     ds1 = ds1.map(input_columns="label", operations=type_cast_op, | |||||

|                   num_parallel_workers=num_parallel_workers) | |||||

|     ds1 = ds1.map(input_columns="image", operations=resize_op, | |||||

|                   num_parallel_workers=num_parallel_workers) | |||||

|     ds1 = ds1.map(input_columns="image", operations=rescale_op, | |||||

|                   num_parallel_workers=num_parallel_workers) | |||||

|     ds1 = ds1.map(input_columns="image", operations=hwc2chw_op, | |||||

|                   num_parallel_workers=num_parallel_workers) | |||||

|     # apply DatasetOps | |||||

|     buffer_size = 10000 | |||||

|     ds1 = ds1.shuffle(buffer_size=buffer_size) | |||||

|     ds1 = ds1.batch(batch_size, drop_remainder=True) | |||||

|     ds1 = ds1.repeat(repeat_size) | |||||

|     return ds1 | |||||
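The arithmetic behind the pipeline in `data_processing.py` can be illustrated without MindSpore. The sketch below is plain Python and assumes that the rescale step computes `value * rescale + shift`, that one-hot encoding covers 10 classes, and that `drop_remainder=True` discards the incomplete last batch. The helper names are hypothetical and do not exist in the commit.

```python
def rescale_pixel(value, rescale=1.0 / 255.0, shift=0.0):
    """Mirror the arithmetic of the rescale step: value * rescale + shift."""
    return value * rescale + shift


def one_hot(label, num_classes=10):
    """Encode an integer class label as a one-hot list of floats."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def batches_per_epoch(num_samples, batch_size=32):
    """Number of full batches when the incomplete last batch is dropped."""
    return num_samples // batch_size


if __name__ == "__main__":
    print(rescale_pixel(0), rescale_pixel(128))
    print(one_hot(3))
    print(batches_per_epoch(60000))  # 1875 full batches of 32
```

With the 60,000 training examples mentioned in the MNIST demo README and the default batch size of 32, this gives 1875 batches per epoch, which appears consistent with the `1875` in the checkpoint name used by the attack scripts.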

example/mnist_demo/README.md (+46 -0)

| @@ -0,0 +1,46 @@ | |||||

| # mnist demo | |||||

| ## Introduction | |||||

| The MNIST database of handwritten digits has a training set of 60,000 examples and a test set of 10,000 examples. It is a subset of a larger set available from NIST. The digits have been size-normalized and centered in a fixed-size image. | |||||

| ## run demo | |||||

| ### 1. download dataset | |||||

| ```sh | |||||

| $ cd example/mnist_demo | |||||

| $ mkdir MNIST_unzip | |||||

| $ cd MNIST_unzip | |||||

| $ mkdir train | |||||

| $ mkdir test | |||||

| $ cd train | |||||

| $ wget "http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz" | |||||

| $ wget "http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz" | |||||

| $ gzip train-images-idx3-ubyte.gz -d | |||||

| $ gzip train-labels-idx1-ubyte.gz -d | |||||

| $ cd ../test | |||||

| $ wget "http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz" | |||||

| $ wget "http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz" | |||||

| $ gzip t10k-images-idx3-ubyte.gz -d | |||||

| $ gzip t10k-labels-idx1-ubyte.gz -d | |||||

| $ cd ../../ | |||||

| ``` | |||||

| ### 2. train model | |||||

| ```sh | |||||

| $ python mnist_train.py | |||||

| ``` | |||||

| ### 3. run attack test | |||||

| ```sh | |||||

| $ mkdir out.data | |||||

| $ python mnist_attack_jsma.py | |||||

| ``` | |||||

| ### 4. run defense/detector test | |||||

| ```sh | |||||

| $ python mnist_defense_nad.py | |||||

| $ python mnist_similarity_detector.py | |||||

| ``` | |||||
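Before training, it can help to confirm that the download step produced the expected layout. The sketch below is an illustration only: the function name `missing_mnist_files` is hypothetical, and the file names are assumed to be the four archive names from the `wget` commands with the `.gz` suffix removed.

```python
from pathlib import Path

# File names after decompressing the four archives fetched in the README.
EXPECTED_FILES = {
    "train": ["train-images-idx3-ubyte", "train-labels-idx1-ubyte"],
    "test": ["t10k-images-idx3-ubyte", "t10k-labels-idx1-ubyte"],
}


def missing_mnist_files(root):
    """Return the relative paths of expected files that do not exist under root."""
    root = Path(root)
    missing = []
    for subdir, names in EXPECTED_FILES.items():
        for name in names:
            if not (root / subdir / name).is_file():
                missing.append(f"{subdir}/{name}")
    return missing


if __name__ == "__main__":
    # Run from example/mnist_demo, where the README creates MNIST_unzip.
    print(missing_mnist_files("MNIST_unzip") or "all MNIST files present")
```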

example/mnist_demo/lenet5_net.py (+64 -0)

| @@ -0,0 +1,64 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import mindspore.nn as nn | |||||

| import mindspore.ops.operations as P | |||||

| from mindspore.common.initializer import TruncatedNormal | |||||

| def conv(in_channels, out_channels, kernel_size, stride=1, padding=0): | |||||

|     weight = weight_variable() | |||||

|     return nn.Conv2d(in_channels, out_channels, | |||||

|                      kernel_size=kernel_size, stride=stride, padding=padding, | |||||

|                      weight_init=weight, has_bias=False, pad_mode="valid") | |||||

| def fc_with_initialize(input_channels, out_channels): | |||||

|     weight = weight_variable() | |||||

|     bias = weight_variable() | |||||

|     return nn.Dense(input_channels, out_channels, weight, bias) | |||||

| def weight_variable(): | |||||

|     return TruncatedNormal(0.2) | |||||

| class LeNet5(nn.Cell): | |||||

|     """ | |||||

|     Lenet network | |||||

|     """ | |||||

|     def __init__(self): | |||||

|         super(LeNet5, self).__init__() | |||||

|         self.conv1 = conv(1, 6, 5) | |||||

|         self.conv2 = conv(6, 16, 5) | |||||

|         self.fc1 = fc_with_initialize(16*5*5, 120) | |||||

|         self.fc2 = fc_with_initialize(120, 84) | |||||

|         self.fc3 = fc_with_initialize(84, 10) | |||||

|         self.relu = nn.ReLU() | |||||

|         self.max_pool2d = nn.MaxPool2d(kernel_size=2, stride=2) | |||||

|         self.reshape = P.Reshape() | |||||

|     def construct(self, x): | |||||

|         x = self.conv1(x) | |||||

|         x = self.relu(x) | |||||

|         x = self.max_pool2d(x) | |||||

|         x = self.conv2(x) | |||||

|         x = self.relu(x) | |||||

|         x = self.max_pool2d(x) | |||||

|         x = self.reshape(x, (-1, 16*5*5)) | |||||

|         x = self.fc1(x) | |||||

|         x = self.relu(x) | |||||

|         x = self.fc2(x) | |||||

|         x = self.relu(x) | |||||

|         x = self.fc3(x) | |||||

|         return x | |||||
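The `16*5*5` input size of `fc1` follows from the layer shapes. The sketch below traces the spatial size in plain Python, assuming a 32x32 input (the resize target in `data_processing.py`), unpadded 5x5 convolutions with stride 1, and 2x2 pooling with stride 2 and no padding. The helper names are hypothetical.

```python
def conv_valid_out(size, kernel, stride=1):
    """Output size of a convolution with no padding ("valid" mode)."""
    return (size - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output size of a pooling window with no padding."""
    return (size - kernel) // stride + 1


def lenet5_flat_features(input_size=32):
    """Trace the spatial size through conv1, pool, conv2, pool."""
    size = conv_valid_out(input_size, 5)   # conv1: 32 -> 28
    size = pool_out(size)                  # pool:  28 -> 14
    size = conv_valid_out(size, 5)         # conv2: 14 -> 10
    size = pool_out(size)                  # pool:  10 -> 5
    return 16 * size * size                # 16 channels after conv2


if __name__ == "__main__":
    print(lenet5_flat_features())  # 400, i.e. 16*5*5
```

Under the same assumptions, a 28x28 input would yield 16*4*4 features, which is why the images are resized to 32x32 before being fed to this network.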

example/mnist_demo/mnist_attack_cw.py (+118 -0)

| @@ -0,0 +1,118 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import sys | |||||

| import time | |||||

| import numpy as np | |||||

| import pytest | |||||

| from scipy.special import softmax | |||||

| from mindspore import Model | |||||

| from mindspore import Tensor | |||||

| from mindspore import context | |||||

| from mindspore.train.serialization import load_checkpoint, load_param_into_net | |||||

| from mindarmour.attacks.carlini_wagner import CarliniWagnerL2Attack | |||||

| from mindarmour.utils.logger import LogUtil | |||||

| from mindarmour.evaluations.attack_evaluation import AttackEvaluate | |||||

| from lenet5_net import LeNet5 | |||||

| context.set_context(mode=context.GRAPH_MODE, device_target="Ascend") | |||||

| sys.path.append("..") | |||||

| from data_processing import generate_mnist_dataset | |||||

| LOGGER = LogUtil.get_instance() | |||||

| TAG = 'CW_Test' | |||||

| @pytest.mark.level1 | |||||

| @pytest.mark.platform_arm_ascend_training | |||||

| @pytest.mark.platform_x86_ascend_training | |||||

| @pytest.mark.env_card | |||||

| @pytest.mark.component_mindarmour | |||||

| def test_carlini_wagner_attack(): | |||||

| """ | |||||

| CW-Attack test | |||||

| """ | |||||

| # upload trained network | |||||

| ckpt_name = './trained_ckpt_file/checkpoint_lenet-10_1875.ckpt' | |||||

| net = LeNet5() | |||||

| load_dict = load_checkpoint(ckpt_name) | |||||

| load_param_into_net(net, load_dict) | |||||

| # get test data | |||||

| data_list = "./MNIST_unzip/test" | |||||

| batch_size = 32 | |||||

| ds = generate_mnist_dataset(data_list, batch_size=batch_size) | |||||

| # prediction accuracy before attack | |||||

| model = Model(net) | |||||

| batch_num = 3 # the number of batches of attacking samples | |||||

| test_images = [] | |||||

| test_labels = [] | |||||

| predict_labels = [] | |||||

| i = 0 | |||||

| for data in ds.create_tuple_iterator(): | |||||

| i += 1 | |||||

| images = data[0].astype(np.float32) | |||||

| labels = data[1] | |||||

| test_images.append(images) | |||||

| test_labels.append(labels) | |||||

| pred_labels = np.argmax(model.predict(Tensor(images)).asnumpy(), | |||||

| axis=1) | |||||

| predict_labels.append(pred_labels) | |||||

| if i >= batch_num: | |||||

| break | |||||

| predict_labels = np.concatenate(predict_labels) | |||||

| true_labels = np.concatenate(test_labels) | |||||

| accuracy = np.mean(np.equal(predict_labels, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy before attacking is : %s", accuracy) | |||||

| # attacking | |||||

| num_classes = 10 | |||||

| attack = CarliniWagnerL2Attack(net, num_classes, targeted=False) | |||||

| start_time = time.perf_counter() | |||||

| adv_data = attack.batch_generate(np.concatenate(test_images), | |||||

| np.concatenate(test_labels), batch_size=32) | |||||

| stop_time = time.perf_counter() | |||||

| pred_logits_adv = model.predict(Tensor(adv_data)).asnumpy() | |||||

| # rescale predict confidences into (0, 1). | |||||

| pred_logits_adv = softmax(pred_logits_adv, axis=1) | |||||

| pred_labels_adv = np.argmax(pred_logits_adv, axis=1) | |||||

| accuracy_adv = np.mean(np.equal(pred_labels_adv, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy after attacking is : %s", | |||||

| accuracy_adv) | |||||

| test_labels = np.eye(10)[np.concatenate(test_labels)] | |||||

| attack_evaluate = AttackEvaluate(np.concatenate(test_images).transpose(0, 2, 3, 1), | |||||

| test_labels, adv_data.transpose(0, 2, 3, 1), | |||||

| pred_logits_adv) | |||||

| LOGGER.info(TAG, 'mis-classification rate of adversaries is : %s', | |||||

| attack_evaluate.mis_classification_rate()) | |||||

| LOGGER.info(TAG, 'The average confidence of adversarial class is : %s', | |||||

| attack_evaluate.avg_conf_adv_class()) | |||||

| LOGGER.info(TAG, 'The average confidence of true class is : %s', | |||||

| attack_evaluate.avg_conf_true_class()) | |||||

| LOGGER.info(TAG, 'The average distance (l0, l2, linf) between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_lp_distance()) | |||||

| LOGGER.info(TAG, 'The average structural similarity between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_ssim()) | |||||

| LOGGER.info(TAG, 'The average costing time is %s', | |||||

| (stop_time - start_time)/(batch_num*batch_size)) | |||||

| if __name__ == '__main__': | |||||

| test_carlini_wagner_attack() | |||||
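
The demo above measures the average attack time per sample. `time.clock()` was removed in Python 3.8, so a minimal sketch of the same measurement with `time.perf_counter()` may be useful. `fake_attack` and `time_per_sample` are hypothetical names introduced only for this illustration; `fake_attack` stands in for `attack.batch_generate` and is not part of MindArmour.

```python
import time

import numpy as np


def fake_attack(images, labels, batch_size=32):
    """Hypothetical stand-in for attack.batch_generate: returns a copy of the input."""
    return images.copy()


def time_per_sample(attack_fn, images, labels, batch_size=32):
    """Run attack_fn once and return (adversarial data, seconds per sample)."""
    start = time.perf_counter()
    adv = attack_fn(images, labels, batch_size=batch_size)
    elapsed = time.perf_counter() - start
    return adv, elapsed / len(images)


# 3 batches of 32 samples, shaped like the LeNet5 input used in the demo.
images = np.zeros((96, 1, 32, 32), dtype=np.float32)
labels = np.zeros(96, dtype=np.int32)
adv, seconds_per_sample = time_per_sample(fake_attack, images, labels)
```

Dividing by `len(images)` gives the same value as `batch_num*batch_size` only when every batch is full, which is the case in the demo.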

+ 120

- 0

example/mnist_demo/mnist_attack_deepfool.py

| @@ -0,0 +1,120 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import sys | |||||

| import time | |||||

| import numpy as np | |||||

| import pytest | |||||

| from scipy.special import softmax | |||||

| from mindspore import Model | |||||

| from mindspore import Tensor | |||||

| from mindspore import context | |||||

| from mindspore.train.serialization import load_checkpoint, load_param_into_net | |||||

| from mindarmour.attacks.deep_fool import DeepFool | |||||

| from mindarmour.utils.logger import LogUtil | |||||

| from mindarmour.evaluations.attack_evaluation import AttackEvaluate | |||||

| from lenet5_net import LeNet5 | |||||

| context.set_context(mode=context.GRAPH_MODE, device_target="Ascend") | |||||

| sys.path.append("..") | |||||

| from data_processing import generate_mnist_dataset | |||||

| LOGGER = LogUtil.get_instance() | |||||

| TAG = 'DeepFool_Test' | |||||

| @pytest.mark.level1 | |||||

| @pytest.mark.platform_arm_ascend_training | |||||

| @pytest.mark.platform_x86_ascend_training | |||||

| @pytest.mark.env_card | |||||

| @pytest.mark.component_mindarmour | |||||

| def test_deepfool_attack(): | |||||

| """ | |||||

| DeepFool-Attack test | |||||

| """ | |||||

| # load trained network | |||||

| ckpt_name = './trained_ckpt_file/checkpoint_lenet-10_1875.ckpt' | |||||

| net = LeNet5() | |||||

| load_dict = load_checkpoint(ckpt_name) | |||||

| load_param_into_net(net, load_dict) | |||||

| # get test data | |||||

| data_list = "./MNIST_unzip/test" | |||||

| batch_size = 32 | |||||

| ds = generate_mnist_dataset(data_list, batch_size=batch_size) | |||||

| # prediction accuracy before attack | |||||

| model = Model(net) | |||||

| batch_num = 3 # the number of batches of attacking samples | |||||

| test_images = [] | |||||

| test_labels = [] | |||||

| predict_labels = [] | |||||

| i = 0 | |||||

| for data in ds.create_tuple_iterator(): | |||||

| i += 1 | |||||

| images = data[0].astype(np.float32) | |||||

| labels = data[1] | |||||

| test_images.append(images) | |||||

| test_labels.append(labels) | |||||

| pred_labels = np.argmax(model.predict(Tensor(images)).asnumpy(), | |||||

| axis=1) | |||||

| predict_labels.append(pred_labels) | |||||

| if i >= batch_num: | |||||

| break | |||||

| predict_labels = np.concatenate(predict_labels) | |||||

| true_labels = np.concatenate(test_labels) | |||||

| accuracy = np.mean(np.equal(predict_labels, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy before attacking is : %s", accuracy) | |||||

| # attacking | |||||

| classes = 10 | |||||

| attack = DeepFool(net, classes, norm_level=2, | |||||

| bounds=(0.0, 1.0)) | |||||

| start_time = time.perf_counter() | |||||

| adv_data = attack.batch_generate(np.concatenate(test_images), | |||||

| np.concatenate(test_labels), batch_size=32) | |||||

| stop_time = time.perf_counter() | |||||

| pred_logits_adv = model.predict(Tensor(adv_data)).asnumpy() | |||||

| # rescale predict confidences into (0, 1). | |||||

| pred_logits_adv = softmax(pred_logits_adv, axis=1) | |||||

| pred_labels_adv = np.argmax(pred_logits_adv, axis=1) | |||||

| accuracy_adv = np.mean(np.equal(pred_labels_adv, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy after attacking is : %s", | |||||

| accuracy_adv) | |||||

| test_labels = np.eye(10)[np.concatenate(test_labels)] | |||||

| attack_evaluate = AttackEvaluate(np.concatenate(test_images).transpose(0, 2, 3, 1), | |||||

| test_labels, adv_data.transpose(0, 2, 3, 1), | |||||

| pred_logits_adv) | |||||

| LOGGER.info(TAG, 'mis-classification rate of adversaries is : %s', | |||||

| attack_evaluate.mis_classification_rate()) | |||||

| LOGGER.info(TAG, 'The average confidence of adversarial class is : %s', | |||||

| attack_evaluate.avg_conf_adv_class()) | |||||

| LOGGER.info(TAG, 'The average confidence of true class is : %s', | |||||

| attack_evaluate.avg_conf_true_class()) | |||||

| LOGGER.info(TAG, 'The average distance (l0, l2, linf) between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_lp_distance()) | |||||

| LOGGER.info(TAG, 'The average structural similarity between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_ssim()) | |||||

| LOGGER.info(TAG, 'The average costing time is %s', | |||||

| (stop_time - start_time)/(batch_num*batch_size)) | |||||

| if __name__ == '__main__': | |||||

| test_deepfool_attack() | |||||
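
The evaluation steps shared by these demos (rescaling logits with softmax, computing accuracy from the argmax, and building one-hot labels with `np.eye`) can be sketched in plain NumPy. The logits and labels below are made up for illustration, and the local `softmax` is a small stand-in for `scipy.special.softmax` so that the sketch has no SciPy dependency.

```python
import numpy as np


def softmax(logits, axis=1):
    """Numerically stable softmax along the given axis."""
    shifted = logits - np.max(logits, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)


# Made-up logits for 4 samples and 3 classes.
logits = np.array([[2.0, 1.0, 0.0],
                   [0.0, 3.0, 1.0],
                   [1.0, 0.0, 4.0],
                   [5.0, 0.0, 0.0]])
true_labels = np.array([0, 1, 2, 1])

probs = softmax(logits, axis=1)          # confidences in (0, 1), rows sum to 1
pred_labels = np.argmax(probs, axis=1)   # predicted class per sample
accuracy = np.mean(np.equal(pred_labels, true_labels))
one_hot = np.eye(3)[true_labels]         # sparse labels -> one-hot rows
```

Softmax does not change the argmax, so the rescaling only matters for the confidence metrics, not for the accuracy.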

+ 119

- 0

example/mnist_demo/mnist_attack_fgsm.py

| @@ -0,0 +1,119 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import sys | |||||

| import time | |||||

| import numpy as np | |||||

| import pytest | |||||

| from scipy.special import softmax | |||||

| from mindspore import Model | |||||

| from mindspore import Tensor | |||||

| from mindspore import context | |||||

| from mindspore.train.serialization import load_checkpoint, load_param_into_net | |||||

| from mindarmour.attacks.gradient_method import FastGradientSignMethod | |||||

| from mindarmour.utils.logger import LogUtil | |||||

| from mindarmour.evaluations.attack_evaluation import AttackEvaluate | |||||

| from lenet5_net import LeNet5 | |||||

| context.set_context(mode=context.GRAPH_MODE, device_target="Ascend") | |||||

| sys.path.append("..") | |||||

| from data_processing import generate_mnist_dataset | |||||

| LOGGER = LogUtil.get_instance() | |||||

| TAG = 'FGSM_Test' | |||||

| @pytest.mark.level1 | |||||

| @pytest.mark.platform_arm_ascend_training | |||||

| @pytest.mark.platform_x86_ascend_training | |||||

| @pytest.mark.env_card | |||||

| @pytest.mark.component_mindarmour | |||||

| def test_fast_gradient_sign_method(): | |||||

| """ | |||||

| FGSM-Attack test | |||||

| """ | |||||

| # load trained network | |||||

| ckpt_name = './trained_ckpt_file/checkpoint_lenet-10_1875.ckpt' | |||||

| net = LeNet5() | |||||

| load_dict = load_checkpoint(ckpt_name) | |||||

| load_param_into_net(net, load_dict) | |||||

| # get test data | |||||

| data_list = "./MNIST_unzip/test" | |||||

| batch_size = 32 | |||||

| ds = generate_mnist_dataset(data_list, batch_size, sparse=False) | |||||

| # prediction accuracy before attack | |||||

| model = Model(net) | |||||

| batch_num = 3 # the number of batches of attacking samples | |||||

| test_images = [] | |||||

| test_labels = [] | |||||

| predict_labels = [] | |||||

| i = 0 | |||||

| for data in ds.create_tuple_iterator(): | |||||

| i += 1 | |||||

| images = data[0].astype(np.float32) | |||||

| labels = data[1] | |||||

| test_images.append(images) | |||||

| test_labels.append(labels) | |||||

| pred_labels = np.argmax(model.predict(Tensor(images)).asnumpy(), | |||||

| axis=1) | |||||

| predict_labels.append(pred_labels) | |||||

| if i >= batch_num: | |||||

| break | |||||

| predict_labels = np.concatenate(predict_labels) | |||||

| true_labels = np.argmax(np.concatenate(test_labels), axis=1) | |||||

| accuracy = np.mean(np.equal(predict_labels, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy before attacking is : %s", accuracy) | |||||

| # attacking | |||||

| attack = FastGradientSignMethod(net, eps=0.3) | |||||

| start_time = time.perf_counter() | |||||

| adv_data = attack.batch_generate(np.concatenate(test_images), | |||||

| np.concatenate(test_labels), batch_size=32) | |||||

| stop_time = time.perf_counter() | |||||

| np.save('./adv_data', adv_data) | |||||

| pred_logits_adv = model.predict(Tensor(adv_data)).asnumpy() | |||||

| # rescale predict confidences into (0, 1). | |||||

| pred_logits_adv = softmax(pred_logits_adv, axis=1) | |||||

| pred_labels_adv = np.argmax(pred_logits_adv, axis=1) | |||||

| accuracy_adv = np.mean(np.equal(pred_labels_adv, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy after attacking is : %s", accuracy_adv) | |||||

| attack_evaluate = AttackEvaluate(np.concatenate(test_images).transpose(0, 2, 3, 1), | |||||

| np.concatenate(test_labels), | |||||

| adv_data.transpose(0, 2, 3, 1), | |||||

| pred_logits_adv) | |||||

| LOGGER.info(TAG, 'mis-classification rate of adversaries is : %s', | |||||

| attack_evaluate.mis_classification_rate()) | |||||

| LOGGER.info(TAG, 'The average confidence of adversarial class is : %s', | |||||

| attack_evaluate.avg_conf_adv_class()) | |||||

| LOGGER.info(TAG, 'The average confidence of true class is : %s', | |||||

| attack_evaluate.avg_conf_true_class()) | |||||

| LOGGER.info(TAG, 'The average distance (l0, l2, linf) between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_lp_distance()) | |||||

| LOGGER.info(TAG, 'The average structural similarity between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_ssim()) | |||||

| LOGGER.info(TAG, 'The average costing time is %s', | |||||

| (stop_time - start_time)/(batch_num*batch_size)) | |||||

| if __name__ == '__main__': | |||||

| test_fast_gradient_sign_method() | |||||
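
For readers unfamiliar with FGSM, the update it performs is `x_adv = clip(x + eps * sign(grad_x loss))`. The sketch below applies that rule to a toy linear softmax classifier in NumPy, where the input gradient has a closed form. It is an illustration under stated assumptions, not MindArmour's `FastGradientSignMethod`; `fgsm_linear`, the random weights, and the inputs are all hypothetical.

```python
import numpy as np


def softmax(z):
    """Row-wise numerically stable softmax."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def fgsm_linear(x, y_onehot, weights, bias, eps=0.3, bounds=(0.0, 1.0)):
    """One FGSM step against logits = x @ weights + bias with cross-entropy loss."""
    probs = softmax(x @ weights + bias)
    # d(loss)/d(logits) = probs - y, so d(loss)/dx = (probs - y) @ W^T.
    grad_x = (probs - y_onehot) @ weights.T
    x_adv = x + eps * np.sign(grad_x)
    # Keep the adversarial samples inside the valid input range.
    return np.clip(x_adv, bounds[0], bounds[1])


rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(4, 8))        # 4 samples, 8 features
weights = rng.normal(size=(8, 3))             # 3 classes
bias = np.zeros(3)
y_onehot = np.eye(3)[np.array([0, 1, 2, 0])]  # one-hot labels, as with sparse=False
x_adv = fgsm_linear(x, y_onehot, weights, bias, eps=0.3)
```

With `eps=0.3`, the value used in the demo, no feature moves by more than 0.3, which bounds the perturbation in the l-infinity norm.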

+ 138

- 0

example/mnist_demo/mnist_attack_genetic.py

| @@ -0,0 +1,138 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import sys | |||||

| import time | |||||

| import numpy as np | |||||

| import pytest | |||||

| from scipy.special import softmax | |||||

| from mindspore import Tensor | |||||

| from mindspore import context | |||||

| from mindspore.train.serialization import load_checkpoint, load_param_into_net | |||||

| from mindarmour.attacks.black.genetic_attack import GeneticAttack | |||||

| from mindarmour.attacks.black.black_model import BlackModel | |||||

| from mindarmour.utils.logger import LogUtil | |||||

| from mindarmour.evaluations.attack_evaluation import AttackEvaluate | |||||

| from lenet5_net import LeNet5 | |||||

| context.set_context(mode=context.GRAPH_MODE, device_target="Ascend") | |||||

| sys.path.append("..") | |||||

| from data_processing import generate_mnist_dataset | |||||

| LOGGER = LogUtil.get_instance() | |||||

| TAG = 'Genetic_Attack' | |||||

| class ModelToBeAttacked(BlackModel): | |||||

| """model to be attacked""" | |||||

| def __init__(self, network): | |||||

| super(ModelToBeAttacked, self).__init__() | |||||

| self._network = network | |||||

| def predict(self, inputs): | |||||

| """predict""" | |||||

| result = self._network(Tensor(inputs.astype(np.float32))) | |||||

| return result.asnumpy() | |||||

| @pytest.mark.level1 | |||||

| @pytest.mark.platform_arm_ascend_training | |||||

| @pytest.mark.platform_x86_ascend_training | |||||

| @pytest.mark.env_card | |||||

| @pytest.mark.component_mindarmour | |||||

| def test_genetic_attack_on_mnist(): | |||||

| """ | |||||

| Genetic-Attack test | |||||

| """ | |||||

| # load trained network | |||||

| ckpt_name = './trained_ckpt_file/checkpoint_lenet-10_1875.ckpt' | |||||

| net = LeNet5() | |||||

| load_dict = load_checkpoint(ckpt_name) | |||||

| load_param_into_net(net, load_dict) | |||||

| # get test data | |||||

| data_list = "./MNIST_unzip/test" | |||||

| batch_size = 32 | |||||

| ds = generate_mnist_dataset(data_list, batch_size=batch_size) | |||||

| # prediction accuracy before attack | |||||

| model = ModelToBeAttacked(net) | |||||

| batch_num = 3 # the number of batches of attacking samples | |||||

| test_images = [] | |||||

| test_labels = [] | |||||

| predict_labels = [] | |||||

| i = 0 | |||||

| for data in ds.create_tuple_iterator(): | |||||

| i += 1 | |||||

| images = data[0].astype(np.float32) | |||||

| labels = data[1] | |||||

| test_images.append(images) | |||||

| test_labels.append(labels) | |||||

| pred_labels = np.argmax(model.predict(images), axis=1) | |||||

| predict_labels.append(pred_labels) | |||||

| if i >= batch_num: | |||||

| break | |||||

| predict_labels = np.concatenate(predict_labels) | |||||

| true_labels = np.concatenate(test_labels) | |||||

| accuracy = np.mean(np.equal(predict_labels, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy before attacking is : %g", accuracy) | |||||

| # attacking | |||||

| attack = GeneticAttack(model=model, pop_size=6, mutation_rate=0.05, | |||||

| per_bounds=0.1, step_size=0.25, temp=0.1, | |||||

| sparse=True) | |||||

| targeted_labels = np.random.randint(0, 10, size=len(true_labels)) | |||||

| for i in range(len(true_labels)): | |||||

| if targeted_labels[i] == true_labels[i]: | |||||

| targeted_labels[i] = (targeted_labels[i] + 1) % 10 | |||||

| start_time = time.perf_counter() | |||||

| success_list, adv_data, query_list = attack.generate( | |||||

| np.concatenate(test_images), targeted_labels) | |||||

| stop_time = time.perf_counter() | |||||

| LOGGER.info(TAG, 'success_list: %s', success_list) | |||||

| LOGGER.info(TAG, 'average of query times is : %s', np.mean(query_list)) | |||||

| pred_logits_adv = model.predict(adv_data) | |||||

| # rescale predict confidences into (0, 1). | |||||

| pred_logits_adv = softmax(pred_logits_adv, axis=1) | |||||

| pred_labels_adv = np.argmax(pred_logits_adv, axis=1) | |||||

| accuracy_adv = np.mean(np.equal(pred_labels_adv, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy after attacking is : %g", | |||||

| accuracy_adv) | |||||

| test_labels_onehot = np.eye(10)[true_labels] | |||||

| attack_evaluate = AttackEvaluate(np.concatenate(test_images), | |||||

| test_labels_onehot, adv_data, | |||||

| pred_logits_adv, targeted=True, | |||||

| target_label=targeted_labels) | |||||

| LOGGER.info(TAG, 'mis-classification rate of adversaries is : %s', | |||||

| attack_evaluate.mis_classification_rate()) | |||||

| LOGGER.info(TAG, 'The average confidence of adversarial class is : %s', | |||||

| attack_evaluate.avg_conf_adv_class()) | |||||

| LOGGER.info(TAG, 'The average confidence of true class is : %s', | |||||

| attack_evaluate.avg_conf_true_class()) | |||||

| LOGGER.info(TAG, 'The average distance (l0, l2, linf) between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_lp_distance()) | |||||

| LOGGER.info(TAG, 'The average structural similarity between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_ssim()) | |||||

| LOGGER.info(TAG, 'The average costing time is %s', | |||||

| (stop_time - start_time)/(batch_num*batch_size)) | |||||

| if __name__ == '__main__': | |||||

| test_genetic_attack_on_mnist() | |||||
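
The targeted demos draw a random target label and bump it by one when it collides with the true label, which makes `true_label + 1` slightly more likely than the other classes. A possible alternative, sketched here under the assumption that a uniform draw over the remaining classes is wanted, adds a random non-zero offset instead. `pick_target_labels` is a hypothetical helper, not part of MindArmour.

```python
import numpy as np


def pick_target_labels(true_labels, num_classes=10, rng=None):
    """Return one target label per sample, uniformly chosen among the other classes."""
    rng = np.random.default_rng() if rng is None else rng
    true_labels = np.asarray(true_labels)
    # An offset in [1, num_classes - 1] can never map a label back onto itself.
    offsets = rng.integers(1, num_classes, size=true_labels.shape)
    return (true_labels + offsets) % num_classes


true_labels = np.array([0, 1, 2, 3, 9, 9, 5])
targeted_labels = pick_target_labels(true_labels, rng=np.random.default_rng(0))
```

Passing a seeded generator keeps the targets reproducible between runs, which helps when comparing attacks.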

+ 150

- 0

example/mnist_demo/mnist_attack_hsja.py

| @@ -0,0 +1,150 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import sys | |||||

| import numpy as np | |||||

| import pytest | |||||

| from mindspore import Tensor | |||||

| from mindspore import context | |||||

| from mindspore.train.serialization import load_checkpoint, load_param_into_net | |||||

| from mindarmour.attacks.black.hop_skip_jump_attack import HopSkipJumpAttack | |||||

| from mindarmour.attacks.black.black_model import BlackModel | |||||

| from mindarmour.utils.logger import LogUtil | |||||

| from lenet5_net import LeNet5 | |||||

| sys.path.append("..") | |||||

| from data_processing import generate_mnist_dataset | |||||

| context.set_context(mode=context.GRAPH_MODE) | |||||

| context.set_context(device_target="Ascend") | |||||

| LOGGER = LogUtil.get_instance() | |||||

| TAG = 'HopSkipJumpAttack' | |||||

| class ModelToBeAttacked(BlackModel): | |||||

| """model to be attacked""" | |||||

| def __init__(self, network): | |||||

| super(ModelToBeAttacked, self).__init__() | |||||

| self._network = network | |||||

| def predict(self, inputs): | |||||

| """predict""" | |||||

| if len(inputs.shape) == 3: | |||||

| inputs = inputs[np.newaxis, :] | |||||

| result = self._network(Tensor(inputs.astype(np.float32))) | |||||

| return result.asnumpy() | |||||

| def random_target_labels(true_labels): | |||||

| target_labels = [] | |||||

| for label in true_labels: | |||||

| while True: | |||||

| target_label = np.random.randint(0, 10) | |||||

| if target_label != label: | |||||

| target_labels.append(target_label) | |||||

| break | |||||

| return target_labels | |||||

| def create_target_images(dataset, data_labels, target_labels): | |||||

| res = [] | |||||

| for label in target_labels: | |||||

| for i in range(len(data_labels)): | |||||

| if data_labels[i] == label: | |||||

| res.append(dataset[i]) | |||||

| break | |||||

| return np.array(res) | |||||

| @pytest.mark.level1 | |||||

| @pytest.mark.platform_arm_ascend_training | |||||

| @pytest.mark.platform_x86_ascend_training | |||||

| @pytest.mark.env_card | |||||

| @pytest.mark.component_mindarmour | |||||

| def test_hsja_mnist_attack(): | |||||

| """ | |||||

| hsja-Attack test | |||||

| """ | |||||

| # load trained network | |||||

| ckpt_name = './trained_ckpt_file/checkpoint_lenet-10_1875.ckpt' | |||||

| net = LeNet5() | |||||

| load_dict = load_checkpoint(ckpt_name) | |||||

| load_param_into_net(net, load_dict) | |||||

| net.set_train(False) | |||||

| # get test data | |||||

| data_list = "./MNIST_unzip/test" | |||||

| batch_size = 32 | |||||

| ds = generate_mnist_dataset(data_list, batch_size=batch_size) | |||||

| # prediction accuracy before attack | |||||

| model = ModelToBeAttacked(net) | |||||

| batch_num = 5 # the number of batches of attacking samples | |||||

| test_images = [] | |||||

| test_labels = [] | |||||

| predict_labels = [] | |||||

| i = 0 | |||||

| for data in ds.create_tuple_iterator(): | |||||

| i += 1 | |||||

| images = data[0].astype(np.float32) | |||||

| labels = data[1] | |||||

| test_images.append(images) | |||||

| test_labels.append(labels) | |||||

| pred_labels = np.argmax(model.predict(images), axis=1) | |||||

| predict_labels.append(pred_labels) | |||||

| if i >= batch_num: | |||||

| break | |||||

| predict_labels = np.concatenate(predict_labels) | |||||

| true_labels = np.concatenate(test_labels) | |||||

| accuracy = np.mean(np.equal(predict_labels, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy before attacking is : %s", | |||||

| accuracy) | |||||

| test_images = np.concatenate(test_images) | |||||

| # attacking | |||||

| norm = 'l2' | |||||

| search = 'grid_search' | |||||

| target = False | |||||

| attack = HopSkipJumpAttack(model, constraint=norm, stepsize_search=search) | |||||

| if target: | |||||

| target_labels = random_target_labels(true_labels) | |||||

| target_images = create_target_images(test_images, predict_labels, | |||||

| target_labels) | |||||

| attack.set_target_images(target_images) | |||||

| success_list, adv_data, query_list = attack.generate(test_images, target_labels) | |||||

| else: | |||||

| success_list, adv_data, query_list = attack.generate(test_images, None) | |||||

| adv_datas = [] | |||||

| gts = [] | |||||

| for success, adv, gt in zip(success_list, adv_data, true_labels): | |||||

| if success: | |||||

| adv_datas.append(adv) | |||||

| gts.append(gt) | |||||

| if len(gts) > 0: | |||||

| adv_datas = np.concatenate(np.asarray(adv_datas), axis=0) | |||||

| gts = np.asarray(gts) | |||||

| pred_logits_adv = model.predict(adv_datas) | |||||

| pred_labels_adv = np.argmax(pred_logits_adv, axis=1) | |||||

| accuracy_adv = np.mean(np.equal(pred_labels_adv, gts)) | |||||

| LOGGER.info(TAG, 'mis-classification rate of adversaries is : %s', | |||||

| 1 - accuracy_adv) | |||||

| if __name__ == '__main__': | |||||

| test_hsja_mnist_attack() | |||||
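
The HopSkipJump demo keeps only the samples flagged in `success_list` before scoring them. A minimal sketch of that filtering step, computing the share of kept samples whose prediction differs from the ground truth, could look as follows. `mis_classification_rate` is a hypothetical helper and the arrays are made-up values, not output of `HopSkipJumpAttack`.

```python
import numpy as np


def mis_classification_rate(success_list, adv_preds, true_labels):
    """Fraction of successful adversarial samples predicted as a wrong class.

    Returns None when no attack succeeded, mirroring the demo's guard.
    """
    mask = np.asarray(success_list, dtype=bool)
    if not mask.any():
        return None
    adv_preds = np.asarray(adv_preds)[mask]
    true_labels = np.asarray(true_labels)[mask]
    return float(np.mean(adv_preds != true_labels))


success_list = [True, False, True, True]
adv_preds = [3, 1, 2, 7]      # predicted labels on the adversarial samples
true_labels = [1, 1, 2, 4]    # ground-truth labels
rate = mis_classification_rate(success_list, adv_preds, true_labels)
```

Here three samples are kept and two of them are misclassified, so the rate is 2/3. The accuracy on the same samples is one minus that value.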

+ 124

- 0

example/mnist_demo/mnist_attack_jsma.py

| @@ -0,0 +1,124 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import sys | |||||

| import time | |||||

| import numpy as np | |||||

| import pytest | |||||

| from scipy.special import softmax | |||||

| from mindspore import Model | |||||

| from mindspore import Tensor | |||||

| from mindspore import context | |||||

| from mindspore.train.serialization import load_checkpoint, load_param_into_net | |||||

| from mindarmour.attacks.jsma import JSMAAttack | |||||

| from mindarmour.utils.logger import LogUtil | |||||

| from mindarmour.evaluations.attack_evaluation import AttackEvaluate | |||||

| from lenet5_net import LeNet5 | |||||

| context.set_context(mode=context.GRAPH_MODE, device_target="Ascend") | |||||

| sys.path.append("..") | |||||

| from data_processing import generate_mnist_dataset | |||||

| LOGGER = LogUtil.get_instance() | |||||

| TAG = 'JSMA_Test' | |||||

| @pytest.mark.level1 | |||||

| @pytest.mark.platform_arm_ascend_training | |||||

| @pytest.mark.platform_x86_ascend_training | |||||

| @pytest.mark.env_card | |||||

| @pytest.mark.component_mindarmour | |||||

| def test_jsma_attack(): | |||||

| """ | |||||

| JSMA-Attack test | |||||

| """ | |||||

| # load trained network | |||||

| ckpt_name = './trained_ckpt_file/checkpoint_lenet-10_1875.ckpt' | |||||

| net = LeNet5() | |||||

| load_dict = load_checkpoint(ckpt_name) | |||||

| load_param_into_net(net, load_dict) | |||||

| # get test data | |||||

| data_list = "./MNIST_unzip/test" | |||||

| batch_size = 32 | |||||

| ds = generate_mnist_dataset(data_list, batch_size=batch_size) | |||||

| # prediction accuracy before attack | |||||

| model = Model(net) | |||||

| batch_num = 3 # the number of batches of attacking samples | |||||

| test_images = [] | |||||

| test_labels = [] | |||||

| predict_labels = [] | |||||

| i = 0 | |||||

| for data in ds.create_tuple_iterator(): | |||||

| i += 1 | |||||

| images = data[0].astype(np.float32) | |||||

| labels = data[1] | |||||

| test_images.append(images) | |||||

| test_labels.append(labels) | |||||

| pred_labels = np.argmax(model.predict(Tensor(images)).asnumpy(), | |||||

| axis=1) | |||||

| predict_labels.append(pred_labels) | |||||

| if i >= batch_num: | |||||

| break | |||||

| predict_labels = np.concatenate(predict_labels) | |||||

| true_labels = np.concatenate(test_labels) | |||||

| targeted_labels = np.random.randint(0, 10, size=len(true_labels)) | |||||

| for i in range(len(true_labels)): | |||||

| if targeted_labels[i] == true_labels[i]: | |||||

| targeted_labels[i] = (targeted_labels[i] + 1) % 10 | |||||

| accuracy = np.mean(np.equal(predict_labels, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy before attacking is : %g", accuracy) | |||||

| # attacking | |||||

| classes = 10 | |||||

| attack = JSMAAttack(net, classes) | |||||

| start_time = time.perf_counter() | |||||

| adv_data = attack.batch_generate(np.concatenate(test_images), | |||||

| targeted_labels, batch_size=32) | |||||

| stop_time = time.perf_counter() | |||||

| pred_logits_adv = model.predict(Tensor(adv_data)).asnumpy() | |||||

| # rescale predict confidences into (0, 1). | |||||

| pred_logits_adv = softmax(pred_logits_adv, axis=1) | |||||

| pred_labels_adv = np.argmax(pred_logits_adv, axis=1) | |||||

| accuracy_adv = np.mean(np.equal(pred_labels_adv, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy after attacking is : %g", | |||||

| accuracy_adv) | |||||

| test_labels = np.eye(10)[np.concatenate(test_labels)] | |||||

| attack_evaluate = AttackEvaluate( | |||||

| np.concatenate(test_images).transpose(0, 2, 3, 1), | |||||

| test_labels, adv_data.transpose(0, 2, 3, 1), | |||||

| pred_logits_adv, targeted=True, target_label=targeted_labels) | |||||

| LOGGER.info(TAG, 'mis-classification rate of adversaries is : %s', | |||||

| attack_evaluate.mis_classification_rate()) | |||||

| LOGGER.info(TAG, 'The average confidence of adversarial class is : %s', | |||||

| attack_evaluate.avg_conf_adv_class()) | |||||

| LOGGER.info(TAG, 'The average confidence of true class is : %s', | |||||

| attack_evaluate.avg_conf_true_class()) | |||||

| LOGGER.info(TAG, 'The average distance (l0, l2, linf) between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_lp_distance()) | |||||

| LOGGER.info(TAG, 'The average structural similarity between original ' | |||||

| 'samples and adversarial samples are: %s', | |||||

| attack_evaluate.avg_ssim()) | |||||

| LOGGER.info(TAG, 'The average costing time is %s', | |||||

| (stop_time - start_time) / (batch_num*batch_size)) | |||||

| if __name__ == '__main__': | |||||

| test_jsma_attack() | |||||
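
The demos log an average (l0, l2, linf) distance between original and adversarial samples. The sketch below shows one plain way to compute such averages with NumPy. It assumes unnormalized per-sample norms, which may differ from whatever scaling `AttackEvaluate.avg_lp_distance` applies internally; the local `avg_lp_distance` function and the tiny arrays are hypothetical.

```python
import numpy as np


def avg_lp_distance(inputs, adv_inputs):
    """Average l0, l2 and linf distance over samples (unnormalized)."""
    diff = (adv_inputs - inputs).reshape(len(inputs), -1)
    l0 = np.count_nonzero(diff, axis=1)        # number of changed pixels
    l2 = np.linalg.norm(diff, ord=2, axis=1)   # Euclidean size of the change
    linf = np.max(np.abs(diff), axis=1)        # largest single-pixel change
    return l0.mean(), l2.mean(), linf.mean()


inputs = np.zeros((2, 1, 2, 2))
adv_inputs = inputs.copy()
adv_inputs[0, 0, 0, 0] = 0.3
adv_inputs[0, 0, 1, 1] = 0.4
adv_inputs[1, 0, 0, 1] = 0.1
avg_l0, avg_l2, avg_linf = avg_lp_distance(inputs, adv_inputs)
```

JSMA changes few pixels by a large amount, so it tends to score low on l0 and high on linf, while gradient-sign attacks usually show the opposite pattern.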

+ 132

- 0

example/mnist_demo/mnist_attack_lbfgs.py

| @@ -0,0 +1,132 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import sys | |||||

| import time | |||||

| import numpy as np | |||||

| import pytest | |||||

| from scipy.special import softmax | |||||

| from mindspore import Model | |||||

| from mindspore import Tensor | |||||

| from mindspore import context | |||||

| from mindspore.train.serialization import load_checkpoint, load_param_into_net | |||||

| from mindarmour.attacks.lbfgs import LBFGS | |||||

| from mindarmour.utils.logger import LogUtil | |||||

| from mindarmour.evaluations.attack_evaluation import AttackEvaluate | |||||

| from lenet5_net import LeNet5 | |||||

| context.set_context(mode=context.GRAPH_MODE, device_target="Ascend") | |||||

| sys.path.append("..") | |||||

| from data_processing import generate_mnist_dataset | |||||

| LOGGER = LogUtil.get_instance() | |||||

| TAG = 'LBFGS_Test' | |||||

| @pytest.mark.level1 | |||||

| @pytest.mark.platform_arm_ascend_training | |||||

| @pytest.mark.platform_x86_ascend_training | |||||

| @pytest.mark.env_card | |||||

| @pytest.mark.component_mindarmour | |||||

| def test_lbfgs_attack(): | |||||

| """ | |||||

| LBFGS-Attack test | |||||

| """ | |||||

| # load trained network | |||||

| ckpt_name = './trained_ckpt_file/checkpoint_lenet-10_1875.ckpt' | |||||

| net = LeNet5() | |||||

| load_dict = load_checkpoint(ckpt_name) | |||||

| load_param_into_net(net, load_dict) | |||||

| # get test data | |||||

| data_list = "./MNIST_unzip/test" | |||||

| batch_size = 32 | |||||

| ds = generate_mnist_dataset(data_list, batch_size=batch_size, sparse=False) | |||||

| # prediction accuracy before attack | |||||

| model = Model(net) | |||||

| batch_num = 3 # the number of batches of attacking samples | |||||

| test_images = [] | |||||

| test_labels = [] | |||||

| predict_labels = [] | |||||

| i = 0 | |||||

| for data in ds.create_tuple_iterator(): | |||||

| i += 1 | |||||

| images = data[0].astype(np.float32) | |||||

| labels = data[1] | |||||

| test_images.append(images) | |||||

| test_labels.append(labels) | |||||

| pred_labels = np.argmax(model.predict(Tensor(images)).asnumpy(), | |||||

| axis=1) | |||||

| predict_labels.append(pred_labels) | |||||

| if i >= batch_num: | |||||

| break | |||||

| predict_labels = np.concatenate(predict_labels) | |||||

| true_labels = np.argmax(np.concatenate(test_labels), axis=1) | |||||

| accuracy = np.mean(np.equal(predict_labels, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy before attacking is : %s", accuracy) | |||||

| # attacking | |||||

| is_targeted = True | |||||

| if is_targeted: | |||||

| targeted_labels = np.random.randint(0, 10, size=len(true_labels)).astype(np.int32) | |||||

| for i in range(len(true_labels)): | |||||

| if targeted_labels[i] == true_labels[i]: | |||||

| targeted_labels[i] = (targeted_labels[i] + 1) % 10 | |||||

| else: | |||||

| targeted_labels = true_labels.astype(np.int32) | |||||

| targeted_labels = np.eye(10)[targeted_labels].astype(np.float32) | |||||

| attack = LBFGS(net, is_targeted=is_targeted) | |||||

| start_time = time.perf_counter() | |||||

| adv_data = attack.batch_generate(np.concatenate(test_images), | |||||

| targeted_labels, | |||||

| batch_size=batch_size) | |||||

| stop_time = time.perf_counter() | |||||

| pred_logits_adv = model.predict(Tensor(adv_data)).asnumpy() | |||||

| # rescale predicted confidences into (0, 1) with softmax. | |||||

| pred_logits_adv = softmax(pred_logits_adv, axis=1) | |||||

| pred_labels_adv = np.argmax(pred_logits_adv, axis=1) | |||||

| accuracy_adv = np.mean(np.equal(pred_labels_adv, true_labels)) | |||||

| LOGGER.info(TAG, "prediction accuracy after attacking is : %s", | |||||

| accuracy_adv) | |||||

| attack_evaluate = AttackEvaluate(np.concatenate(test_images).transpose(0, 2, 3, 1), | |||||

| np.concatenate(test_labels), | |||||

| adv_data.transpose(0, 2, 3, 1), | |||||

| pred_logits_adv, | |||||

| targeted=is_targeted, | |||||

| target_label=np.argmax(targeted_labels, | |||||

| axis=1)) | |||||

| LOGGER.info(TAG, 'mis-classification rate of adversaries is : %s', | |||||

| attack_evaluate.mis_classification_rate()) | |||||

| LOGGER.info(TAG, 'The average confidence of adversarial class is : %s', | |||||

| attack_evaluate.avg_conf_adv_class()) | |||||

| LOGGER.info(TAG, 'The average confidence of true class is : %s', | |||||

| attack_evaluate.avg_conf_true_class()) | |||||

| LOGGER.info(TAG, 'The average distance (l0, l2, linf) between original ' | |||||

| 'samples and adversarial samples is: %s', | |||||

| attack_evaluate.avg_lp_distance()) | |||||

| LOGGER.info(TAG, 'The average structural similarity between original ' | |||||

| 'samples and adversarial samples is: %s', | |||||

| attack_evaluate.avg_ssim()) | |||||

| LOGGER.info(TAG, 'The average time cost per sample is %s', | |||||

| (stop_time - start_time)/(batch_num*batch_size)) | |||||

| if __name__ == '__main__': | |||||

| test_lbfgs_attack() | |||||
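
The targeted branch of the L-BFGS script draws a random class per sample, bumps it by one whenever it collides with the true label, and then one-hot encodes the result with `np.eye`. The same idea can be sketched without the Python loop by drawing a non-zero offset and adding it modulo the class count. The helper name `random_one_hot_targets` and its `seed` parameter are assumptions made for this illustration and are not part of the commit; unlike the loop in the script, this variant picks uniformly among the other classes.

```python
import numpy as np


def random_one_hot_targets(true_labels, num_classes=10, seed=None):
    """Draw one-hot target labels that never equal the true label.

    Hypothetical helper for illustration; `true_labels` holds integer
    class indices in [0, num_classes).
    """
    rng = np.random.default_rng(seed)
    true_labels = np.asarray(true_labels, dtype=np.int64)
    # An offset in [1, num_classes - 1] can never map a label onto itself.
    offsets = rng.integers(1, num_classes, size=true_labels.shape[0])
    targets = (true_labels + offsets) % num_classes
    # Row i of the identity matrix is the one-hot vector of class i.
    return np.eye(num_classes, dtype=np.float32)[targets]
```

The returned array has shape `(len(true_labels), num_classes)` and dtype `float32`, which matches the form the script passes as `targeted_labels` to `attack.batch_generate`.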

+ 168

- 0

example/mnist_demo/mnist_attack_nes.py


| @@ -0,0 +1,168 @@ | |||||

| # Copyright 2019 Huawei Technologies Co., Ltd | |||||

| # | |||||

| # Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| # you may not use this file except in compliance with the License. | |||||

| # You may obtain a copy of the License at | |||||

| # | |||||

| # http://www.apache.org/licenses/LICENSE-2.0 | |||||

| # | |||||

| # Unless required by applicable law or agreed to in writing, software | |||||

| # distributed under the License is distributed on an "AS IS" BASIS, | |||||

| # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| # See the License for the specific language governing permissions and | |||||

| # limitations under the License. | |||||

| import sys | |||||

| import numpy as np | |||||

| import pytest | |||||

| from mindspore import Tensor | |||||

| from mindspore import context | |||||

| from mindspore.train.serialization import load_checkpoint, load_param_into_net | |||||

| from mindarmour.attacks.black.natural_evolutionary_strategy import NES | |||||

| from mindarmour.attacks.black.black_model import BlackModel | |||||

| from mindarmour.utils.logger import LogUtil | |||||

| from lenet5_net import LeNet5 | |||||

| sys.path.append("..") | |||||

| from data_processing import generate_mnist_dataset | |||||

| context.set_context(mode=context.GRAPH_MODE) | |||||

| context.set_context(device_target="Ascend") | |||||

| LOGGER = LogUtil.get_instance() | |||||

| TAG = 'NES_Attack' | |||||

| class ModelToBeAttacked(BlackModel): | |||||

| """Model to be attacked.""" | |||||

| def __init__(self, network): | |||||

| super(ModelToBeAttacked, self).__init__() | |||||

| self._network = network | |||||

| def predict(self, inputs): | |||||

| """predict""" | |||||

| if len(inputs.shape) == 3: | |||||

| inputs = inputs[np.newaxis, :] | |||||

| result = self._network(Tensor(inputs.astype(np.float32))) | |||||

| return result.asnumpy() | |||||

| def random_target_labels(true_labels, labels_list): | |||||

| target_labels = [] | |||||

| for label in true_labels: | |||||

| while True: | |||||

| target_label = np.random.choice(labels_list) | |||||

| if target_label != label: | |||||

| target_labels.append(target_label) | |||||

| break | |||||

| return target_labels | |||||

| def _pseudorandom_target(index, total_indices, true_class): | |||||

| """Pick a pseudo-random target class, seeded by index, that differs from true_class.""" | |||||

| rng = np.random.RandomState(index) | |||||

| target = true_class | |||||

| while target == true_class: | |||||

| target = rng.randint(0, total_indices) | |||||

| return target | |||||

| def create_target_images(dataset, data_labels, target_labels): | |||||

| res = [] | |||||

| for label in target_labels: | |||||

| for i in range(len(data_labels)): | |||||

| if data_labels[i] == label: | |||||

| res.append(dataset[i]) | |||||

| break | |||||

| return np.array(res) | |||||

| @pytest.mark.level1 | |||||

| @pytest.mark.platform_arm_ascend_training | |||||

| @pytest.mark.platform_x86_ascend_training | |||||

| @pytest.mark.env_card | |||||

| @pytest.mark.component_mindarmour | |||||

| def test_nes_mnist_attack(): | |||||

| """ | |||||

| NES-Attack test | |||||

| """ | |||||

| # load trained network | |||||