Compare commits

merge into: hummingbird:master

hummingbird:master

hummingbird:r0.1

hummingbird:r0.2

hummingbird:r0.3

hummingbird:r0.5

hummingbird:r0.6

hummingbird:r0.7

hummingbird:r1.0

hummingbird:r1.1

hummingbird:r1.2

hummingbird:r1.3

hummingbird:r1.5

hummingbird:r1.6

hummingbird:r1.7

hummingbird:r1.8

hummingbird:r1.9

hummingbird:r2.0

hummingbird:serving

pull from: hummingbird:r0.1

hummingbird:master

hummingbird:r0.1

hummingbird:r0.2

hummingbird:r0.3

hummingbird:r0.5

hummingbird:r0.6

hummingbird:r0.7

hummingbird:r1.0

hummingbird:r1.1

hummingbird:r1.2

hummingbird:r1.3

hummingbird:r1.5

hummingbird:r1.6

hummingbird:r1.7

hummingbird:r1.8

hummingbird:r1.9

hummingbird:r2.0

hummingbird:serving

100 changed files with 711 additions and 7180 deletions

Split View

Diff Options

-

+2 -3.gitee/PULL_REQUEST_TEMPLATE.md

-

+0 -19.github/ISSUE_TEMPLATE/RFC.md

-

+0 -43.github/ISSUE_TEMPLATE/bug-report.md

-

+0 -19.github/ISSUE_TEMPLATE/task-tracking.md

-

+0 -24.github/PULL_REQUEST_TEMPLATE.md

-

+1 -2.gitignore

-

+0 -1.jenkins/check/config/filter_cppcheck.txt

-

+0 -1.jenkins/check/config/filter_cpplint.txt

-

+0 -48.jenkins/check/config/filter_pylint.txt

-

+0 -6.jenkins/check/config/whitelizard.txt

-

+0 -3.jenkins/test/config/dependent_packages.yaml

-

+0 -3.vscode/settings.json

-

+0 -8OWNERS

-

+28 -98README.md

-

+0 -141README_CN.md

-

+5 -373RELEASE.md

-

+0 -69RELEASE_CN.md

-

BINdocs/adversarial_robustness_cn.png

-

BINdocs/adversarial_robustness_en.png

-

+0 -618docs/api/api_python/mindarmour.adv_robustness.attacks.rst

-

+0 -96docs/api/api_python/mindarmour.adv_robustness.defenses.rst

-

+0 -348docs/api/api_python/mindarmour.adv_robustness.detectors.rst

-

+0 -174docs/api/api_python/mindarmour.adv_robustness.evaluations.rst

-

+0 -186docs/api/api_python/mindarmour.fuzz_testing.rst

-

+0 -143docs/api/api_python/mindarmour.natural_robustness.transform.image.rst

-

+0 -269docs/api/api_python/mindarmour.privacy.diff_privacy.rst

-

+0 -108docs/api/api_python/mindarmour.privacy.evaluation.rst

-

+0 -175docs/api/api_python/mindarmour.privacy.sup_privacy.rst

-

+0 -129docs/api/api_python/mindarmour.reliability.rst

-

+0 -344docs/api/api_python/mindarmour.rst

-

+0 -113docs/api/api_python/mindarmour.utils.rst

-

BINdocs/differential_privacy_architecture_cn.png

-

BINdocs/differential_privacy_architecture_en.png

-

BINdocs/fuzzer_architecture_cn.png

-

BINdocs/fuzzer_architecture_en.png

-

BINdocs/mindarmour_architecture.png

-

BINdocs/privacy_leakage_cn.png

-

BINdocs/privacy_leakage_en.png

-

+62 -0example/data_processing.py

-

+46 -0example/mnist_demo/README.md

-

+5 -4example/mnist_demo/lenet5_net.py

-

+19 -11example/mnist_demo/mnist_attack_cw.py

-

+20 -11example/mnist_demo/mnist_attack_deepfool.py

-

+27 -19example/mnist_demo/mnist_attack_fgsm.py

-

+24 -19example/mnist_demo/mnist_attack_genetic.py

-

+27 -19example/mnist_demo/mnist_attack_hsja.py

-

+22 -13example/mnist_demo/mnist_attack_jsma.py

-

+30 -21example/mnist_demo/mnist_attack_lbfgs.py

-

+24 -15example/mnist_demo/mnist_attack_nes.py

-

+29 -21example/mnist_demo/mnist_attack_pgd.py

-

+23 -14example/mnist_demo/mnist_attack_pointwise.py

-

+20 -15example/mnist_demo/mnist_attack_pso.py

-

+37 -21example/mnist_demo/mnist_attack_salt_and_pepper.py

-

+144 -0example/mnist_demo/mnist_defense_nad.py

-

+44 -46example/mnist_demo/mnist_evaluation.py

-

+26 -26example/mnist_demo/mnist_similarity_detector.py

-

+46 -23example/mnist_demo/mnist_train.py

-

+0 -45examples/README.md

-

+0 -16examples/__init__.py

-

+0 -32examples/ai_fuzzer/README.md

-

+0 -176examples/ai_fuzzer/fuzz_testing_and_model_enhense.py

-

+0 -89examples/ai_fuzzer/lenet5_mnist_coverage.py

-

+0 -131examples/ai_fuzzer/lenet5_mnist_fuzzing.py

-

+0 -188examples/common/dataset/data_processing.py

-

+0 -0examples/common/networks/__init__.py

-

+0 -0examples/common/networks/lenet5/__init__.py

-

+0 -98examples/common/networks/lenet5/lenet5_net_for_fuzzing.py

-

+0 -0examples/common/networks/resnet/__init__.py

-

+0 -401examples/common/networks/resnet/resnet.py

-

+0 -14examples/common/networks/vgg/__init__.py

-

+0 -45examples/common/networks/vgg/config.py

-

+0 -39examples/common/networks/vgg/crossentropy.py

-

+0 -23examples/common/networks/vgg/linear_warmup.py

-

+0 -0examples/common/networks/vgg/utils/__init__.py

-

+0 -36examples/common/networks/vgg/utils/util.py

-

+0 -219examples/common/networks/vgg/utils/var_init.py

-

+0 -142examples/common/networks/vgg/vgg.py

-

+0 -40examples/common/networks/vgg/warmup_cosine_annealing_lr.py

-

+0 -84examples/common/networks/vgg/warmup_step_lr.py

-

+0 -0examples/community/__init__.py

-

+0 -119examples/community/face_adversarial_attack/README.md

-

+0 -275examples/community/face_adversarial_attack/adversarial_attack.py

-

+0 -45examples/community/face_adversarial_attack/example_non_target_attack.py

-

+0 -46examples/community/face_adversarial_attack/example_target_attack.py

-

+0 -154examples/community/face_adversarial_attack/loss_design.py

-

BINexamples/community/face_adversarial_attack/photos/adv_input/adv.png

-

BINexamples/community/face_adversarial_attack/photos/input/input1.jpg

-

BINexamples/community/face_adversarial_attack/photos/target/target1.jpg

-

+0 -59examples/community/face_adversarial_attack/test.py

-

+0 -40examples/model_security/README.md

-

+0 -0examples/model_security/__init__.py

-

+0 -0examples/model_security/model_attacks/__init__.py

-

+0 -0examples/model_security/model_attacks/black_box/__init__.py

-

+0 -47examples/model_security/model_attacks/cv/faster_rcnn/README.md

-

+0 -150examples/model_security/model_attacks/cv/faster_rcnn/coco_attack_genetic.py

-

+0 -135examples/model_security/model_attacks/cv/faster_rcnn/coco_attack_pgd.py

-

+0 -149examples/model_security/model_attacks/cv/faster_rcnn/coco_attack_pso.py

-

+0 -31examples/model_security/model_attacks/cv/faster_rcnn/src/FasterRcnn/__init__.py

-

+0 -84examples/model_security/model_attacks/cv/faster_rcnn/src/FasterRcnn/anchor_generator.py

-

+0 -166examples/model_security/model_attacks/cv/faster_rcnn/src/FasterRcnn/bbox_assign_sample.py

+ 2

- 3

.gitee/PULL_REQUEST_TEMPLATE.md

View File

| @@ -11,7 +11,7 @@ If this is your first time, please read our contributor guidelines: https://gite | |||

| > /kind feature | |||

| **What does this PR do / why do we need it**: | |||

| **What this PR does / why we need it**: | |||

| **Which issue(s) this PR fixes**: | |||

| @@ -21,6 +21,5 @@ Usage: `Fixes #<issue number>`, or `Fixes (paste link of issue)`. | |||

| --> | |||

| Fixes # | |||

| **Special notes for your reviewers**: | |||

| **Special notes for your reviewer**: | |||

+ 0

- 19

.github/ISSUE_TEMPLATE/RFC.md

View File

| @@ -1,19 +0,0 @@ | |||

| --- | |||

| name: RFC | |||

| about: Use this template for the new feature or enhancement | |||

| labels: kind/feature or kind/enhancement | |||

| --- | |||

| ## Background | |||

| - Describe the status of the problem you wish to solve | |||

| - Attach the relevant issue if have | |||

| ## Introduction | |||

| - Describe the general solution, design and/or pseudo-code | |||

| ## Trail | |||

| | No. | Task Description | Related Issue(URL) | | |||

| | --- | ---------------- | ------------------ | | |||

| | 1 | | | | |||

| | 2 | | | | |||

+ 0

- 43

.github/ISSUE_TEMPLATE/bug-report.md

View File

| @@ -1,43 +0,0 @@ | |||

| --- | |||

| name: Bug Report | |||

| about: Use this template for reporting a bug | |||

| labels: kind/bug | |||

| --- | |||

| <!-- Thanks for sending an issue! Here are some tips for you: | |||

| If this is your first time, please read our contributor guidelines: https://github.com/mindspore-ai/mindspore/blob/master/CONTRIBUTING.md | |||

| --> | |||

| ## Environment | |||

| ### Hardware Environment(`Ascend`/`GPU`/`CPU`): | |||

| > Uncomment only one ` /device <>` line, hit enter to put that in a new line, and remove leading whitespaces from that line: | |||

| > | |||

| > `/device ascend`</br> | |||

| > `/device gpu`</br> | |||

| > `/device cpu`</br> | |||

| ### Software Environment: | |||

| - **MindSpore version (source or binary)**: | |||

| - **Python version (e.g., Python 3.7.5)**: | |||

| - **OS platform and distribution (e.g., Linux Ubuntu 16.04)**: | |||

| - **GCC/Compiler version (if compiled from source)**: | |||

| ## Describe the current behavior | |||

| ## Describe the expected behavior | |||

| ## Steps to reproduce the issue | |||

| 1. | |||

| 2. | |||

| 3. | |||

| ## Related log / screenshot | |||

| ## Special notes for this issue | |||

+ 0

- 19

.github/ISSUE_TEMPLATE/task-tracking.md

View File

| @@ -1,19 +0,0 @@ | |||

| --- | |||

| name: Task | |||

| about: Use this template for task tracking | |||

| labels: kind/task | |||

| --- | |||

| ## Task Description | |||

| ## Task Goal | |||

| ## Sub Task | |||

| | No. | Task Description | Issue ID | | |||

| | --- | ---------------- | -------- | | |||

| | 1 | | | | |||

| | 2 | | | | |||

+ 0

- 24

.github/PULL_REQUEST_TEMPLATE.md

View File

| @@ -1,24 +0,0 @@ | |||

| <!-- Thanks for sending a pull request! Here are some tips for you: | |||

| If this is your first time, please read our contributor guidelines: https://github.com/mindspore-ai/mindspore/blob/master/CONTRIBUTING.md | |||

| --> | |||

| **What type of PR is this?** | |||

| > Uncomment only one ` /kind <>` line, hit enter to put that in a new line, and remove leading whitespaces from that line: | |||

| > | |||

| > `/kind bug`</br> | |||

| > `/kind task`</br> | |||

| > `/kind feature`</br> | |||

| **What does this PR do / why do we need it**: | |||

| **Which issue(s) this PR fixes**: | |||

| <!-- | |||

| *Automatically closes linked issue when PR is merged. | |||

| Usage: `Fixes #<issue number>`, or `Fixes (paste link of issue)`. | |||

| --> | |||

| Fixes # | |||

| **Special notes for your reviewers**: | |||

+ 1

- 2

.gitignore

View File

| @@ -13,7 +13,7 @@ build/ | |||

| dist/ | |||

| local_script/ | |||

| example/dataset/ | |||

| example/mnist_demo/MNIST/ | |||

| example/mnist_demo/MNIST_unzip/ | |||

| example/mnist_demo/trained_ckpt_file/ | |||

| example/mnist_demo/model/ | |||

| example/cifar_demo/model/ | |||

| @@ -26,4 +26,3 @@ mindarmour.egg-info/ | |||

| *pre_trained_model/ | |||

| *__pycache__/ | |||

| *kernel_meta | |||

| .DS_Store | |||

+ 0

- 1

.jenkins/check/config/filter_cppcheck.txt

View File

| @@ -1 +0,0 @@ | |||

| # MindArmour | |||

+ 0

- 1

.jenkins/check/config/filter_cpplint.txt

View File

| @@ -1 +0,0 @@ | |||

| # MindArmour | |||

+ 0

- 48

.jenkins/check/config/filter_pylint.txt

View File

| @@ -1,48 +0,0 @@ | |||

| # MindArmour | |||

| "mindarmour/mindarmour/privacy/diff_privacy" "protected-access" | |||

| "mindarmour/mindarmour/fuzz_testing/fuzzing.py" "missing-docstring" | |||

| "mindarmour/mindarmour/fuzz_testing/fuzzing.py" "protected-access" | |||

| "mindarmour/mindarmour/fuzz_testing/fuzzing.py" "consider-using-enumerate" | |||

| "mindarmour/setup.py" "missing-docstring" | |||

| "mindarmour/setup.py" "invalid-name" | |||

| "mindarmour/mindarmour/reliability/model_fault_injection/fault_injection.py" "protected-access" | |||

| "mindarmour/setup.py" "unused-argument" | |||

| # Tests | |||

| "mindarmour/tests/st" "missing-docstring" | |||

| "mindarmour/tests/ut" "missing-docstring" | |||

| "mindarmour/tests/st/resnet50/resnet_cifar10.py" "unused-argument" | |||

| "mindarmour/tests/ut/python/fuzzing/test_fuzzing.py" "invalid-name" | |||

| "mindarmour/tests/ut/python/attacks/test_lbfgs.py" "wrong-import-position" | |||

| "mindarmour/tests/ut/python/attacks/black/test_nes.py" "wrong-import-position" | |||

| "mindarmour/tests/ut/python/attacks/black/test_nes.py" "consider-using-enumerate" | |||

| "mindarmour/tests/ut/python/attacks/black/test_hsja.py" "wrong-import-position" | |||

| "mindarmour/tests/ut/python/attacks/black/test_hsja.py" "consider-using-enumerate" | |||

| "mindarmour/tests/ut/python/attacks/black/test_salt_and_pepper_attack.py" "unused-variable" | |||

| "mindarmour/tests/ut/python/attacks/black/test_pointwise_attack.py" "wrong-import-position" | |||

| "mindarmour/tests/ut/python/evaluations/test_radar_metric.py" "bad-continuation" | |||

| "mindarmour/tests/ut/python/diff_privacy/test_membership_inference.py" "wrong-import-position" | |||

| # Example | |||

| "mindarmour/examples/ai_fuzzer/lenet5_mnist_coverage.py" "missing-docstring" | |||

| "mindarmour/examples/ai_fuzzer/lenet5_mnist_fuzzing.py" "missing-docstring" | |||

| "mindarmour/examples/ai_fuzzer/fuzz_testing_and_model_enhense.py" "missing-docstring" | |||

| "mindarmour/examples/common/dataset/data_processing.py" "missing-docstring" | |||

| "mindarmour/examples/common/networks/lenet5/lenet5_net.py" "missing-docstring" | |||

| "mindarmour/examples/common/networks/lenet5/mnist_train.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/black_box/mnist_attack_genetic.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/black_box/mnist_attack_hsja.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/black_box/mnist_attack_nes.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/black_box/mnist_attack_pointwise.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/black_box/mnist_attack_pso.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/black_box/mnist_attack_salt_and_pepper.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/white_box/mnist_attack_cw.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/white_box/mnist_attack_deepfool.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/white_box/mnist_attack_fgsm.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/white_box/mnist_attack_jsma.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/white_box/mnist_attack_lbfgs.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/white_box/mnist_attack_mdi2fgsm.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_attacks/white_box/mnist_attack_pgd.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_defenses/mnist_defense_nad.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_defenses/mnist_evaluation.py" "missing-docstring" | |||

| "mindarmour/examples/model_security/model_defenses/mnist_similarity_detector.py" "missing-docstring" | |||

+ 0

- 6

.jenkins/check/config/whitelizard.txt

View File

| @@ -1,6 +0,0 @@ | |||

| # Scene1: | |||

| # function_name1, function_name2 | |||

| # Scene2: | |||

| # file_path:function_name1, function_name2 | |||

| # | |||

| mindarmour/examples/model_security/model_defenses/mnist_evaluation.py:test_defense_evaluation | |||

+ 0

- 3

.jenkins/test/config/dependent_packages.yaml

View File

| @@ -1,3 +0,0 @@ | |||

| mindspore: | |||

| 'mindspore/mindspore/version/202209/20220923/r1.9_20220923224458_c16390f59ab8dace3bb7e5a6ab4ae4d3bfe74bea/' | |||

+ 0

- 3

.vscode/settings.json

View File

| @@ -1,3 +0,0 @@ | |||

| { | |||

| "esbonio.sphinx.confDir": "" | |||

| } | |||

+ 0

- 8

OWNERS

View File

| @@ -1,8 +0,0 @@ | |||

| approvers: | |||

| - pkuliuliu | |||

| - ZhidanLiu | |||

| - jxlang910 | |||

| reviewers: | |||

| - 张澍坤 | |||

| - emmmmtang | |||

+ 28

- 98

README.md

View File

| @@ -1,123 +1,53 @@ | |||

| # MindArmour | |||

| <!-- TOC --> | |||

| - [MindArmour](#mindarmour) | |||

| - [What is MindArmour](#what-is-mindarmour) | |||

| - [Adversarial Robustness Module](#adversarial-robustness-module) | |||

| - [Fuzz Testing Module](#fuzz-testing-module) | |||

| - [Privacy Protection and Evaluation Module](#privacy-protection-and-evaluation-module) | |||

| - [Differential Privacy Training Module](#differential-privacy-training-module) | |||

| - [Privacy Leakage Evaluation Module](#privacy-leakage-evaluation-module) | |||

| - [Starting](#starting) | |||

| - [System Environment Information Confirmation](#system-environment-information-confirmation) | |||

| - [Installation](#installation) | |||

| - [Installation by Source Code](#installation-by-source-code) | |||

| - [Installation by pip](#installation-by-pip) | |||

| - [Installation Verification](#installation-verification) | |||

| - [Docs](#docs) | |||

| - [Community](#community) | |||

| - [Contributing](#contributing) | |||

| - [Release Notes](#release-notes) | |||

| - [License](#license) | |||

| <!-- /TOC --> | |||

| [查看中文](./README_CN.md) | |||

| - [What is MindArmour](#what-is-mindarmour) | |||

| - [Setting up](#setting-up-mindarmour) | |||

| - [Docs](#docs) | |||

| - [Community](#community) | |||

| - [Contributing](#contributing) | |||

| - [Release Notes](#release-notes) | |||

| - [License](#license) | |||

| ## What is MindArmour | |||

| MindArmour focus on security and privacy of artificial intelligence. MindArmour can be used as a tool box for MindSpore users to enhance model security and trustworthiness and protect privacy data. MindArmour contains three module: Adversarial Robustness Module, Fuzz Testing Module, Privacy Protection and Evaluation Module. | |||

| A tool box for MindSpore users to enhance model security and trustworthiness. | |||

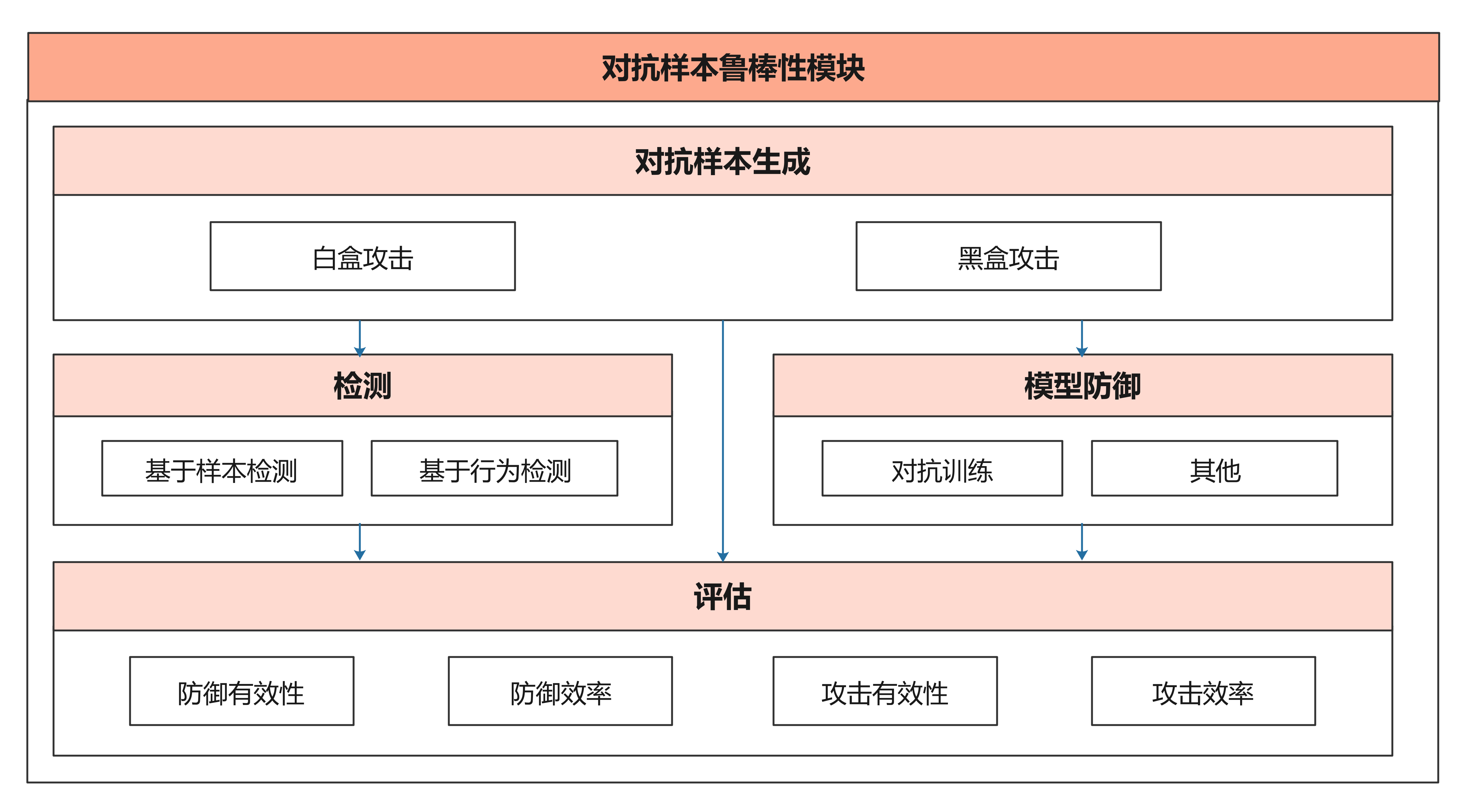

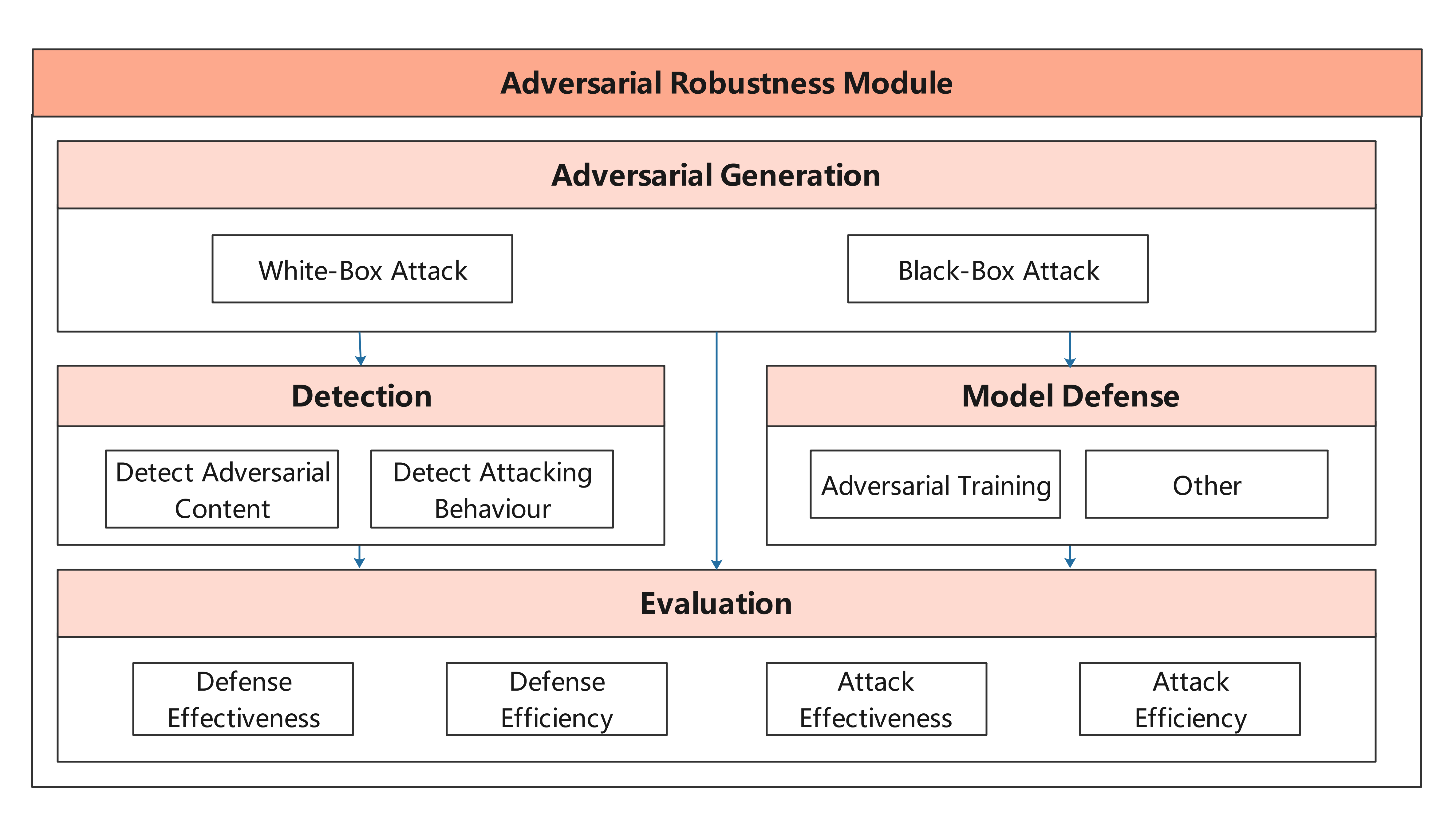

| ### Adversarial Robustness Module | |||

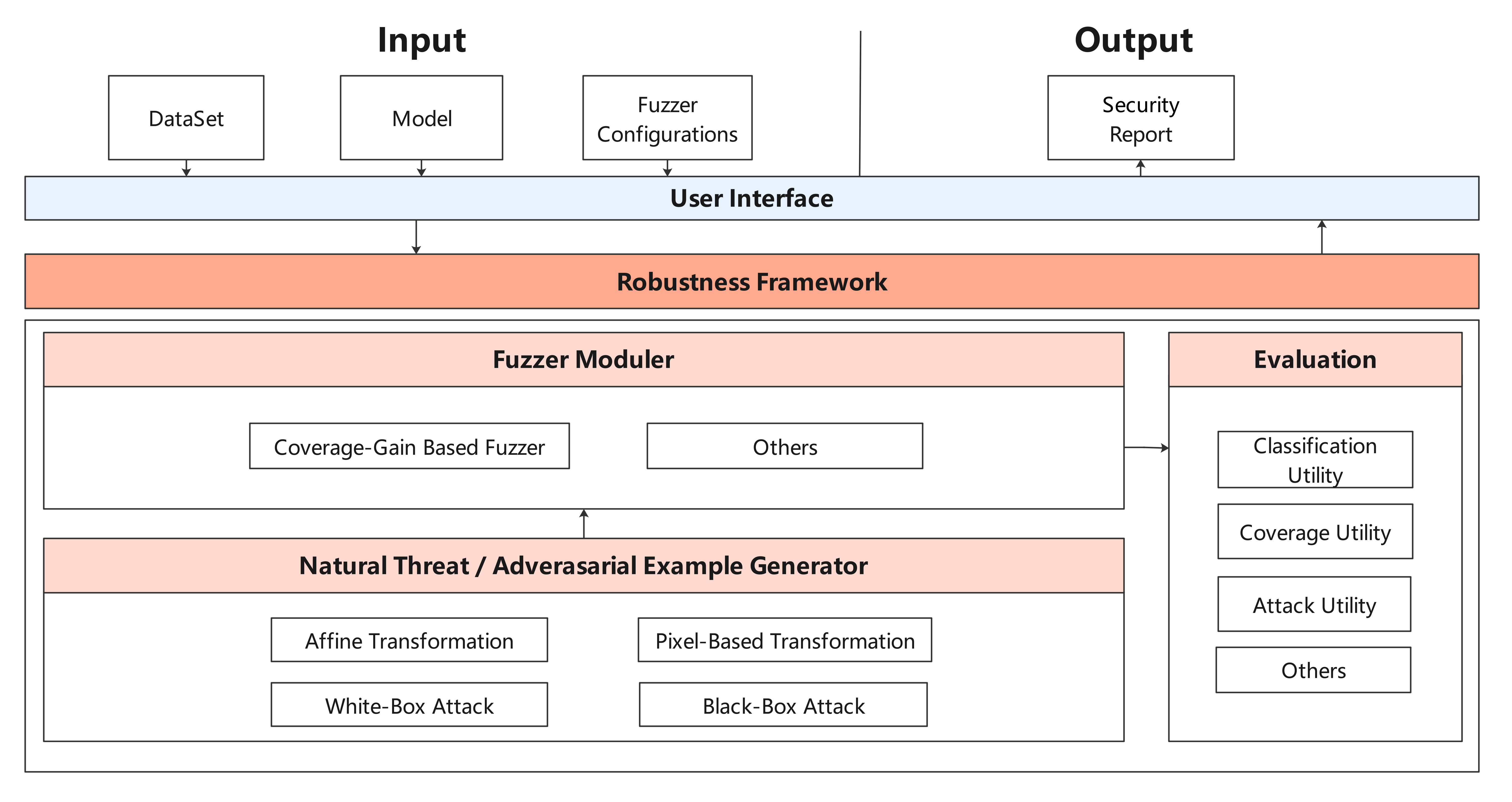

| MindArmour is designed for adversarial examples, including four submodule: adversarial examples generation, adversarial example detection, model defense and evaluation. The architecture is shown as follow: | |||

| Adversarial robustness module is designed for evaluating the robustness of the model against adversarial examples, and provides model enhancement methods to enhance the model's ability to resist the adversarial attack and improve the model's robustness. | |||

| This module includes four submodule: Adversarial Examples Generation, Adversarial Examples Detection, Model Defense and Evaluation. | |||

|  | |||

| The architecture is shown as follow: | |||

| ## Setting up MindArmour | |||

|  | |||

| ### Dependencies | |||

| ### Fuzz Testing Module | |||

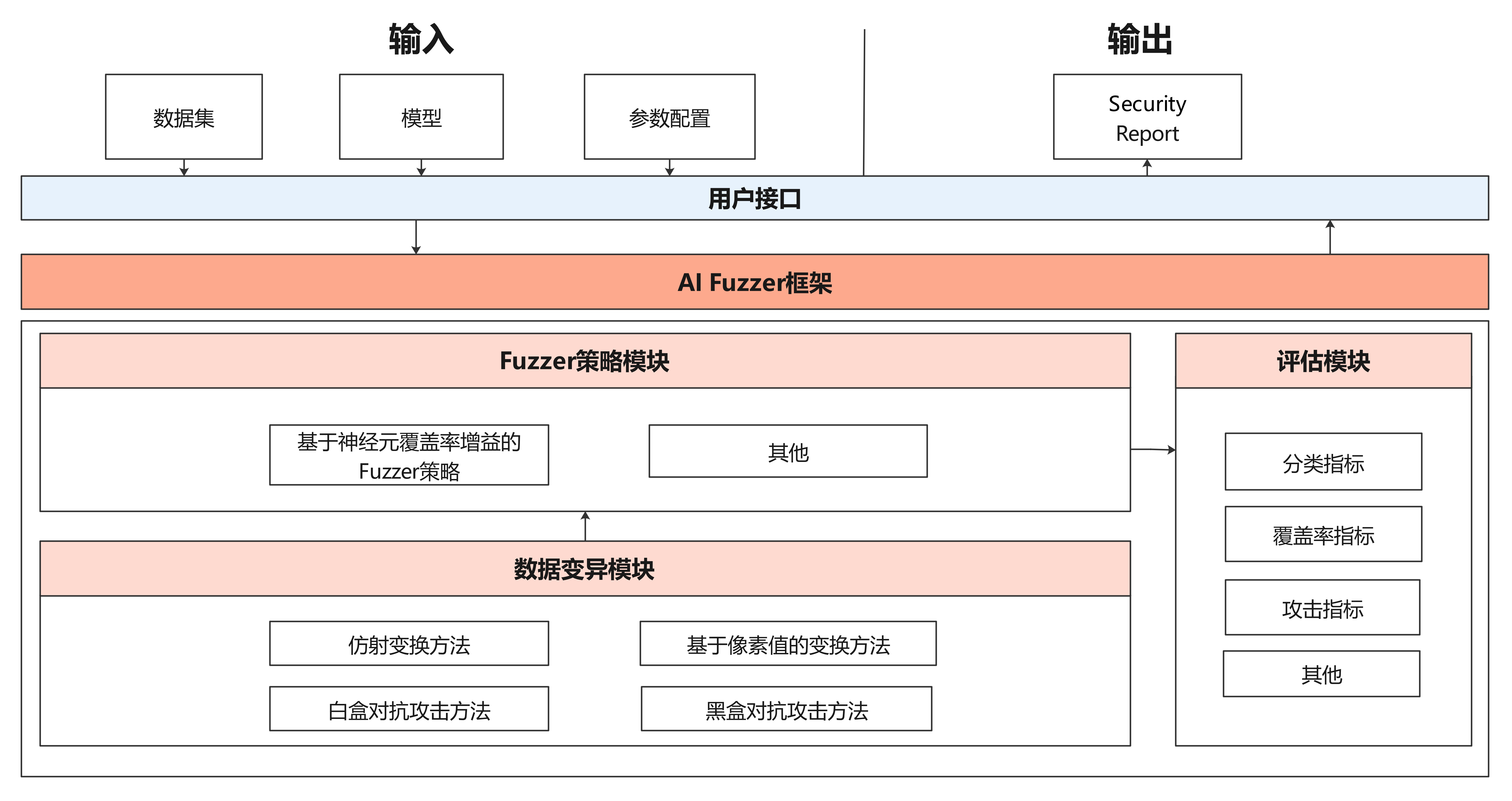

| Fuzz Testing module is a security test for AI models. We introduce neuron coverage gain as a guide to fuzz testing according to the characteristics of neural networks. | |||

| Fuzz testing is guided to generate samples in the direction of increasing neuron coverage rate, so that the input can activate more neurons and neuron values have a wider distribution range to fully test neural networks and explore different types of model output results and wrong behaviors. | |||

| The architecture is shown as follow: | |||

|  | |||

| ### Privacy Protection and Evaluation Module | |||

| Privacy Protection and Evaluation Module includes two modules: Differential Privacy Training Module and Privacy Leakage Evaluation Module. | |||

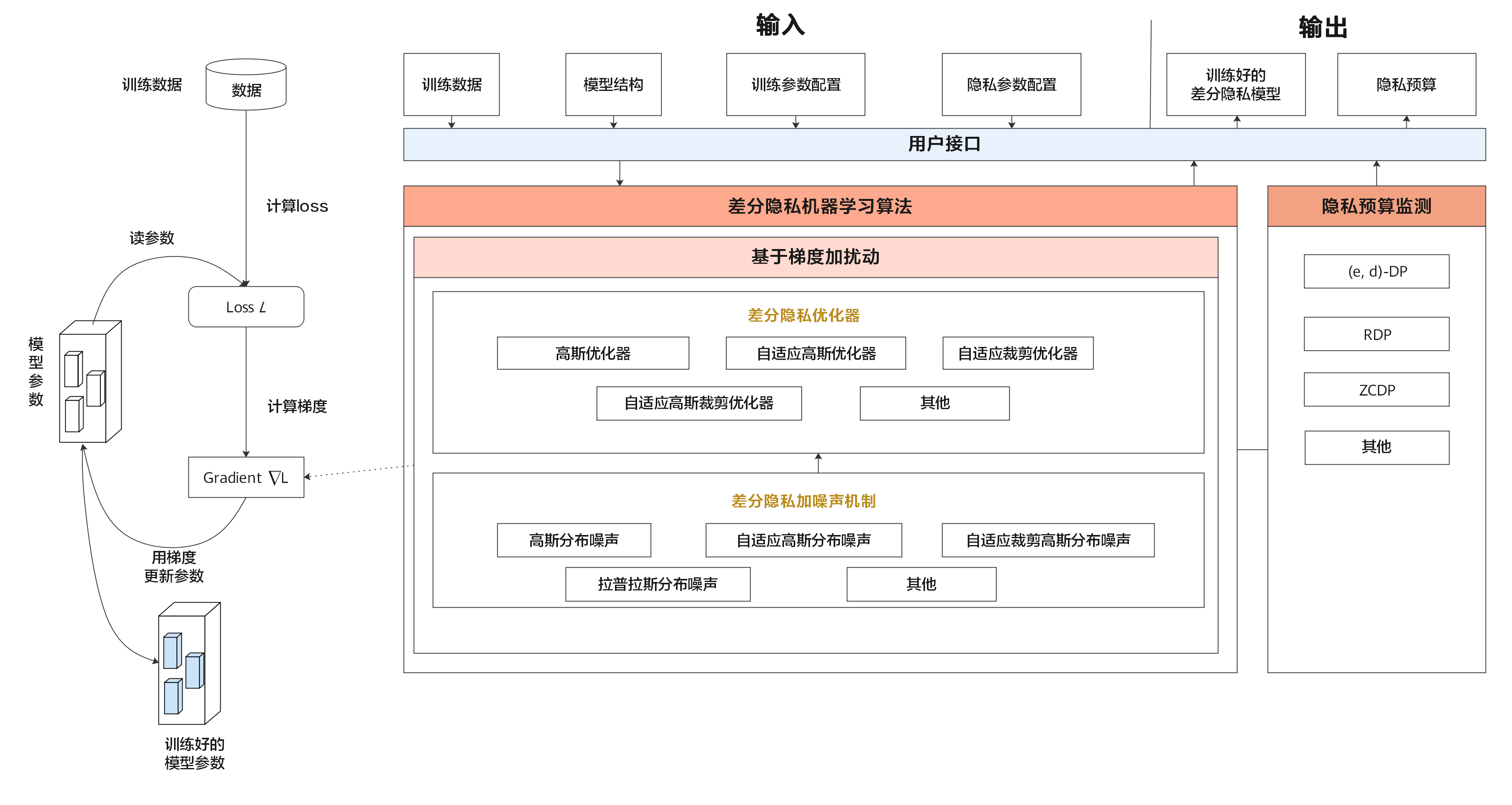

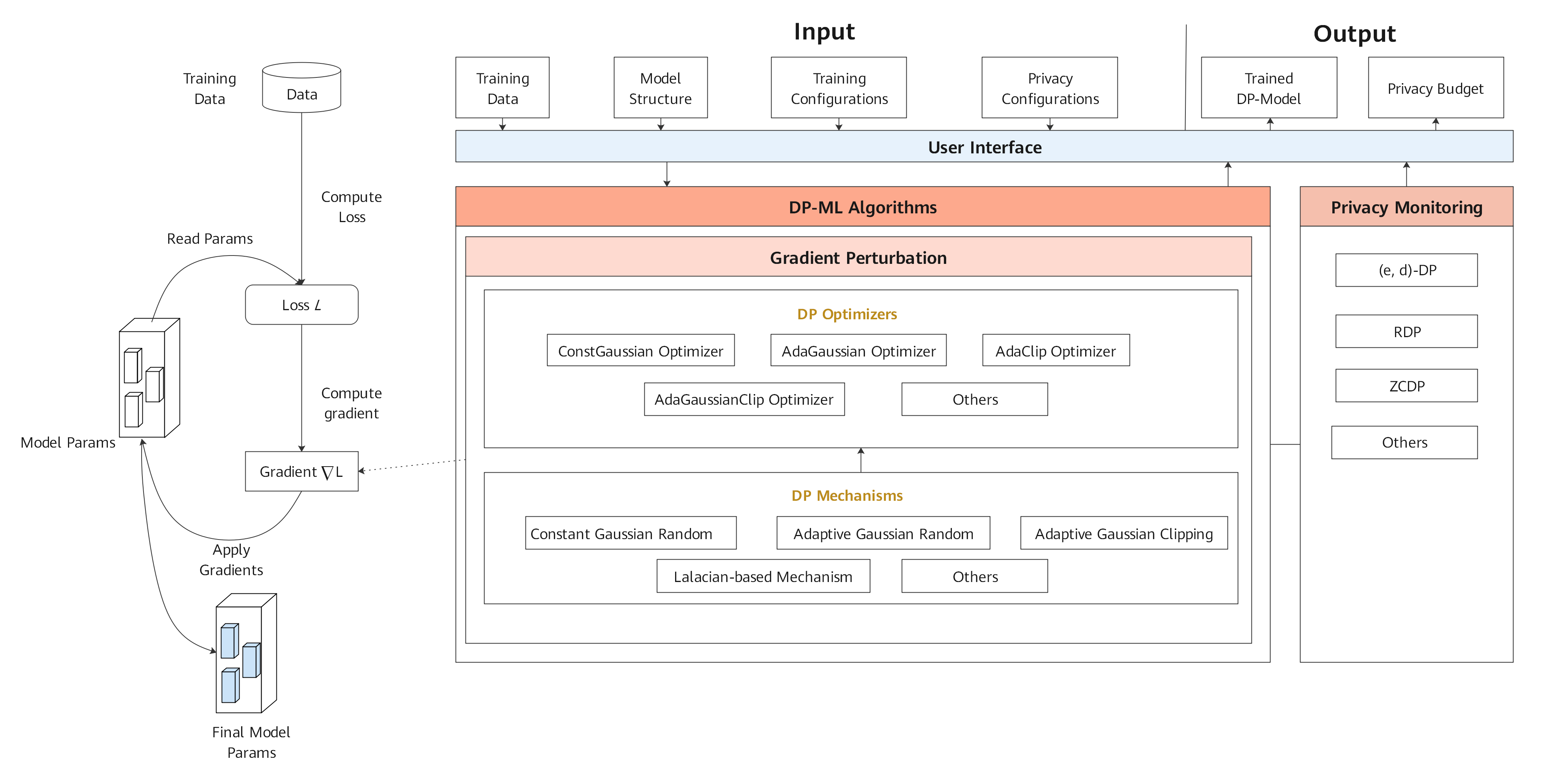

| #### Differential Privacy Training Module | |||

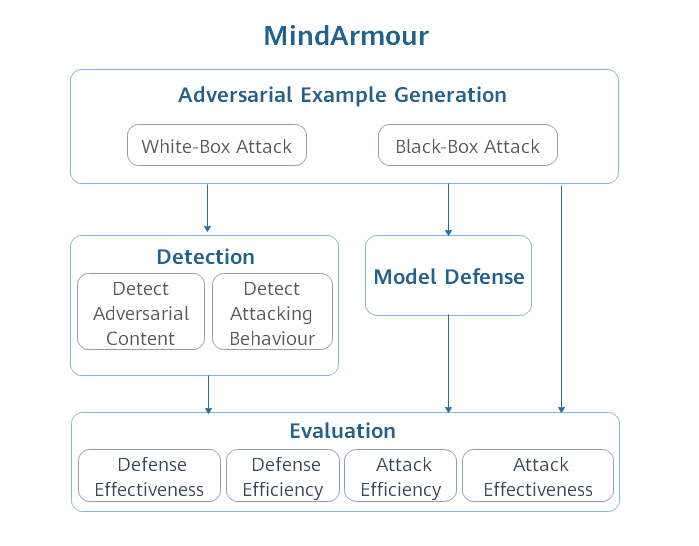

| Differential Privacy Training Module implements the differential privacy optimizer. Currently, `SGD`, `Momentum` and `Adam` are supported. They are differential privacy optimizers based on the Gaussian mechanism. | |||

| This mechanism supports both non-adaptive and adaptive policy. Rényi differential privacy (RDP) and Zero-Concentrated differential privacy(ZCDP) are provided to monitor differential privacy budgets. | |||

| The architecture is shown as follow: | |||

|  | |||

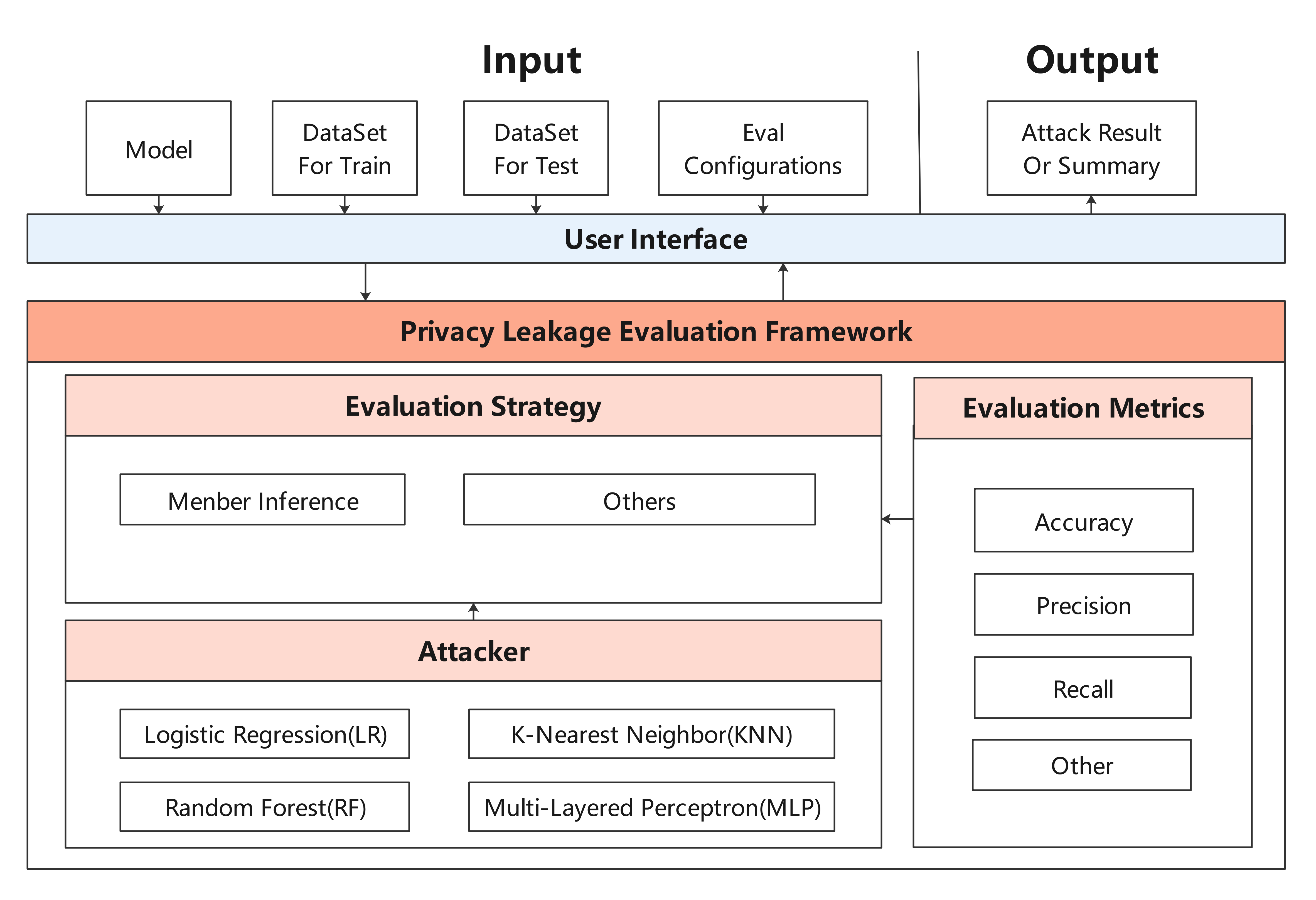

| #### Privacy Leakage Evaluation Module | |||

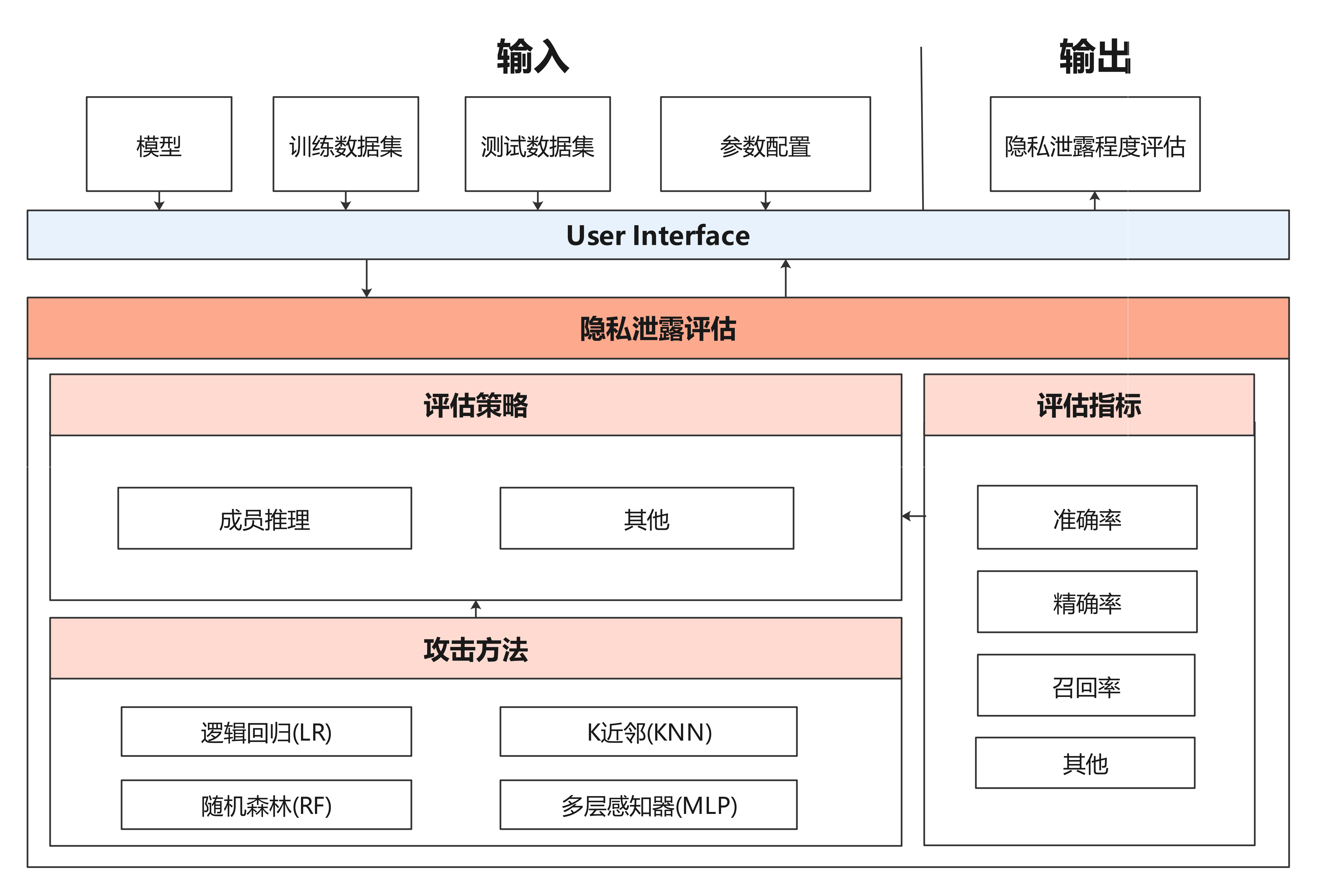

| Privacy Leakage Evaluation Module is used to assess the risk of a model revealing user privacy. The privacy data security of the deep learning model is evaluated by using membership inference method to infer whether the sample belongs to training dataset. | |||

| The architecture is shown as follow: | |||

|  | |||

| ## Starting | |||

| ### System Environment Information Confirmation | |||

| - The hardware platform should be Ascend, GPU or CPU. | |||

| - See our [MindSpore Installation Guide](https://www.mindspore.cn/install) to install MindSpore. | |||

| The versions of MindArmour and MindSpore must be consistent. | |||

| - All other dependencies are included in [setup.py](https://gitee.com/mindspore/mindarmour/blob/master/setup.py). | |||

| This library uses MindSpore to accelerate graph computations performed by many machine learning models. Therefore, installing MindSpore is a pre-requisite. All other dependencies are included in `setup.py`. | |||

| ### Installation | |||

| ### Version dependency | |||

| Due the dependency between MindArmour and MindSpore, please follow the table below and install the corresponding MindSpore verision from [MindSpore download page](https://www.mindspore.cn/versions/en). | |||

| | MindArmour Version | Branch | MindSpore Version | | |||

| | ------------------ | --------------------------------------------------------- | ----------------- | | |||

| | 2.0.0 | [r2.0](https://gitee.com/mindspore/mindarmour/tree/r2.0/) | >=1.7.0 | | |||

| | 1.9.0 | [r1.9](https://gitee.com/mindspore/mindarmour/tree/r1.9/) | >=1.7.0 | | |||

| | 1.8.0 | [r1.8](https://gitee.com/mindspore/mindarmour/tree/r1.8/) | >=1.7.0 | | |||

| | 1.7.0 | [r1.7](https://gitee.com/mindspore/mindarmour/tree/r1.7/) | r1.7 | | |||

| #### Installation by Source Code | |||

| #### Installation for development | |||

| 1. Download source code from Gitee. | |||

| ```bash | |||

| git clone https://gitee.com/mindspore/mindarmour.git | |||

| ``` | |||

| 2. Compile and install in MindArmour directory. | |||

| ```bash | |||

| cd mindarmour | |||

| python setup.py install | |||

| ``` | |||

| ```bash | |||

| git clone https://gitee.com/mindspore/mindarmour.git | |||

| ``` | |||

| #### Installation by pip | |||

| 2. Compile and install in MindArmour directory. | |||

| ```bash | |||

| pip install https://ms-release.obs.cn-north-4.myhuaweicloud.com/{version}/MindArmour/{arch}/mindarmour-{version}-cp37-cp37m-linux_{arch}.whl --trusted-host ms-release.obs.cn-north-4.myhuaweicloud.com -i https://pypi.tuna.tsinghua.edu.cn/simple | |||

| $ cd mindarmour | |||

| $ python setup.py install | |||

| ``` | |||

| > - When the network is connected, dependency items are automatically downloaded during .whl package installation. (For details about other dependency items, see [setup.py](https://gitee.com/mindspore/mindarmour/blob/master/setup.py)). In other cases, you need to manually install dependency items. | |||

| > - `{version}` denotes the version of MindArmour. For example, when you are downloading MindArmour 1.0.1, `{version}` should be 1.0.1. | |||

| > - `{arch}` denotes the system architecture. For example, the Linux system you are using is x86 architecture 64-bit, `{arch}` should be `x86_64`. If the system is ARM architecture 64-bit, then it should be `aarch64`. | |||

| #### `Pip` installation | |||

| ### Installation Verification | |||

| 1. Download whl package from [MindSpore website](https://www.mindspore.cn/versions/en), then run the following command: | |||

| ``` | |||

| pip install mindarmour-{version}-cp37-cp37m-linux_{arch}.whl | |||

| ``` | |||

| Successfully installed, if there is no error message such as `No module named 'mindarmour'` when execute the following command: | |||

| 2. Successfully installed, if there is no error message such as `No module named 'mindarmour'` when execute the following command: | |||

| ```bash | |||

| python -c 'import mindarmour' | |||

| @@ -129,7 +59,7 @@ Guidance on installation, tutorials, API, see our [User Documentation](https://g | |||

| ## Community | |||

| [MindSpore Slack](https://join.slack.com/t/mindspore/shared_invite/enQtOTcwMTIxMDI3NjM0LTNkMWM2MzI5NjIyZWU5ZWQ5M2EwMTQ5MWNiYzMxOGM4OWFhZjI4M2E5OGI2YTg3ODU1ODE2Njg1MThiNWI3YmQ) - Ask questions and find answers. | |||

| - [MindSpore Slack](https://join.slack.com/t/mindspore/shared_invite/enQtOTcwMTIxMDI3NjM0LTNkMWM2MzI5NjIyZWU5ZWQ5M2EwMTQ5MWNiYzMxOGM4OWFhZjI4M2E5OGI2YTg3ODU1ODE2Njg1MThiNWI3YmQ) - Ask questions and find answers. | |||

| ## Contributing | |||

+ 0

- 141

README_CN.md

View File

| @@ -1,141 +0,0 @@ | |||

| # MindArmour | |||

| <!-- TOC --> | |||

| - [MindArmour](#mindarmour) | |||

| - [简介](#简介) | |||

| - [对抗样本鲁棒性模块](#对抗样本鲁棒性模块) | |||

| - [Fuzz Testing模块](#fuzz-testing模块) | |||

| - [隐私保护模块](#隐私保护模块) | |||

| - [差分隐私训练模块](#差分隐私训练模块) | |||

| - [隐私泄露评估模块](#隐私泄露评估模块) | |||

| - [开始](#开始) | |||

| - [确认系统环境信息](#确认系统环境信息) | |||

| - [安装](#安装) | |||

| - [源码安装](#源码安装) | |||

| - [pip安装](#pip安装) | |||

| - [验证是否成功安装](#验证是否成功安装) | |||

| - [文档](#文档) | |||

| - [社区](#社区) | |||

| - [贡献](#贡献) | |||

| - [版本](#版本) | |||

| - [版权](#版权) | |||

| <!-- /TOC --> | |||

| [View English](./README.md) | |||

| ## 简介 | |||

| MindArmour关注AI的安全和隐私问题。致力于增强模型的安全可信、保护用户的数据隐私。主要包含3个模块:对抗样本鲁棒性模块、Fuzz Testing模块、隐私保护与评估模块。 | |||

| ### 对抗样本鲁棒性模块 | |||

| 对抗样本鲁棒性模块用于评估模型对于对抗样本的鲁棒性,并提供模型增强方法用于增强模型抗对抗样本攻击的能力,提升模型鲁棒性。对抗样本鲁棒性模块包含了4个子模块:对抗样本的生成、对抗样本的检测、模型防御、攻防评估。 | |||

| 对抗样本鲁棒性模块的架构图如下: | |||

|  | |||

| ### Fuzz Testing模块 | |||

| Fuzz Testing模块是针对AI模型的安全测试,根据神经网络的特点,引入神经元覆盖率,作为Fuzz测试的指导,引导Fuzzer朝着神经元覆盖率增加的方向生成样本,让输入能够激活更多的神经元,神经元值的分布范围更广,以充分测试神经网络,探索不同类型的模型输出结果和错误行为。 | |||

| Fuzz Testing模块的架构图如下: | |||

|  | |||

| ### 隐私保护模块 | |||

| 隐私保护模块包含差分隐私训练与隐私泄露评估。 | |||

| #### 差分隐私训练模块 | |||

| 差分隐私训练包括动态或者非动态的差分隐私`SGD`、`Momentum`、`Adam`优化器,噪声机制支持高斯分布噪声、拉普拉斯分布噪声,差分隐私预算监测包含ZCDP、RDP。 | |||

| 差分隐私的架构图如下: | |||

|  | |||

| #### 隐私泄露评估模块 | |||

| 隐私泄露评估模块用于评估模型泄露用户隐私的风险。利用成员推理方法来推测样本是否属于用户训练数据集,从而评估深度学习模型的隐私数据安全。 | |||

| 隐私泄露评估模块框架图如下: | |||

|  | |||

| ## 开始 | |||

| ### 确认系统环境信息 | |||

| - 硬件平台为Ascend、GPU或CPU。 | |||

| - 参考[MindSpore安装指南](https://www.mindspore.cn/install),完成MindSpore的安装。 | |||

| MindArmour与MindSpore的版本需保持一致。 | |||

| - 其余依赖请参见[setup.py](https://gitee.com/mindspore/mindarmour/blob/master/setup.py)。 | |||

| ### 安装 | |||

| #### MindSpore版本依赖关系 | |||

| 由于MindArmour与MindSpore有依赖关系,请按照下表所示的对应关系,在[MindSpore下载页面](https://www.mindspore.cn/versions)下载并安装对应的whl包。 | |||

| | MindArmour | 分支 | MindSpore | | |||

| | ---------- | --------------------------------------------------------- | --------- | | |||

| | 2.0.0 | [r2.0](https://gitee.com/mindspore/mindarmour/tree/r2.0/) | >=1.7.0 | | |||

| | 1.9.0 | [r1.9](https://gitee.com/mindspore/mindarmour/tree/r1.9/) | >=1.7.0 | | |||

| | 1.8.0 | [r1.8](https://gitee.com/mindspore/mindarmour/tree/r1.8/) | >=1.7.0 | | |||

| | 1.7.0 | [r1.7](https://gitee.com/mindspore/mindarmour/tree/r1.7/) | r1.7 | | |||

| #### 源码安装 | |||

| 1. 从Gitee下载源码。 | |||

| ```bash | |||

| git clone https://gitee.com/mindspore/mindarmour.git | |||

| ``` | |||

| 2. 在源码根目录下,执行如下命令编译并安装MindArmour。 | |||

| ```bash | |||

| cd mindarmour | |||

| python setup.py install | |||

| ``` | |||

| #### pip安装 | |||

| ```bash | |||

| pip install https://ms-release.obs.cn-north-4.myhuaweicloud.com/{version}/MindArmour/{arch}/mindarmour-{version}-cp37-cp37m-linux_{arch}.whl --trusted-host ms-release.obs.cn-north-4.myhuaweicloud.com -i https://pypi.tuna.tsinghua.edu.cn/simple | |||

| ``` | |||

| > - 在联网状态下,安装whl包时会自动下载MindArmour安装包的依赖项(依赖项详情参见[setup.py](https://gitee.com/mindspore/mindarmour/blob/master/setup.py)),其余情况需自行安装。 | |||

| > - `{version}`表示MindArmour版本号,例如下载1.0.1版本MindArmour时,`{version}`应写为1.0.1。 | |||

| > - `{arch}`表示系统架构,例如使用的Linux系统是x86架构64位时,`{arch}`应写为`x86_64`。如果系统是ARM架构64位,则写为`aarch64`。 | |||

| ### 验证是否成功安装 | |||

| 执行如下命令,如果没有报错`No module named 'mindarmour'`,则说明安装成功。 | |||

| ```bash | |||

| python -c 'import mindarmour' | |||

| ``` | |||

| ## 文档 | |||

| 安装指导、使用教程、API,请参考[用户文档](https://gitee.com/mindspore/docs)。 | |||

| ## 社区 | |||

| 社区问答:[MindSpore Slack](https://join.slack.com/t/mindspore/shared_invite/enQtOTcwMTIxMDI3NjM0LTNkMWM2MzI5NjIyZWU5ZWQ5M2EwMTQ5MWNiYzMxOGM4OWFhZjI4M2E5OGI2YTg3ODU1ODE2Njg1MThiNWI3YmQ)。 | |||

| ## 贡献 | |||

| 欢迎参与社区贡献,详情参考[Contributor Wiki](https://gitee.com/mindspore/mindspore/blob/master/CONTRIBUTING.md)。 | |||

| ## 版本 | |||

| 版本信息参考:[RELEASE](RELEASE.md)。 | |||

| ## 版权 | |||

| [Apache License 2.0](LICENSE) | |||

+ 5

- 373

RELEASE.md

View File

| @@ -1,379 +1,11 @@ | |||

| # MindArmour Release Notes | |||

| ## MindArmour 2.0.0 Release Notes | |||

| ### API Change | |||

| * Add version check with MindSpore. | |||

| ### Contributors | |||

| Thanks goes to these wonderful people: | |||

| Liu Zhidan, Zhang Shukun, Liu Liu, Tang Cong. | |||

| Contributions of any kind are welcome! | |||

| ## MindArmour 1.9.0 Release Notes | |||

| ### API Change | |||

| * Add Chinese version api of natural robustness feature. | |||

| ### Contributors | |||

| Thanks goes to these wonderful people: | |||

| Liu Zhidan, Zhang Shukun, Jin Xiulang, Liu Liu, Tang Cong, Yangyuan. | |||

| Contributions of any kind are welcome! | |||

| ## MindArmour 1.8.0 Release Notes | |||

| ### API Change | |||

| * Add Chinese version of all existed api. | |||

| ### Contributors | |||

| Thanks goes to these wonderful people: | |||

| Zhang Shukun, Liu Zhidan, Jin Xiulang, Liu Liu, Tang Cong, Yangyuan. | |||

| Contributions of any kind are welcome! | |||

| ## MindArmour 1.7.0 Release Notes | |||

| ### Major Features and Improvements | |||

| #### Robustness | |||

| * [STABLE] Real-World Robustness Evaluation Methods | |||

| ### API Change | |||

| * Change value of parameter `mutate_config` in `mindarmour.fuzz_testing.Fuzzer.fuzzing` interface. ([!333](https://gitee.com/mindspore/mindarmour/pulls/333)) | |||

| ### Bug fixes | |||

| * Update version of third-party dependence pillow from more than or equal to 6.2.0 to more than or equal to 7.2.0. ([!329](https://gitee.com/mindspore/mindarmour/pulls/329)) | |||

| ### Contributors | |||

| Thanks goes to these wonderful people: | |||

| Liu Zhidan, Zhang Shukun, Jin Xiulang, Liu Liu. | |||

| Contributions of any kind are welcome! | |||

| # MindArmour 1.6.0 | |||

| ## MindArmour 1.6.0 Release Notes | |||

| ### Major Features and Improvements | |||

| #### Reliability | |||

| * [BETA] Data Drift Detection for Image Data | |||

| * [BETA] Model Fault Injection | |||

| ### Bug fixes | |||

| ### Contributors | |||

| Thanks goes to these wonderful people: | |||

| Wu Xiaoyu,Feng Zhenye, Liu Zhidan, Jin Xiulang, Liu Luobin, Liu Liu, Zhang Shukun | |||

| # MindArmour 1.5.0 | |||

| ## MindArmour 1.5.0 Release Notes | |||

| ### Major Features and Improvements | |||

| #### Reliability | |||

| * [BETA] Reconstruct AI Fuzz and Neuron Coverage Metrics | |||

| ### Bug fixes | |||

| ### Contributors | |||

| Thanks goes to these wonderful people: | |||

| Wu Xiaoyu,Liu Zhidan, Jin Xiulang, Liu Luobin, Liu Liu | |||

| # MindArmour 1.3.0-rc1 | |||

| ## MindArmour 1.3.0 Release Notes | |||

| ### Major Features and Improvements | |||

| #### Privacy | |||

| * [STABLE] Data Drift Detection for Time Series Data | |||

| ### Bug fixes | |||

| * [BUGFIX] Optimization of API description. | |||

| ### Contributors | |||

| Thanks goes to these wonderful people: | |||

| Wu Xiaoyu,Liu Zhidan, Jin Xiulang, Liu Luobin, Liu Liu | |||

| # MindArmour 1.2.0 | |||

| ## MindArmour 1.2.0 Release Notes | |||

| ### Major Features and Improvements | |||

| #### Privacy | |||

| * [STABLE] Tailored-based privacy protection technology (Pynative) | |||

| * [STABLE] Model Inversion. Reverse analysis technology of privacy information | |||

| ### API Change | |||

| #### Backwards Incompatible Change | |||

| ##### C++ API | |||

| [Modify] ... | |||

| [Add] ... | |||

| [Delete] ... | |||

| ##### Java API | |||

| [Add] ... | |||

| #### Deprecations | |||

| ##### C++ API | |||

| ##### Java API | |||

| ### Bug fixes | |||

| [BUGFIX] ... | |||

| ### Contributors | |||

| Thanks goes to these wonderful people: | |||

| han.yin | |||

| # MindArmour 1.1.0 Release Notes | |||

| ## MindArmour | |||

| ### Major Features and Improvements | |||

| * [STABLE] Attack capability of the Object Detection models. | |||

| * Some white-box adversarial attacks, such as [iterative] gradient method and DeepFool now can be applied to Object Detection models. | |||

| * Some black-box adversarial attacks, such as PSO and Genetic Attack now can be applied to Object Detection models. | |||

| ### Backwards Incompatible Change | |||

| #### Python API | |||

| #### C++ API | |||

| ### Deprecations | |||

| #### Python API | |||

| #### C++ API | |||

| ### New Features | |||

| #### Python API | |||

| #### C++ API | |||

| ### Improvements | |||

| #### Python API | |||

| #### C++ API | |||

| ### Bug fixes | |||

| #### Python API | |||

| #### C++ API | |||

| ## Contributors | |||

| Thanks goes to these wonderful people: | |||

| Xiulang Jin, Zhidan Liu, Luobin Liu and Liu Liu. | |||

| Contributions of any kind are welcome! | |||

| # Release 1.0.0 | |||

| ## Major Features and Improvements | |||

| ### Differential privacy model training | |||

| * Privacy leakage evaluation. | |||

| * Parameter verification enhancement. | |||

| * Support parallel computing. | |||

| ### Model robustness evaluation | |||

| * Fuzzing based Adversarial Robustness testing. | |||

| * Parameter verification enhancement. | |||

| ### Other | |||

| * Api & Directory Structure | |||

| * Adjusted the directory structure based on different features. | |||

| * Optimize the structure of examples. | |||

| ## Bugfixes | |||

| ## Contributors | |||

| Thanks goes to these wonderful people: | |||

| Liu Liu, Xiulang Jin, Zhidan Liu and Luobin Liu. | |||

| Contributions of any kind are welcome! | |||

| # Release 0.7.0-beta | |||

| ## Major Features and Improvements | |||

| ### Differential privacy model training | |||

| * Privacy leakage evaluation. | |||

| * Using Membership inference to evaluate the effectiveness of privacy-preserving techniques for AI. | |||

| ### Model robustness evaluation | |||

| * Fuzzing based Adversarial Robustness testing. | |||

| * Coverage-guided test set generation. | |||

| ## Bugfixes | |||

| ## Contributors | |||

| Thanks goes to these wonderful people: | |||

| Liu Liu, Xiulang Jin, Zhidan Liu, Luobin Liu and Huanhuan Zheng. | |||

| Contributions of any kind are welcome! | |||

| # Release 0.6.0-beta | |||

| ## Major Features and Improvements | |||

| ### Differential privacy model training | |||

| * Optimizers with differential privacy | |||

| * Differential privacy model training now supports some new policies. | |||

| * Adaptive Norm policy is supported. | |||

| * Adaptive Noise policy with exponential decrease is supported. | |||

| * Differential Privacy Training Monitor | |||

| * A new monitor is supported using zCDP as its asymptotic budget estimator. | |||

| ## Bugfixes | |||

| ## Contributors | |||

| Thanks goes to these wonderful people: | |||

| Liu Liu, Huanhuan Zheng, XiuLang jin, Zhidan liu. | |||

| Contributions of any kind are welcome. | |||

| # Release 0.5.0-beta | |||

| ## Major Features and Improvements | |||

| ### Differential privacy model training | |||

| * Optimizers with differential privacy | |||

| * Differential privacy model training now supports both Pynative mode and graph mode. | |||

| * Graph mode is recommended for its performance. | |||

| ## Bugfixes | |||

| ## Contributors | |||

| Thanks goes to these wonderful people: | |||

| Liu Liu, Huanhuan Zheng, Xiulang Jin, Zhidan Liu. | |||

| Contributions of any kind are welcome! | |||

| # Release 0.3.0-alpha | |||

| ## Major Features and Improvements | |||

| ### Differential Privacy Model Training | |||

| Differential Privacy is coming! By using Differential-Privacy-Optimizers, one can still train a model as usual, while the trained model preserved the privacy of training dataset, satisfying the definition of | |||

| differential privacy with proper budget. | |||

| * Optimizers with Differential Privacy([PR23](https://gitee.com/mindspore/mindarmour/pulls/23), [PR24](https://gitee.com/mindspore/mindarmour/pulls/24)) | |||

| * Some common optimizers now have a differential privacy version (SGD/Adam). We are adding more. | |||

| * Automatically and adaptively add Gaussian Noise during training to achieve Differential Privacy. | |||

| * Automatically stop training when Differential Privacy Budget exceeds. | |||

| * Differential Privacy Monitor([PR22](https://gitee.com/mindspore/mindarmour/pulls/22)) | |||

| * Calculate overall budget consumed during training, indicating the ultimate protect effect. | |||

| ## Bug fixes | |||

| ## Contributors | |||

| Thanks goes to these wonderful people: | |||

| Liu Liu, Huanhuan Zheng, Zhidan Liu, Xiulang Jin | |||

| Contributions of any kind are welcome! | |||

| # Release 0.2.0-alpha | |||

| ## Major Features and Improvements | |||

| * Add a white-box attack method: M-DI2-FGSM([PR14](https://gitee.com/mindspore/mindarmour/pulls/14)). | |||

| * Add three neuron coverage metrics: KMNCov, NBCov, SNACov([PR12](https://gitee.com/mindspore/mindarmour/pulls/12)). | |||

| * Add a coverage-guided fuzzing test framework for deep neural networks([PR13](https://gitee.com/mindspore/mindarmour/pulls/13)). | |||

| * Update the MNIST Lenet5 examples. | |||

| * Remove some duplicate code. | |||

| ## Bug fixes | |||

| ## Contributors | |||

| Thanks goes to these wonderful people: | |||

| Liu Liu, Huanhuan Zheng, Zhidan Liu, Xiulang Jin | |||

| Contributions of any kind are welcome! | |||

| # Release 0.1.0-alpha | |||

| Initial release of MindArmour. | |||

| ## Major Features | |||

| * Support adversarial attack and defense on the platform of MindSpore. | |||

| * Include 13 white-box and 7 black-box attack methods. | |||

| * Provide 5 detection algorithms to detect attacking in multiple way. | |||

| * Provide adversarial training to enhance model security. | |||

| * Provide 6 evaluation metrics for attack methods and 9 evaluation metrics for defense methods. | |||

| - Support adversarial attack and defense on the platform of MindSpore. | |||

| - Include 13 white-box and 7 black-box attack methods. | |||

| - Provide 5 detection algorithms to detect attacking in multiple way. | |||

| - Provide adversarial training to enhance model security. | |||

| - Provide 6 evaluation metrics for attack methods and 9 evaluation metrics for defense methods. | |||

+ 0

- 69

RELEASE_CN.md

View File

| @@ -1,69 +0,0 @@ | |||

| # MindArmour Release Notes | |||

| [View English](./RELEASE.md) | |||

| ## MindArmour 2.0.0 Release Notes | |||

| ### API Change | |||

| * 增加与MindSpore的版本校验关系。 | |||

| ### 贡献 | |||

| 感谢以下人员做出的贡献: | |||

| Liu Zhidan, Zhang Shukun, Liu Liu, Tang Cong. | |||

| 欢迎以任何形式对项目提供贡献! | |||

| ## MindArmour 1.9.0 Release Notes | |||

| ### API Change | |||

| * 增加自然鲁棒性特性的api中文版本 | |||

| ### 贡献 | |||

| 感谢以下人员做出的贡献: | |||

| Liu Zhidan, Zhang Shukun, Jin Xiulang, Liu Liu, Tang Cong, Yangyuan. | |||

| 欢迎以任何形式对项目提供贡献! | |||

| ## MindArmour 1.8.0 Release Notes | |||

| ### API Change | |||

| * 增加所有特性的api中文版本 | |||

| ### 贡献 | |||

| 感谢以下人员做出的贡献: | |||

| Zhang Shukun, Liu Zhidan, Jin Xiulang, Liu Liu, Tang Cong, Yangyuan. | |||

| 欢迎以任何形式对项目提供贡献! | |||

| ## MindArmour 1.7.0 Release Notes | |||

| ### 主要特性和增强 | |||

| #### 鲁棒性 | |||

| * [STABLE] 自然扰动评估方法 | |||

| ### API Change | |||

| * 接口`mindarmour.fuzz_testing.Fuzzer.fuzzing`的参数`mutate_config`的取值范围变化。 ([!333](https://gitee.com/mindspore/mindarmour/pulls/333)) | |||

| ### Bug修复 | |||

| * 更新第三方依赖pillow的版本从大于等于6.2.0更新为大于等于7.2.0. ([!329](https://gitee.com/mindspore/mindarmour/pulls/329)) | |||

| ### 贡献 | |||

| 感谢以下人员做出的贡献: | |||

| Liu Zhidan, Zhang Shukun, Jin Xiulang, Liu Liu. | |||

| 欢迎以任何形式对项目提供贡献! | |||

BIN

docs/adversarial_robustness_cn.png

View File

BIN

docs/adversarial_robustness_en.png

View File

+ 0

- 618

docs/api/api_python/mindarmour.adv_robustness.attacks.rst

View File

| @@ -1,618 +0,0 @@ | |||

| mindarmour.adv_robustness.attacks | |||

| ================================= | |||

| 本模块包括经典的黑盒和白盒攻击算法,以制作对抗样本。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.FastGradientMethod(network, eps=0.07, alpha=None, bounds=(0.0, 1.0), norm_level=2, is_targeted=False, loss_fn=None) | |||

| 基于梯度计算的单步攻击,扰动的范数包括 'L1'、'L2'和'Linf'。 | |||

| 参考文献:`I. J. Goodfellow, J. Shlens, and C. Szegedy, "Explaining and harnessing adversarial examples," in ICLR, 2015. <https://arxiv.org/abs/1412.6572>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.07。 | |||

| - **alpha** (Union[float, None]) - 单步随机扰动与数据范围的比例。默认值:None。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值, 数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **norm_level** (Union[int, str, numpy.inf]) - 范数类型。 | |||

| 可取值:numpy.inf、1、2、'1'、'2'、'l1'、'l2'、'np.inf'、'inf'、'linf'。默认值:2。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **loss_fn** (Union[loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.RandomFastGradientMethod(network, eps=0.07, alpha=0.035, bounds=(0.0, 1.0), norm_level=2, is_targeted=False, loss_fn=None) | |||

| 使用随机扰动的快速梯度法(Fast Gradient Method)。 | |||

| 基于梯度计算的单步攻击,其对抗性噪声是根据输入的梯度生成的,然后加入随机扰动,从而生成对抗样本。 | |||

| 参考文献:`Florian Tramer, Alexey Kurakin, Nicolas Papernot, "Ensemble adversarial training: Attacks and defenses" in ICLR, 2018 <https://arxiv.org/abs/1705.07204>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.07。 | |||

| - **alpha** (float) - 单步随机扰动与数据范围的比例。默认值:0.035。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **norm_level** (Union[int, str, numpy.inf]) - 范数类型。 | |||

| 可取值:numpy.inf、1、2、'1'、'2'、'l1'、'l2'、'np.inf'、'inf'、'linf'。默认值:2。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **loss_fn** (Union[loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| 异常: | |||

| - **ValueError** - `eps` 小于 `alpha` 。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.FastGradientSignMethod(network, eps=0.07, alpha=None, bounds=(0.0, 1.0), is_targeted=False, loss_fn=None) | |||

| 快速梯度下降法(Fast Gradient Sign Method)攻击计算输入数据的梯度,然后使用梯度的符号创建对抗性噪声。 | |||

| 参考文献:`Ian J. Goodfellow, J. Shlens, and C. Szegedy, "Explaining and harnessing adversarial examples," in ICLR, 2015 <https://arxiv.org/abs/1412.6572>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.07。 | |||

| - **alpha** (Union[float, None]) - 单步随机扰动与数据范围的比例。默认值:None。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.RandomFastGradientSignMethod(network, eps=0.07, alpha=0.035, bounds=(0.0, 1.0), is_targeted=False, loss_fn=None) | |||

| 快速梯度下降法(Fast Gradient Sign Method)使用随机扰动。 | |||

| 随机快速梯度符号法(Random Fast Gradient Sign Method)攻击计算输入数据的梯度,然后使用带有随机扰动的梯度符号来创建对抗性噪声。 | |||

| 参考文献:`F. Tramer, et al., "Ensemble adversarial training: Attacks and defenses," in ICLR, 2018 <https://arxiv.org/abs/1705.07204>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.07。 | |||

| - **alpha** (float) - 单步随机扰动与数据范围的比例。默认值:0.005。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| 异常: | |||

| - **ValueError** - `eps` 小于 `alpha` 。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.LeastLikelyClassMethod(network, eps=0.07, alpha=None, bounds=(0.0, 1.0), loss_fn=None) | |||

| 单步最不可能类方法(Single Step Least-Likely Class Method)是FGSM的变体,它以最不可能类为目标,以生成对抗样本。 | |||

| 参考文献:`F. Tramer, et al., "Ensemble adversarial training: Attacks and defenses," in ICLR, 2018 <https://arxiv.org/abs/1705.07204>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.07。 | |||

| - **alpha** (Union[float, None]) - 单步随机扰动与数据范围的比例。默认值:None。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.RandomLeastLikelyClassMethod(network, eps=0.07, alpha=0.035, bounds=(0.0, 1.0), loss_fn=None) | |||

| 随机最不可能类攻击方法:以置信度最小类别对应的梯度加一个随机扰动为攻击方向。 | |||

| 具有随机扰动的单步最不可能类方法(Single Step Least-Likely Class Method)是随机FGSM的变体,它以最不可能类为目标,以生成对抗样本。 | |||

| 参考文献:`F. Tramer, et al., "Ensemble adversarial training: Attacks and defenses," in ICLR, 2018 <https://arxiv.org/abs/1705.07204>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.07。 | |||

| - **alpha** (float) - 单步随机扰动与数据范围的比例。默认值:0.005。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| 异常: | |||

| - **ValueError** - `eps` 小于 `alpha` 。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.IterativeGradientMethod(network, eps=0.3, eps_iter=0.1, bounds=(0.0, 1.0), nb_iter=5, loss_fn=None) | |||

| 所有基于迭代梯度的攻击的抽象基类。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的对抗性扰动占数据范围的比例。默认值:0.3。 | |||

| - **eps_iter** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.1。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **nb_iter** (int) - 迭代次数。默认值:5。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入样本和原始/目标标签生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (Union[numpy.ndarray, tuple]) - 良性输入样本,用于创建对抗样本。 | |||

| - **labels** (Union[numpy.ndarray, tuple]) - 原始/目标标签。若每个输入有多个标签,将它包装在元组中。 | |||

| 异常: | |||

| - **NotImplementedError** - 此函数在迭代梯度方法中不可用。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.BasicIterativeMethod(network, eps=0.3, eps_iter=0.1, bounds=(0.0, 1.0), is_targeted=False, nb_iter=5, loss_fn=None) | |||

| 基本迭代法(Basic Iterative Method)攻击,一种生成对抗示例的迭代FGSM方法。 | |||

| 参考文献:`A. Kurakin, I. Goodfellow, and S. Bengio, "Adversarial examples in the physical world," in ICLR, 2017 <https://arxiv.org/abs/1607.02533>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的对抗性扰动占数据范围的比例。默认值:0.3。 | |||

| - **eps_iter** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.1。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **nb_iter** (int) - 迭代次数。默认值:5。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 使用迭代FGSM方法生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (Union[numpy.ndarray, tuple]) - 良性输入样本,用于创建对抗样本。 | |||

| - **labels** (Union[numpy.ndarray, tuple]) - 原始/目标标签。若每个输入有多个标签,将它包装在元组中。 | |||

| 返回: | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.MomentumIterativeMethod(network, eps=0.3, eps_iter=0.1, bounds=(0.0, 1.0), is_targeted=False, nb_iter=5, decay_factor=1.0, norm_level='inf', loss_fn=None) | |||

| 动量迭代法(Momentum Iterative Method)攻击,通过在迭代中积累损失函数的梯度方向上的速度矢量,加速梯度下降算法,如FGSM、FGM和LLCM,从而生成对抗样本。 | |||

| 参考文献:`Y. Dong, et al., "Boosting adversarial attacks with momentum," arXiv:1710.06081, 2017 <https://arxiv.org/abs/1710.06081>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的对抗性扰动占数据范围的比例。默认值:0.3。 | |||

| - **eps_iter** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.1。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。 | |||

| 以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **nb_iter** (int) - 迭代次数。默认值:5。 | |||

| - **decay_factor** (float) - 迭代中的衰变因子。默认值:1.0。 | |||

| - **norm_level** (Union[int, str, numpy.inf]) - 范数类型。 | |||

| 可取值:numpy.inf、1、2、'1'、'2'、'l1'、'l2'、'np.inf'、'inf'、'linf'。默认值:numpy.inf。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入数据和原始/目标标签生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (Union[numpy.ndarray, tuple]) - 良性输入样本,用于创建对抗样本。 | |||

| - **labels** (Union[numpy.ndarray, tuple]) - 原始/目标标签。若每个输入有多个标签,将它包装在元组中。 | |||

| 返回: | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.ProjectedGradientDescent(network, eps=0.3, eps_iter=0.1, bounds=(0.0, 1.0), is_targeted=False, nb_iter=5, norm_level='inf', loss_fn=None) | |||

| 投影梯度下降(Projected Gradient Descent)攻击是基本迭代法的变体,在这种方法中,每次迭代之后,扰动被投影在指定半径的p范数球上(除了剪切对抗样本的值,使其位于允许的数据范围内)。这是Madry等人提出的用于对抗性训练的攻击。 | |||

| 参考文献:`A. Madry, et al., "Towards deep learning models resistant to adversarial attacks," in ICLR, 2018 <https://arxiv.org/abs/1706.06083>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的对抗性扰动占数据范围的比例。默认值:0.3。 | |||

| - **eps_iter** (float) - 攻击产生的单步对抗扰动占数据范围的比例。默认值:0.1。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **nb_iter** (int) - 迭代次数。默认值:5。 | |||

| - **norm_level** (Union[int, str, numpy.inf]) - 范数类型。 | |||

| 可取值:numpy.inf、1、2、'1'、'2'、'l1'、'l2'、'np.inf'、'inf'、'linf'。默认值:'numpy.inf'。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 基于BIM方法迭代生成对抗样本。通过带有参数norm_level的投影方法归一化扰动。 | |||

| 参数: | |||

| - **inputs** (Union[numpy.ndarray, tuple]) - 良性输入样本,用于创建对抗样本。 | |||

| - **labels** (Union[numpy.ndarray, tuple]) - 原始/目标标签。若每个输入有多个标签,将它包装在元组中。 | |||

| 返回: | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.DiverseInputIterativeMethod(network, eps=0.3, bounds=(0.0, 1.0), is_targeted=False, prob=0.5, loss_fn=None) | |||

| 多样性输入迭代法(Diverse Input Iterative Method)攻击遵循基本迭代法,并在每次迭代时对输入数据应用随机转换。对输入数据的这种转换可以提高对抗样本的可转移性。 | |||

| 参考文献:`Xie, Cihang and Zhang, et al., "Improving Transferability of Adversarial Examples With Input Diversity," in CVPR, 2019 <https://arxiv.org/abs/1803.06978>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的对抗性扰动占数据范围的比例。默认值:0.3。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **prob** (float) - 对输入样本的转换概率。默认值:0.5。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.MomentumDiverseInputIterativeMethod(network, eps=0.3, bounds=(0.0, 1.0), is_targeted=False, norm_level='l1', prob=0.5, loss_fn=None) | |||

| 动量多样性输入迭代法(Momentum Diverse Input Iterative Method)攻击是一种动量迭代法,在每次迭代时对输入数据应用随机变换。对输入数据的这种转换可以提高对抗样本的可转移性。 | |||

| 参考文献:`Xie, Cihang and Zhang, et al., "Improving Transferability of Adversarial Examples With Input Diversity," in CVPR, 2019 <https://arxiv.org/abs/1803.06978>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **eps** (float) - 攻击产生的对抗性扰动占数据范围的比例。默认值:0.3。 | |||

| - **bounds** (tuple) - 数据的上下界,表示数据范围。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **norm_level** (Union[int, str, numpy.inf]) - 范数类型。 | |||

| 可取值:numpy.inf、1、2、'1'、'2'、'l1'、'l2'、'np.inf'、'inf'、'linf'。默认值:'l1'。 | |||

| - **prob** (float) - 对输入样本的转换概率。默认值:0.5。 | |||

| - **loss_fn** (Union[Loss, None]) - 用于优化的损失函数。如果为None,则输入网络已配备损失函数。默认值:None。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.DeepFool(network, num_classes, model_type='classification', reserve_ratio=0.3, max_iters=50, overshoot=0.02, norm_level=2, bounds=None, sparse=True) | |||

| DeepFool是一种无目标的迭代攻击,通过将良性样本移动到最近的分类边界并跨越边界来实现。 | |||

| 参考文献:`DeepFool: a simple and accurate method to fool deep neural networks <https://arxiv.org/abs/1511.04599>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **num_classes** (int) - 模型输出的标签数,应大于零。 | |||

| - **model_type** (str) - 目标模型的类型。现在支持'classification'和'detection'。默认值:'classification'。 | |||

| - **reserve_ratio** (Union[int, float]) - 攻击后可检测到的对象百分比,仅当model_type='detection'时有效。保留比率应在(0, 1)的范围内。默认值:0.3。 | |||

| - **max_iters** (int) - 最大迭代次数,应大于零。默认值:50。 | |||

| - **overshoot** (float) - 过冲参数。默认值:0.02。 | |||

| - **norm_level** (Union[int, str, numpy.inf]) - 矢量范数类型。可取值:numpy.inf或2。默认值:2。 | |||

| - **bounds** (Union[tuple, list]) - 数据范围的上下界。以(数据最小值,数据最大值)的形式出现。默认值:None。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:True。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入样本和原始标签生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (Union[numpy.ndarray, tuple]) - 输入样本。 | |||

| - 如果 `model_type` ='classification',则输入的格式应为numpy.ndarray。输入的格式可以是(input1, input2, ...)。 | |||

| - 如果 `model_type` ='detection',则只能是一个数组。 | |||

| - **labels** (Union[numpy.ndarray, tuple]) - 目标标签或ground-truth标签。 | |||

| - 如果 `model_type` ='classification',标签的格式应为numpy.ndarray。 | |||

| - 如果 `model_type` ='detection',标签的格式应为(gt_boxes, gt_labels)。 | |||

| 返回: | |||

| - **numpy.ndarray** - 对抗样本。 | |||

| 异常: | |||

| - **NotImplementedError** - `norm_level` 不在[2, numpy.inf, '2', 'inf']中。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.CarliniWagnerL2Attack(network, num_classes, box_min=0.0, box_max=1.0, bin_search_steps=5, max_iterations=1000, confidence=0, learning_rate=5e-3, initial_const=1e-2, abort_early_check_ratio=5e-2, targeted=False, fast=True, abort_early=True, sparse=True) | |||

| 使用L2范数的Carlini & Wagner攻击通过分别利用两个损失生成对抗样本:“对抗损失”可使生成的示例实际上是对抗性的,“距离损失”可以控制对抗样本的质量。 | |||

| 参考文献:`Nicholas Carlini, David Wagner: "Towards Evaluating the Robustness of Neural Networks" <https://arxiv.org/abs/1608.04644>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **num_classes** (int) - 模型输出的标签数,应大于零。 | |||

| - **box_min** (float) - 目标模型输入的下界。默认值:0。 | |||

| - **box_max** (float) - 目标模型输入的上界。默认值:1.0。 | |||

| - **bin_search_steps** (int) - 用于查找距离和置信度之间的最优trade-off常数的二分查找步数。默认值:5。 | |||

| - **max_iterations** (int) - 最大迭代次数,应大于零。默认值:1000。 | |||

| - **confidence** (float) - 对抗样本输出的置信度。默认值:0。 | |||

| - **learning_rate** (float) - 攻击算法的学习率。默认值:5e-3。 | |||

| - **initial_const** (float) - 用于平衡扰动范数和置信度差异的初始trade-off常数。默认值:1e-2。 | |||

| - **abort_early_check_ratio** (float) - 检查所有迭代中所有比率的损失进度。默认值:5e-2。 | |||

| - **targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **fast** (bool) - 如果为True,则返回第一个找到的对抗样本。如果为False,则返回扰动较小的对抗样本。默认值:True。 | |||

| - **abort_early** (bool) - 是否提前终止。 | |||

| - 如果为True,则当损失在一段时间内没有减少,Adam将被中止。 | |||

| - 如果为False,Adam将继续工作,直到到达最大迭代。默认值:True。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:True。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入数据和目标标签生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (numpy.ndarray) - 输入样本。 | |||

| - **labels** (numpy.ndarray) - 输入样本的真值标签或目标标签。 | |||

| 返回: | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.JSMAAttack(network, num_classes, box_min=0.0, box_max=1.0, theta=1.0, max_iteration=1000, max_count=3, increase=True, sparse=True) | |||

| 基于Jacobian的显著图攻击(Jacobian-based Saliency Map Attack)是一种基于输入特征显著图的有目标的迭代攻击。它使用每个类标签相对于输入的每个组件的损失梯度。然后,使用显著图来选择产生最大误差的维度。 | |||

| 参考文献:`The limitations of deep learning in adversarial settings <https://arxiv.org/abs/1511.07528>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 目标模型。 | |||

| - **num_classes** (int) - 模型输出的标签数,应大于零。 | |||

| - **box_min** (float) - 目标模型输入的下界。默认值:0。 | |||

| - **box_max** (float) - 目标模型输入的上界。默认值:1.0。 | |||

| - **theta** (float) - 一个像素的变化率(相对于输入数据范围)。默认值:1.0。 | |||

| - **max_iteration** (int) - 迭代的最大轮次。默认值:1000。 | |||

| - **max_count** (int) - 每个像素的最大更改次数。默认值:3。 | |||

| - **increase** (bool) - 如果为True,则增加扰动。如果为False,则减少扰动。默认值:True。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:True。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 批量生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (numpy.ndarray) - 输入样本。 | |||

| - **labels** (numpy.ndarray) - 目标标签。 | |||

| 返回: | |||

| - **numpy.ndarray** - 对抗样本。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.LBFGS(network, eps=1e-5, bounds=(0.0, 1.0), is_targeted=True, nb_iter=150, search_iters=30, loss_fn=None, sparse=False) | |||

| L-BFGS-B攻击使用有限内存BFGS优化算法来最小化输入与对抗样本之间的距离。 | |||

| 参考文献:`Pedro Tabacof, Eduardo Valle. "Exploring the Space of Adversarial Images" <https://arxiv.org/abs/1510.05328>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 被攻击模型的网络。 | |||

| - **eps** (float) - 攻击步长。默认值:1e-5。 | |||

| - **bounds** (tuple) - 数据的上下界。默认值:(0.0, 1.0) | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:True。 | |||

| - **nb_iter** (int) - lbfgs优化器的迭代次数,应大于零。默认值:150。 | |||

| - **search_iters** (int) - 步长的变更数,应大于零。默认值:30。 | |||

| - **loss_fn** (Functions) - 替代模型的损失函数。默认值:None。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:False。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入数据和目标标签生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (numpy.ndarray) - 良性输入样本,用于创建对抗样本。 | |||

| - **labels** (numpy.ndarray) - 原始/目标标签。 | |||

| 返回: | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.GeneticAttack(model, model_type='classification', targeted=True, reserve_ratio=0.3, sparse=True, pop_size=6, mutation_rate=0.005, per_bounds=0.15, max_steps=1000, step_size=0.20, temp=0.3, bounds=(0, 1.0), adaptive=False, c=0.1) | |||

| 遗传攻击(Genetic Attack)为基于遗传算法的黑盒攻击,属于差分进化算法。 | |||

| 此攻击是由Moustafa Alzantot等人(2018)提出的。 | |||

| 参考文献: `Moustafa Alzantot, Yash Sharma, Supriyo Chakraborty, "GeneticAttack: Practical Black-box Attacks with Gradient-FreeOptimization" <https://arxiv.org/abs/1805.11090>`_。 | |||

| 参数: | |||

| - **model** (BlackModel) - 目标模型。 | |||

| - **model_type** (str) - 目标模型的类型。现在支持'classification'和'detection'。默认值:'classification'。 | |||

| - **targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。 `model_type` ='detection'仅支持无目标攻击,默认值:True。 | |||

| - **reserve_ratio** (Union[int, float]) - 攻击后可检测到的对象百分比,仅当 `model_type` ='detection'时有效。保留比率应在(0, 1)的范围内。默认值:0.3。 | |||

| - **pop_size** (int) - 粒子的数量,应大于零。默认值:6。 | |||

| - **mutation_rate** (Union[int, float]) - 突变的概率,应在(0,1)的范围内。默认值:0.005。 | |||

| - **per_bounds** (Union[int, float]) - 扰动允许的最大无穷范数距离。 | |||

| - **max_steps** (int) - 每个对抗样本的最大迭代轮次。默认值:1000。 | |||

| - **step_size** (Union[int, float]) - 攻击步长。默认值:0.2。 | |||

| - **temp** (Union[int, float]) - 用于选择的采样温度。默认值:0.3。温度越大,个体选择概率之间的差异就越大。 | |||

| - **bounds** (Union[tuple, list, None]) - 数据的上下界。以(数据最小值,数据最大值)的形式出现。默认值:(0, 1.0)。 | |||

| - **adaptive** (bool) - 为True,则打开突变参数的动态缩放。如果为false,则打开静态突变参数。默认值:False。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:True。 | |||

| - **c** (Union[int, float]) - 扰动损失的权重。默认值:0.1。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入数据和目标标签(或ground_truth标签)生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (Union[numpy.ndarray, tuple]) - 输入样本。 | |||

| - 如果 `model_type` ='classification',则输入的格式应为numpy.ndarray。输入的格式可以是(input1, input2, ...)。 | |||

| - 如果 `model_type` ='detection',则只能是一个数组。 | |||

| - **labels** (Union[numpy.ndarray, tuple]) - 目标标签或ground-truth标签。 | |||

| - 如果 `model_type` ='classification',标签的格式应为numpy.ndarray。 | |||

| - 如果 `model_type` ='detection',标签的格式应为(gt_boxes, gt_labels)。 | |||

| 返回: | |||

| - **numpy.ndarray** - 每个攻击结果的布尔值。 | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| - **numpy.ndarray** - 每个样本的查询次数。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.HopSkipJumpAttack(model, init_num_evals=100, max_num_evals=1000, stepsize_search='geometric_progression', num_iterations=20, gamma=1.0, constraint='l2', batch_size=32, clip_min=0.0, clip_max=1.0, sparse=True) | |||

| Chen、Jordan和Wainwright提出的HopSkipJumpAttack是一种基于决策的攻击。此攻击需要访问目标模型的输出标签。 | |||

| 参考文献:`Chen J, Michael I. Jordan, Martin J. Wainwright. HopSkipJumpAttack: A Query-Efficient Decision-Based Attack. 2019. arXiv:1904.02144 <https://arxiv.org/abs/1904.02144>`_。 | |||

| 参数: | |||

| - **model** (BlackModel) - 目标模型。 | |||

| - **init_num_evals** (int) - 梯度估计的初始评估数。默认值:100。 | |||

| - **max_num_evals** (int) - 梯度估计的最大评估数。默认值:1000。 | |||

| - **stepsize_search** (str) - 表示要如何搜索步长; | |||

| - 可取值为'geometric_progression'或'grid_search'。默认值:'geometric_progression'。 | |||

| - **num_iterations** (int) - 迭代次数。默认值:20。 | |||

| - **gamma** (float) - 用于设置二进制搜索阈值theta。默认值:1.0。 | |||

| 对于l2攻击,二进制搜索阈值 `theta` 为 :math:`gamma / d^{3/2}` 。对于linf攻击是 :math:`gamma/d^2` 。默认值:1.0。 | |||

| - **constraint** (str) - 要优化距离的范数。可取值为'l2'或'linf'。默认值:'l2'。 | |||

| - **batch_size** (int) - 批次大小。默认值:32。 | |||

| - **clip_min** (float, optional) - 最小图像组件值。默认值:0。 | |||

| - **clip_max** (float, optional) - 最大图像组件值。默认值:1。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:True。 | |||

| 异常: | |||

| - **ValueError** - `stepsize_search` 不在['geometric_progression','grid_search']中。 | |||

| - **ValueError** - `constraint` 不在['l2', 'linf']中 | |||

| .. py:method:: generate(inputs, labels) | |||

| 在for循环中生成对抗图像。 | |||

| 参数: | |||

| - **inputs** (numpy.ndarray) - 原始图像。 | |||

| - **labels** (numpy.ndarray) - 目标标签。 | |||

| 返回: | |||

| - **numpy.ndarray** - 每个攻击结果的布尔值。 | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| - **numpy.ndarray** - 每个样本的查询次数。 | |||

| .. py:method:: set_target_images(target_images) | |||

| 设置目标图像进行目标攻击。 | |||

| 参数: | |||

| - **target_images** (numpy.ndarray) - 目标图像。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.NES(model, scene, max_queries=10000, top_k=-1, num_class=10, batch_size=128, epsilon=0.3, samples_per_draw=128, momentum=0.9, learning_rate=1e-3, max_lr=5e-2, min_lr=5e-4, sigma=1e-3, plateau_length=20, plateau_drop=2.0, adv_thresh=0.25, zero_iters=10, starting_eps=1.0, starting_delta_eps=0.5, label_only_sigma=1e-3, conservative=2, sparse=True) | |||

| 该类是自然进化策略(Natural Evolutionary Strategies,NES)攻击法的实现。NES使用自然进化策略来估计梯度,以提高查询效率。NES包括三个设置:Query-Limited设置、Partial-Information置和Label-Only设置。 | |||

| - 在'query-limit'设置中,攻击对目标模型的查询数量有限,但可以访问所有类的概率。 | |||

| - 在'partial-info'设置中,攻击仅有权访问top-k类的概率。 | |||

| - 在'label-only'设置中,攻击只能访问按其预测概率排序的k个推断标签列表。 | |||

| 在Partial-Information设置和Label-Only设置中,NES会进行目标攻击,因此用户需要使用set_target_images方法来设置目标类的目标图像。 | |||

| 参考文献:`Andrew Ilyas, Logan Engstrom, Anish Athalye, and Jessy Lin. Black-box adversarial attacks with limited queries and information. In ICML, July 2018 <https://arxiv.org/abs/1804.08598>`_。 | |||

| 参数: | |||

| - **model** (BlackModel) - 要攻击的目标模型。 | |||

| - **scene** (str) - 确定算法的场景,可选值为:'Label_Only'、'Partial_Info'、'Query_Limit'。 | |||

| - **max_queries** (int) - 生成对抗样本的最大查询编号。默认值:10000。 | |||

| - **top_k** (int) - 用于'Partial-Info'或'Label-Only'设置,表示攻击者可用的(Top-k)信息数量。对于Query-Limited设置,此输入应设置为-1。默认值:-1。 | |||

| - **num_class** (int) - 数据集中的类数。默认值:10。 | |||

| - **batch_size** (int) - 批次大小。默认值:128。 | |||

| - **epsilon** (float) - 攻击中允许的最大扰动。默认值:0.3。 | |||

| - **samples_per_draw** (int) - 对偶采样中绘制的样本数。默认值:128。 | |||

| - **momentum** (float) - 动量。默认值:0.9。 | |||

| - **learning_rate** (float) - 学习率。默认值:1e-3。 | |||

| - **max_lr** (float) - 最大学习率。默认值:5e-2。 | |||

| - **min_lr** (float) - 最小学习率。默认值:5e-4。 | |||

| - **sigma** (float) - 随机噪声的步长。默认值:1e-3。 | |||

| - **plateau_length** (int) - 退火算法中使用的平台长度。默认值:20。 | |||

| - **plateau_drop** (float) - 退火算法中使用的平台Drop。默认值:2.0。 | |||

| - **adv_thresh** (float) - 对抗阈值。默认值:0.25。 | |||

| - **zero_iters** (int) - 用于代理分数的点数。默认值:10。 | |||

| - **starting_eps** (float) - Label-Only设置中使用的启动epsilon。默认值:1.0。 | |||

| - **starting_delta_eps** (float) - Label-Only设置中使用的delta epsilon。默认值:0.5。 | |||

| - **label_only_sigma** (float) - Label-Only设置中使用的Sigma。默认值:1e-3。 | |||

| - **conservative** (int) - 用于epsilon衰变的守恒,如果没有收敛,它将增加。默认值:2。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:True。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入数据和目标标签生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (numpy.ndarray) - 良性输入样本。 | |||

| - **labels** (numpy.ndarray) - 目标标签。 | |||

| 返回: | |||

| - **numpy.ndarray** - 每个攻击结果的布尔值。 | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| - **numpy.ndarray** - 每个样本的查询次数。 | |||

| 异常: | |||

| - **ValueError** - 在'Label-Only'或'Partial-Info'设置中 `top_k` 小于0。 | |||

| - **ValueError** - 在'Label-Only'或'Partial-Info'设置中target_imgs为None。 | |||

| - **ValueError** - `scene` 不在['Label_Only', 'Partial_Info', 'Query_Limit']中 | |||

| .. py:method:: set_target_images(target_images) | |||

| 在'Partial-Info'或'Label-Only'设置中设置目标攻击的目标样本。 | |||

| 参数: | |||

| - **target_images** (numpy.ndarray) - 目标攻击的目标样本。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.PointWiseAttack(model, max_iter=1000, search_iter=10, is_targeted=False, init_attack=None, sparse=True) | |||

| 点式攻击(Pointwise Attack)确保使用最小数量的更改像素为每个原始样本生成对抗样本。那些更改的像素将使用二进制搜索,以确保对抗样本和原始样本之间的距离尽可能接近。 | |||

| 参考文献:`L. Schott, J. Rauber, M. Bethge, W. Brendel: "Towards the first adversarially robust neural network model on MNIST", ICLR (2019) <https://arxiv.org/abs/1805.09190>`_。 | |||

| 参数: | |||

| - **model** (BlackModel) - 目标模型。 | |||

| - **max_iter** (int) - 生成对抗图像的最大迭代轮数。默认值:1000。 | |||

| - **search_iter** (int) - 二进制搜索的最大轮数。默认值:10。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **init_attack** (Union[Attack, None]) - 用于查找起点的攻击。默认值:None。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:True。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入样本和目标标签生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (numpy.ndarray) - 良性输入样本,用于创建对抗样本。 | |||

| - **labels** (numpy.ndarray) - 对于有目标的攻击,标签是对抗性的目标标签。对于无目标攻击,标签是ground-truth标签。 | |||

| 返回: | |||

| - **numpy.ndarray** - 每个攻击结果的布尔值。 | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| - **numpy.ndarray** - 每个样本的查询次数。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.PSOAttack(model, model_type='classification', targeted=False, reserve_ratio=0.3, sparse=True, step_size=0.5, per_bounds=0.6, c1=2.0, c2=2.0, c=2.0, pop_size=6, t_max=1000, pm=0.5, bounds=None) | |||

| PSO攻击表示基于粒子群优化(Particle Swarm Optimization)算法的黑盒攻击,属于进化算法。 | |||

| 此攻击由Rayan Mosli等人(2019)提出。 | |||

| 参考文献:`Rayan Mosli, Matthew Wright, Bo Yuan, Yin Pan, "They Might NOT Be Giants: Crafting Black-Box Adversarial Examples with Fewer Queries Using Particle Swarm Optimization", arxiv: 1909.07490, 2019. <https://arxiv.org/abs/1909.07490>`_。 | |||

| 参数: | |||

| - **model** (BlackModel) - 目标模型。 | |||

| - **step_size** (Union[int, float]) - 攻击步长。默认值:0.5。 | |||

| - **per_bounds** (Union[int, float]) - 扰动的相对变化范围。默认值:0.6。 | |||

| - **c1** (Union[int, float]) - 权重系数。默认值:2。 | |||

| - **c2** (Union[int, float]) - 权重系数。默认值:2。 | |||

| - **c** (Union[int, float]) - 扰动损失的权重。默认值:2。 | |||

| - **pop_size** (int) - 粒子的数量,应大于零。默认值:6。 | |||

| - **t_max** (int) - 每个对抗样本的最大迭代轮数,应大于零。默认值:1000。 | |||

| - **pm** (Union[int, float]) - 突变的概率,应在(0,1)的范围内。默认值:0.5。 | |||

| - **bounds** (Union[list, tuple, None]) - 数据的上下界。以(数据最小值,数据最大值)的形式出现。默认值:None。 | |||

| - **targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。 `model_type` ='detection'仅支持无目标攻击,默认值:False。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:True。 | |||

| - **model_type** (str) - 目标模型的类型。现在支持'classification'和'detection'。默认值:'classification'。 | |||

| - **reserve_ratio** (Union[int, float]) - 攻击后可检测到的对象百分比,用于 `model_type` ='detection'模式。保留比率应在(0, 1)的范围内。默认值:0.3。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入数据和目标标签(或ground_truth标签)生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (Union[numpy.ndarray, tuple]) - 输入样本。 | |||

| - 如果 `model_type` ='classification',则输入的格式应为numpy.ndarray。输入的格式可以是(input1, input2, ...)。 | |||

| - 如果 `model_type` ='detection',则只能是一个数组。 | |||

| - **labels** (Union[numpy.ndarray, tuple]) - 目标标签或ground-truth标签。 | |||

| - 如果 `model_type` ='classification',标签的格式应为numpy.ndarray。 | |||

| - 如果 `model_type` ='detection',标签的格式应为(gt_boxes, gt_labels)。 | |||

| 返回: | |||

| - **numpy.ndarray** - 每个攻击结果的布尔值。 | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| - **numpy.ndarray** - 每个样本的查询次数。 | |||

| .. py:class:: mindarmour.adv_robustness.attacks.SaltAndPepperNoiseAttack(model, bounds=(0.0, 1.0), max_iter=100, is_targeted=False, sparse=True) | |||

| 增加椒盐噪声的量以生成对抗样本。 | |||

| 参数: | |||

| - **model** (BlackModel) - 目标模型。 | |||

| - **bounds** (tuple) - 数据的上下界。以(数据最小值,数据最大值)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **max_iter** (int) - 生成对抗样本的最大迭代。默认值:100。 | |||

| - **is_targeted** (bool) - 如果为True,则为目标攻击。如果为False,则为无目标攻击。默认值:False。 | |||

| - **sparse** (bool) - 如果为True,则输入标签为稀疏编码。如果为False,则输入标签为one-hot编码。默认值:True。 | |||

| .. py:method:: generate(inputs, labels) | |||

| 根据输入数据和目标标签生成对抗样本。 | |||

| 参数: | |||

| - **inputs** (numpy.ndarray) - 原始的、未受扰动的输入。 | |||

| - **labels** (numpy.ndarray) - 目标标签。 | |||

| 返回: | |||

| - **numpy.ndarray** - 每个攻击结果的布尔值。 | |||

| - **numpy.ndarray** - 生成的对抗样本。 | |||

| - **numpy.ndarray** - 每个样本的查询次数。 | |||

+ 0

- 96

docs/api/api_python/mindarmour.adv_robustness.defenses.rst

View File

| @@ -1,96 +0,0 @@ | |||

| mindarmour.adv_robustness.defenses | |||

| ================================== | |||

| 该模块包括经典的防御算法,用于防御对抗样本,增强模型的安全性和可信性。 | |||

| .. py:class:: mindarmour.adv_robustness.defenses.AdversarialDefense(network, loss_fn=None, optimizer=None) | |||

| 使用给定的对抗样本进行对抗训练。 | |||

| 参数: | |||

| - **network** (Cell) - 要防御的MindSpore网络。 | |||

| - **loss_fn** (Union[Loss, None]) - 损失函数。默认值:None。 | |||

| - **optimizer** (Cell) - 用于训练网络的优化器。默认值:None。 | |||

| .. py:method:: defense(inputs, labels) | |||

| 通过使用输入样本进行训练来增强模型。 | |||

| 参数: | |||

| - **inputs** (numpy.ndarray) - 输入样本。 | |||

| - **labels** (numpy.ndarray) - 输入样本的标签。 | |||

| 返回: | |||

| - **numpy.ndarray** - 防御操作的损失。 | |||

| .. py:class:: mindarmour.adv_robustness.defenses.AdversarialDefenseWithAttacks(network, attacks, loss_fn=None, optimizer=None, bounds=(0.0, 1.0), replace_ratio=0.5) | |||

| 利用特定的攻击方法和给定的对抗例子进行对抗训练,以增强模型的鲁棒性。 | |||

| 参数: | |||

| - **network** (Cell) - 要防御的MindSpore网络。 | |||

| - **attacks** (list[Attack]) - 攻击方法序列。 | |||

| - **loss_fn** (Union[Loss, None]) - 损失函数。默认值:None。 | |||

| - **optimizer** (Cell) - 用于训练网络的优化器。默认值:None。 | |||

| - **bounds** (tuple) - 数据的上下界。以(clip_min, clip_max)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **replace_ratio** (float) - 用对抗样本替换原始样本的比率,必须在0到1之间。默认值:0.5。 | |||

| 异常: | |||

| - **ValueError** - `replace_ratio` 不在0和1之间。 | |||

| .. py:method:: defense(inputs, labels) | |||

| 通过使用从输入样本生成的对抗样本进行训练来增强模型。 | |||

| 参数: | |||

| - **inputs** (numpy.ndarray) - 输入样本。 | |||

| - **labels** (numpy.ndarray) - 输入样本的标签。 | |||

| 返回: | |||

| - **numpy.ndarray** - 对抗性防御操作的损失。 | |||

| .. py:class:: mindarmour.adv_robustness.defenses.NaturalAdversarialDefense(network, loss_fn=None, optimizer=None, bounds=(0.0, 1.0), replace_ratio=0.5, eps=0.1) | |||

| 基于FGSM的对抗性训练。 | |||

| 参考文献:`A. Kurakin, et al., "Adversarial machine learning at scale," in ICLR, 2017 <https://arxiv.org/abs/1611.01236>`_。 | |||

| 参数: | |||

| - **network** (Cell) - 要防御的MindSpore网络。 | |||

| - **loss_fn** (Union[Loss, None]) - 损失函数。默认值:None。 | |||

| - **optimizer** (Cell) - 用于训练网络的优化器。默认值:None。 | |||

| - **bounds** (tuple) - 数据的上下界。以(clip_min, clip_max)的形式出现。默认值:(0.0, 1.0)。 | |||

| - **replace_ratio** (float) - 用对抗样本替换原始样本的比率。默认值:0.5。 | |||

| - **eps** (float) - 攻击方法(FGSM)的步长。默认值:0.1。 | |||

| .. py:class:: mindarmour.adv_robustness.defenses.ProjectedAdversarialDefense(network, loss_fn=None, optimizer=None, bounds=(0.0, 1.0), replace_ratio=0.5, eps=0.3, eps_iter=0.1, nb_iter=5, norm_level='inf') | |||

| 基于PGD的对抗性训练。 | |||